Last week the Stanford Institute for Human-Centered Artificial Intelligence (HAI) hosted its 2022 Spring Conference. The event was co-hosted by Fei-Fei Li, AI pioneer and Professor in Stanford’s computer science department. Fei-Fei is also co-director of HAI. The conference focused on three key advances in artificial intelligence – accountable AI, foundation models, and embodied AI in the virtual and real world. I attended the conference along with Radical Ventures Partner Rob Toews thanks to Fei-Fei’s invitation.

Below I am sharing a short overview of each of the main topics explored during the conference and selected salient quotes. Please note that the quotes may have been edited for clarity and length.

The first session examined Accountable AI – the ethical, legal, safety and privacy issues related to AI systems. There is growing interest within the AI community in better understanding AI decisions, maintaining data privacy, and instilling fairness in AI models. While some of these issues may require societal and regulatory solutions, advances in AI research point to possible technological solutions for producing interpretable AI systems and systems that work effectively with data that has been obscured to protect people’s privacy.

“A misconception is that regulation is the best solution to algorithmic discrimination and all we need for safe, accountable AI. We saw [The United States’] first AI-specific regulation in New York City earlier this year. The law calls out three categories that people have a right not to be discriminated against based on: their age, race, or gender. And as we all know, there are many other minority categories that would otherwise be implied in the existing laws. In some ways, by calling out these three protected categories exclusively, with regard to AI, the lawmakers in NYC… actually made a huge oversight in omitting for instance those who have disabilities.” – Liz O’Sullivan, CEO, Parity

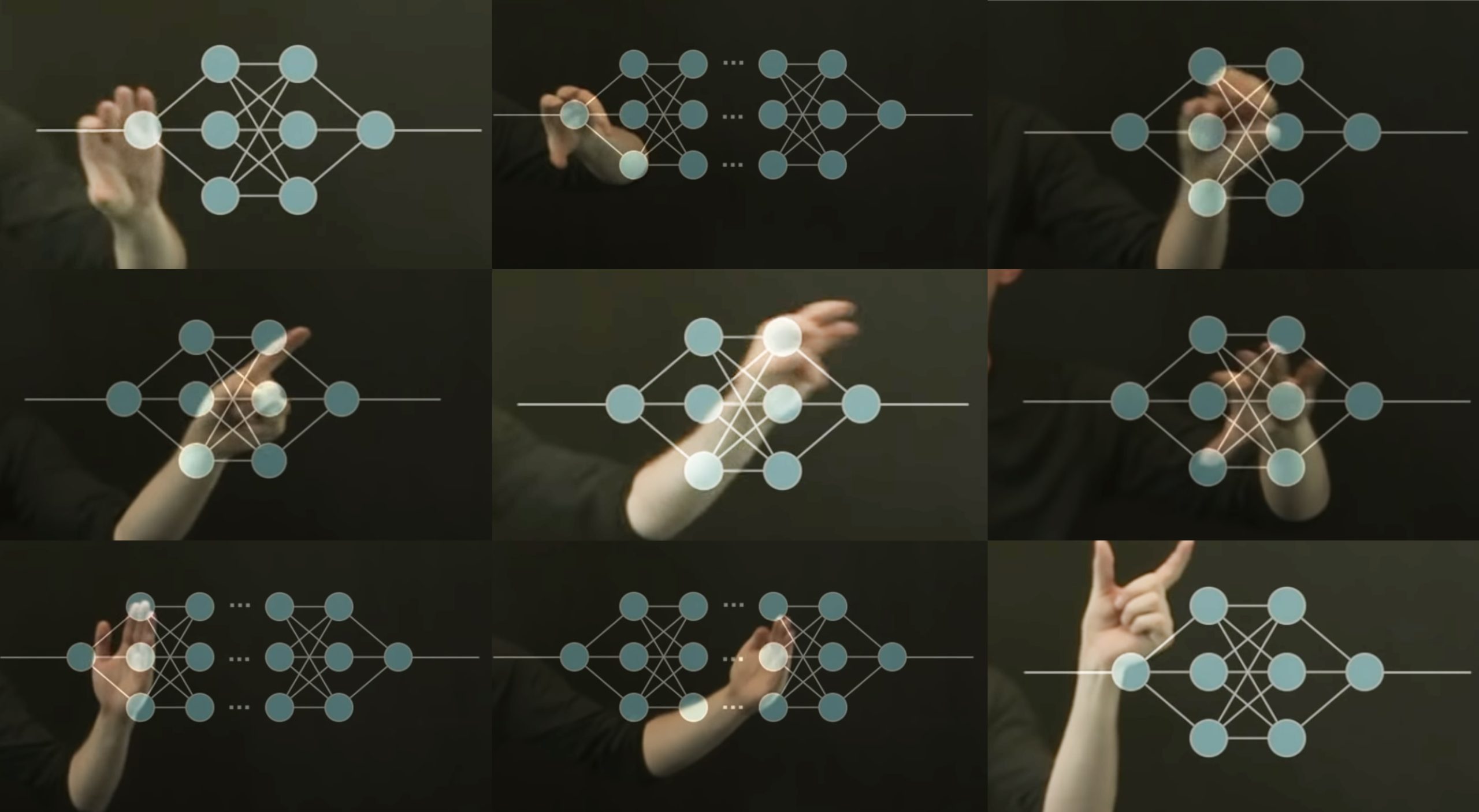

The second session examined Foundation Models. Many AI systems are built in the same way: train one versatile model on a huge amount of data and adapt it to many applications. These models are called foundation models. Technologists on the panel discussed the various applications of foundation models from social sciences research to multi-modal learning and large language models. Professor Rob Reich acknowledged the massive progress in the field. He also encouraged the technologists to rapidly accelerate the development and organization of professional ethics, pointing to the biomedical industry as an example.

“We live in an age of AI where AI is transforming every aspect of life, personal life, professional life, political life and these enormous foundation models are the latest and potentially most powerful of the transformations that AI will bring about. In this race between disruption and democracy, the technologists and the scientists are always pushing ahead more quickly than how regulators can knowledgeably act. We need to think about professional ethics and norms that organize the work that we do as AI scientists because we shouldn’t count on the ability of regulators to keep up with the frontier.

I offer up an invitation to you to imagine a comparison of the professional norms that guide the field of biomedical research as compared to AI. In biomedical research we have things as old as the Hippocratic Oath, we have professional licensure requirements, we have the Institutional Review Board that guides any type of work with human subjects that happens in universities and pharmaceutical companies. AI is by contrast a developmentally immature domain of scientific inquiry. It has only been around, computer science, as a field, formally speaking, since the 1950s and ’60s. And given recent developments in AI, this neural net age, it’s much younger still. To put it in a provocative way, what I invite you to think about is the idea that AI scientists lack a dense institutional footprint of professional norms and ethics… we need a rapid acceleration of professional norms and ethics to steward our collective work as AI scientists.” – Rob Reich, Professor of Political Science, Stanford University

The final session examined AI’s applications in Physical/Simulated Worlds. In recent years, AI has progressed in 3D computer vision, computer graphics, and computational optics to model the physical world. Advances in these areas have resulted in progress in AR/VR technologies, a resurgence of work linking AI and robotics, and new frontiers of embodied AI. The conference focused on some of these advances and their social implications.

“We live in a world that is 3D and dynamic. It has a lot of people that are interacting with each other and the environment… Thinking about capturing this reality, we can look at the earliest painting created 45,000 years ago. Over these 45,000 years, we got much better at painting and figured out how to do perspective effects and even model the lighting effects to capture the reality of that day. Of course, this led to the photograph and then to the development of film. These are the mediums of reality capture that are in our phones and every day. But these devices, mediums, drop a significant aspect of the reality that it is 3D and dynamic. So what kind of 3D capture devices exist? Computer vision people have been working on structure from motion for a very long time, which you can find in your Google Maps. But it’s not really that accessible… So what is the next direction? We’re thinking about photorealistic memory – to be able to capture motion in 3D, in a casual way.” – Angjoo Kanazawa, Assistant Professor, Department of Electrical Engineering and Computer Sciences, University of California, Berkeley

Anna Lembke, Professor and Medical Director of Addiction Medicine at Stanford University School of Medicine, also challenged the panel to take care of vulnerable minorities who could become addicted to physical/simulated-world technology, to better understand potential derealization in individuals, and to consider the negative impacts of constant social comparison through social media. “We cannot look at this technology without asking the deeper existential question of why do we want this technology and what are we going to do with it?”

The conference can be watched in full on the Stanford HAI website.

AI News This Week

- A deep-learning algorithm could detect earthquakes by filtering out city noise (MIT Technology Review)

Amongst the rumbling of traffic, trains, and construction, it’s difficult to discern an approaching earthquake in bustling cities. Researchers from Stanford have found a way to get a clearer signal. They have created an algorithm called UrbanDenoiser, described in a paper in Science Advances, that promises to improve the detection capacity of earthquake monitoring networks in cities and other built-up areas. In tests on data from California, UrbanDenoiser effectively suppressed high noise levels, although false positives and false negatives should still be expected in denoised data and need to be assessed. Deep learning could be an excellent tool for seismologists, making their work quicker and more accurate by helping to cut through large volumes of data.

- Pathways Language Model (Google)

Google released PaLM, a 540-billion-parameter language model, demonstrating applications in language generation, reasoning, and code tasks. PaLM demonstrates an ability to create explicit explanations for scenarios that require a complex combination of multi-step logical inference, world knowledge, and deep language understanding. For example, it can provide high-quality explanations for novel jokes not found on the web.

- The 11 commandments of hugging robots (IEEE Spectrum)

Researchers are teaching robots to give better hugs. Why would the simple act of hugging demand so much research effort? As the article states, “Hugs are interactive, emotional, and complex, and giving a good hug (especially to someone you do not know well or have never hugged before) is challenging. It takes a lot of social experience and intuition, which is another way of saying that it is a hard robotics problem because social experience and intuition are things that robots tend not to be great at.” The goal is not to have robotic embraces supplant human hugs but to supplement physical human comfort when human contact is difficult or impossible.

- Learning equations for extrapolation and control (arXiv)

Breakthroughs in determining mathematical expressions from a sequence of numbers reflect important advances in developing AI systems capable of abstract reasoning. Converting a sequence of numbers into a mathematical expression is analogous to translating one natural language into another. Neural networks have proven adept at discovering the mathematical expressions that generate particular number patterns. The latest step in this research uses transformer models to extend that success to a further class of expressions, demonstrating again that transformers excel at learning underlying patterns in sequences, not only in natural language.

- AI around the world: Announcing our first five published datasets (Lacuna Fund)

Machine learning has shown great potential to revolutionize everything from how farmers increase their crop yields, to how governments communicate with their citizens during natural disasters, to how healthcare providers respond to global pandemics. But in low- and middle-income contexts around the world, a lack of labeled and unbiased data puts the benefits of machine learning out of reach. In many cases, the data required to build AI applications for real-world problems doesn’t exist. Funded in part by Canada’s IDRC, Lacuna Fund released its first datasets, including a Nigerian Twitter sentiment corpus for multilingual sentiment analysis and a dataset for crop phenology monitoring of smallholder farmers’ fields. These datasets add to other efforts, like the pan-African Masakhane group, that improve African language representation in our natural language data.

Radical Reads is edited by Leah Morris (Senior Director, Velocity Program, Radical Ventures).