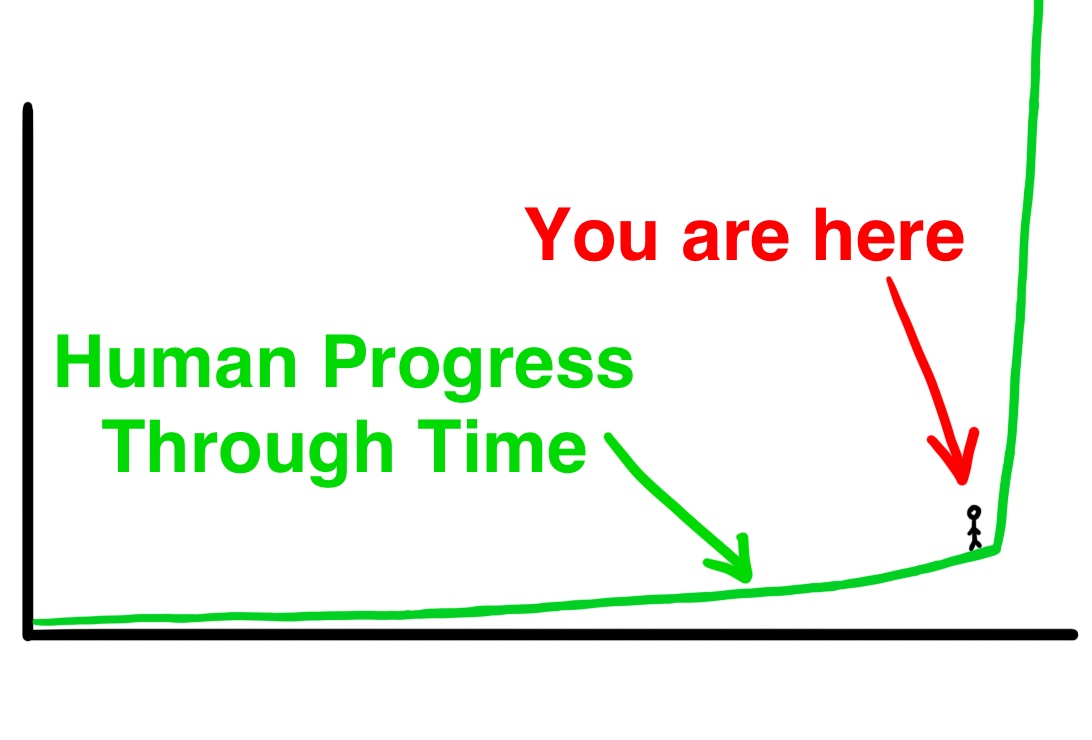

As we have entered the era of foundation models and generative AI in recent years, the number of data points on which AI models are trained has risen from millions to billions to trillions. With so much focus on data quantity, the importance of data quality remains remarkably underappreciated.

AI models are a reflection of the data on which they are trained – models are what they eat. The specifics of the data on which a model is trained generally have a far greater impact on that model's performance than the specifics of the model's architecture. Training models on the right data can drive dramatic improvements in model performance and – just as importantly – in model efficiency.

Data curation for self-supervised learning remains a challenging, cutting-edge research problem, one that only the world's top AI research labs have been able to tackle effectively. Until now.

Radical’s new portfolio company, Datology, was founded to democratize this critical part of the AI infrastructure stack, making model training more accessible to all through better data.

Led by CEO Ari Morcos, a former senior research scientist at Meta and DeepMind, Datology’s founding team includes many of the world’s foremost experts in the field of data research for AI models.

Radical is thrilled to invest in Datology’s seed round alongside our friends at Amplify Partners and a long list of AI legends including Geoff Hinton, Yann LeCun, Jeff Dean, Adam D’Angelo, Cohere co-founders Aidan Gomez and Ivan Zhang, Naveen Rao, Douwe Kiela and Jonathan Frankle.

The company is actively hiring and onboarding early customers. If you are excited about the importance of data to AI, please see here for more details.

AI News This Week

-

How a shifting AI chip market will shape Nvidia’s future (Wall Street Journal)

The AI chip market, long dominated by Nvidia, is shifting from a focus on training AI models toward deploying them, a transition that promises a vast and more competitive landscape. Nvidia, which built its fortune supplying high-end GPUs for AI training, now draws over 40% of its business from the deployment of AI systems, a sign of the move toward inference chips. These chips run AI models after training, generating text and images for an expanding user base. The focus on inference reflects a broader industry realization that the cost and efficiency of deploying AI are becoming as important as the training phase. Big tech firms and startups alike, including Radical Ventures portfolio company Untether AI, are exploring more cost-effective, specialized solutions for inference tasks.

-

Artificial Intelligence & Machine Learning Report – Q4 2023 (PitchBook)

In the latest Emerging Tech Research report by PitchBook, AI and machine learning startups concluded the fourth quarter with $22.3 billion in funding across 1,665 transactions. Powering these startups are AI-specific data centres, whose specialized infrastructure and operations are designed to meet the rigors of AI workloads. A few upstarts are emerging in the AI data centre space to challenge the hyperscalers. The Q4 2023 report also highlights ambitious startups in generative AI, including Radical Ventures portfolio companies Cohere and Orbital Materials.

-

OpenAI rival Cohere says some AI startups build Bugatti sports cars, ‘We make F-150s’ (CNBC)

This week Radical Ventures portfolio company Cohere opened an office in New York City, which it sees as a critical talent market and a way to connect directly with its customers and partners in the city. Cohere is betting on generative AI for enterprise use rather than on consumer chatbots. Cohere President Martin Kon says many of the hot AI startups on the market today are building the equivalent of fancy sports cars, while Cohere's product is more like a heavy-duty truck. "If you're looking for vehicles for your field technical service department, and I take you for a test drive in a Bugatti, you're going to be impressed by how fast and how well it performs," Kon told CNBC in an interview. However, he said, the price, coupled with the lack of certain attributes, makes such cars inappropriate for an enterprise.

-

The AI project pushing local languages to replace French in Mali’s schools (Rest of World)

RobotsMali, supported by the Malian government, uses AI tools such as ChatGPT, Google Translate, and Playground to develop educational content in Mali's national languages, an effort sparked by the country's 2023 shift from French to local languages in education. Spearheaded by volunteers, the initiative has produced over 100 books in Bambara, reaching more than 300 children and showcasing AI's role in cultural preservation and educational accessibility. Despite concerns that the shift could limit students' global opportunities, RobotsMali's efforts are praised for deepening understanding of local culture and addressing educational challenges in Mali.

-

Research: The boundary of neural network trainability is fractal (Sohl-Dickstein)

Fractals such as the Mandelbrot and Julia sets are produced by repeatedly applying a simple formula and recording which starting values stay bounded and which blow up to infinity. Training a neural network is similarly iterative: each gradient-descent step updates the model's parameters, and depending on hyperparameters such as the learning rate, training either converges or diverges. Training is highly sensitive to these hyperparameters, and small changes can decide whether it succeeds or fails. Inspired by how fractals are generated, Sohl-Dickstein explored how varying these hyperparameters affects a network's ability to learn. Across a wide range of configurations, the experiments showed that the boundary between hyperparameters that train successfully and those that diverge is intricately detailed, much like a fractal.
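To make the analogy concrete, here is a minimal Python sketch that asks the same "bounded or blows up?" question of two iterations: the Mandelbrot update z ← z² + c, and full-batch gradient descent with separate learning rates for each layer. It assumes a toy two-layer scalar linear model f(x) = a·b·x fitted to a hypothetical dataset (y = 0.8x); this is not the setup from Sohl-Dickstein's experiments, which trained small neural networks, and the names `mandelbrot_escapes`, `trains` and `trainability_map` are illustrative only. Sweeping the two learning rates produces a trainable/divergent map of the kind the research examines, though this coarse toy is not claimed to show fractal structure.

```python
import math


def mandelbrot_escapes(c: complex, max_iter: int = 100, bound: float = 2.0) -> bool:
    """Iterate z <- z*z + c from z = 0 and report whether |z| ever exceeds bound."""
    z = 0j
    for _ in range(max_iter):
        z = z * z + c
        if abs(z) > bound:
            return True
    return False


# Hypothetical toy dataset (an assumption for illustration): y = 0.8 * x.
XS = [-1.0, -0.5, 0.5, 1.0]
YS = [0.8 * x for x in XS]


def loss(a: float, b: float) -> float:
    """Mean squared error of the two-layer scalar linear model f(x) = a * b * x."""
    total = 0.0
    for x, y in zip(XS, YS):
        r = a * b * x - y
        total += r * r  # multiply rather than ** so float overflow gives inf, not an exception
    return total / len(XS)


def trains(lr_a: float, lr_b: float, steps: int = 200, threshold: float = 1e6) -> bool:
    """Run full-batch gradient descent with a separate learning rate per layer.

    Returns False if the loss becomes non-finite or exceeds `threshold`
    (treated as divergence), True otherwise.
    """
    a, b = 0.5, 0.5  # fixed, hypothetical initialization
    n = len(XS)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to each layer's weight.
        grad_a = grad_b = 0.0
        for x, y in zip(XS, YS):
            r = a * b * x - y
            grad_a += 2.0 * r * b * x
            grad_b += 2.0 * r * a * x
        # Simultaneous update of both layers.
        a, b = a - lr_a * grad_a / n, b - lr_b * grad_b / n
        current = loss(a, b)
        if not math.isfinite(current) or current > threshold:
            return False
    return True


def trainability_map(lrs: list[float]) -> list[str]:
    """One text row per lr_a, one character per lr_b: '#' = trains, '.' = diverges."""
    return ["".join("#" if trains(la, lb) else "." for lb in lrs) for la in lrs]
```

For example, `print("\n".join(trainability_map([0.05 * k for k in range(1, 21)])))` prints a 20×20 text map. Re-running the sweep on a finer grid around the border between '#' and '.' is the analogue of zooming into the edge of a fractal.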

Radical Reads is edited by Ebin Tomy.