Over the course of a seven-decade career in which he received two Nobel Prizes, the founding father of molecular biology, Linus Pauling, often returned to a favourite saying: “The best way to have a good idea is to have lots of ideas.”

An idea, of course, is the domain of the human mind. Breakthrough advances in generative AI technology, however, are changing the notion of what it means to substantiate an idea, offering new mechanisms for creation and iteration that were – until just recently – entirely bound by our biological capacity.

The technology commentator Ben Thompson explored this extraordinary moment in an article last week, offering a short history of the “idea propagation value chain.” For millennia, Thompson argues, the distribution of human ideas was constrained by our proximity to the source – literally, the need to be physically present when an idea was passed down through the spoken word. While reading and writing somewhat relieved this bottleneck, it wasn’t until the advent of the printing press that ideas could be economically distributed. Four hundred years later, the Internet would scale humanity’s access to ideas. While the Web solved for distribution, one final barrier remained. As Thompson puts it, “I have plenty of ideas, and thanks to the Internet, the ability to distribute them around the globe; however, I still need to write them down, just as an artist needs to create an image, or a musician needs to write a song.”

Enter generative AI, which stands to unclog the idea substantiation bottleneck. Generative AI presents ethical issues and risks, but it is also changing the relationship between an idea and its realization.

In his most recent Forbes column, Radical Partner Rob Toews offers four predictions on text-to-image AI, perhaps the most attention-grabbing and fast-moving subcategory of generative AI today. We share an excerpt from the article below, which you can read in full at Forbes.

The biggest business opportunities and best business models for this technology have yet to be discovered.

The primary use case that has driven adoption of text-to-image AI to date has been sheer novelty and curiosity on the part of individual users. And no wonder—as anyone who has played around with one of these models can attest, it is an exhilarating and engaging experience, especially at first.

But over the longer term, casual use by individual hobbyists is not by itself likely to sustain massive new businesses.

What use cases will unleash vast enterprise value creation and present the most compelling business opportunities for this technology? Put simply, what are the “killer apps” for text-to-image AI?

One application that immediately comes to mind is advertising. Advertising is visual in nature, making it a natural fit for these generative AI models. And after all, advertising powers the business models of technology giants like Alphabet and Facebook, among the most successful businesses in history.

Some brands, for instance Kraft Heinz, have already begun experimenting with AI models like DALL-E 2 to produce new advertising content. No doubt we will see a lot more of this. But—to be frank—let us all hope that we find more meaningful use cases for this incredible new technology than simply more advertising.

Taking a step back, consider that these AI models make it possible to generate and iterate upon any visual content quickly, affordably, and imaginatively, without the need for any special expertise or training. When we frame the scope of the technology this broadly, it becomes more evident that all sorts of transformative, disruptive business opportunities should emerge.

Perhaps the most intuitive use case for this technology is to create art. The global market size for fine art is $65 billion. Even setting aside this high end of the market, there are numerous more quotidian uses for art to which text-to-image AI could be profitably applied: book covers, magazine covers, postcards, posters, music album designs, wallpaper, digital media, and so on.

Take stock images as an example. Stock imagery may seem like a relatively niche market, but by itself it represents a multi-billion-dollar opportunity, with publicly traded competitors including Getty Images and Shutterstock. These businesses face existential disruption from generative AI.

Longer term, the design (and thus production) of any physical product—cars, furniture, clothes—could be transformed as generative AI models are used to dream up novel features and designs that captivate consumers.

Relatedly, text-to-image AI may influence architecture and building design by “proposing” unique, unexpected new structures and layouts that in turn inspire human architects. Initial work along these lines is already being pursued today.

Alongside the question of killer applications is the related but distinct topic of how the competitive landscape in this category will evolve, and in turn which product and go-to-market strategies will prove most effective.

Early movers like OpenAI and Midjourney have positioned themselves as horizontal, sector-agnostic providers of the core AI technology. They have built general-purpose text-to-image models, made them available to customers via API (with pricing on a pay-per-use basis), and left it to users to discover their own use cases.

Will one or more horizontal players achieve massive scale by offering a foundational text-to-image platform on top of which an entire ecosystem of diverse applications is built? If so, will it be winner-take-all? As the technology eventually becomes commoditized, what would the long-term moats for such a business be?

Or as the sector matures and different use cases come into focus, will there be more value in building purpose-built, specialized solutions for particular applications?

One could imagine, say, a text-to-image solution built specifically for the auto industry for the design of new vehicle models. In addition to the AI model itself being fine-tuned on training data for this particular use case, such a solution might include a full SaaS product suite and a well-developed user interface built to integrate seamlessly into car designers’ overall workflows.

Another key strategic issue concerns the core AI models themselves. Can these models serve as a sustainable source of defensibility for companies, or will they quickly become commoditized? Recall that Stable Diffusion, one of today’s leading text-to-image models, has already been fully open-sourced, with all of its weights freely available online. How often and under what conditions will it make sense for a new startup to train its own proprietary text-to-image models internally, as opposed to leveraging what has already been built from the open-source community or from another company?

We cannot yet know the answer to any of these questions with certainty. The only thing we can be sure of is that this field will develop in surprising, unexpected ways in the months and years ahead. Part of the magic of new technology is that it unlocks previously unimaginable possibilities. When dial-up internet first became available, who predicted YouTube? When the first smartphones debuted, who saw Uber coming?

It is entrepreneurs who will ultimately answer these questions by envisioning and building the future themselves.

— Rob writes a regular column for Forbes about artificial intelligence.

AI News This Week

-

Economist’s view of artificial intelligence: Beyond cheaper prediction power (Forbes)

AI drives strategic business decisions by providing cheaper, better, and faster predictions, and AI is only getting better in this area. We are starting to outsource a large share of human decision-making to machines, which may have unforeseen implications. Prediction and judgement are two aspects of decision-making, which University of Toronto professors Ajay Agrawal, Joshua Gans, and Avi Goldfarb claim is the basis of economic and political power. In AI systems, these two tasks are being separated; humans are still in charge of judgement, but AI is now in charge of prediction. With this trend, AI opens the door to “a flourishing of new decisions.” The implications are further explored in their upcoming book Power and Prediction: The Disruptive Economics of Artificial Intelligence.

-

Five ways deep learning has transformed image analysis (Nature)

Using AI, we can extract information from images faster. Among the five areas where deep learning has been used are large-scale connectomics, virtual histology, cell finding, mapping protein localization, and tracking animal behaviour. In connectomics, deep learning has made it possible to generate increasingly complex connectomes, or maps of neural pathways in the brain. In virtual histology, AI eliminates the need for toxic dyes, expensive staining equipment, and labour-intensive processes. Cell finding with deep learning enables faster visualization and imaging-based change research. For the same reasons, researchers apply deep learning to automate intracellular localization instead of manually annotating images to outline protein expression in cells and tissues. And in animal behaviour, deep learning has helped scientists better understand how animals interact with their environment and each other.

-

These autonomous, wireless robots could dance on a human hair (TechCrunch)

Researchers at Cornell University have developed robots the size of an ant that can move independently and are powered solely by light. The robots use AI to direct their movable legs around a light-absorbing photovoltaic cell. Applications could range from environmental cleanup and monitoring to targeted delivery of drugs, monitoring or stimulation of cells, and microscopic surgery. The paper, “Microscopic Robots with Onboard Digital Control,” was recently published in Science Robotics alongside a video.

-

Study provides insights on GitHub Copilot’s impact on developer productivity (VentureBeat)

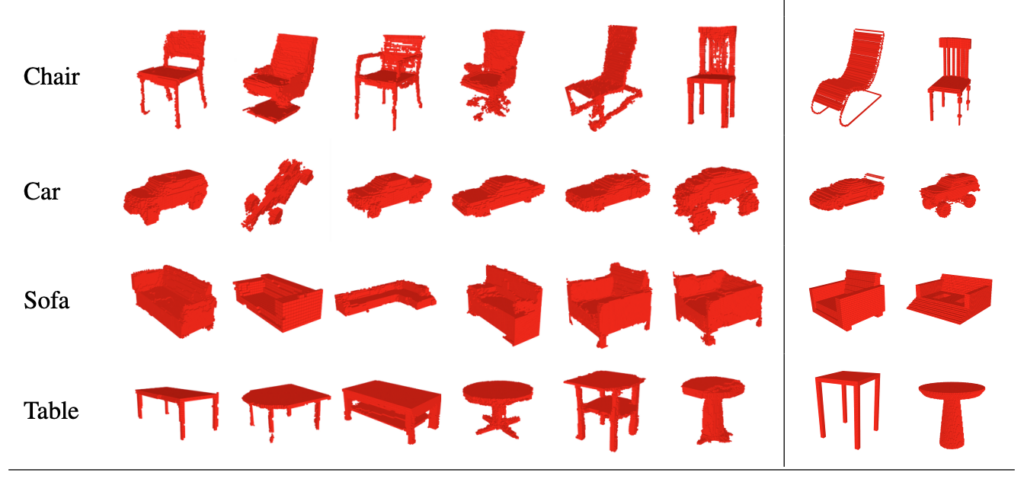

Image: 64x64x64 renderings of computer-generated objects for data types, chair, car, sofa, table. To the right, the most similar object from the original source data is shown. Image from Learning a Probabilistic Latent Space of Object Shapes via 3D Generative-Adversarial Modeling, MIT CSAIL, 2017.

Language models are not yet suited to autonomous end-to-end deployment, but can increase productivity for programmers, writers, and artists. In brief, the work needs to be checked, but more work is done overall. GitHub conducted a survey to assess the impact of its language-to-code tool on developers’ workflows. The survey found 60–75% of developers feel more fulfilled and less frustrated when coding and 90% of respondents reported automation tools helped them complete tasks faster. However, developers continued to flag that new attack vectors may be created if suggestions are not reviewed. Despite their increasing usefulness as collaborators, these tools are not ready to act on their own.

-

Just for fun: James Earl Jones signs over rights to voice of Darth Vader to be replaced by AI (Mashable)

“The most iconic voice in cinema is now an AI.” At 91 years old, legendary actor James Earl Jones has signed over the rights to Darth Vader’s voice to a Ukrainian start-up. The company has been working with Lucasfilm to generate many of the voices heard throughout the Star Wars universe, such as that of Luke Skywalker in Disney’s The Book of Boba Fett. Using archival recordings and an AI algorithm, new dialogue can be created in the voices of past performers.

Radical Reads is edited by Leah Morris (Senior Director, Velocity Program, Radical Ventures).