Last month, Radical Ventures announced our lead investment in Outset’s $30M Series B funding round. Outset is pioneering AI-moderated research and building the first AI-native Customer Experience Management platform, giving organizations deeper context on their customers at every touchpoint.

For decades, organizations have faced an impossible tradeoff: the depth of qualitative research or the speed and scale of quantitative surveys. Traditional market research and customer experience tools rely on human moderators, which limits sample sizes, inflates costs, and extends timelines by weeks or months. Meanwhile, companies have struggled to truly understand their customers across every touchpoint of the journey.

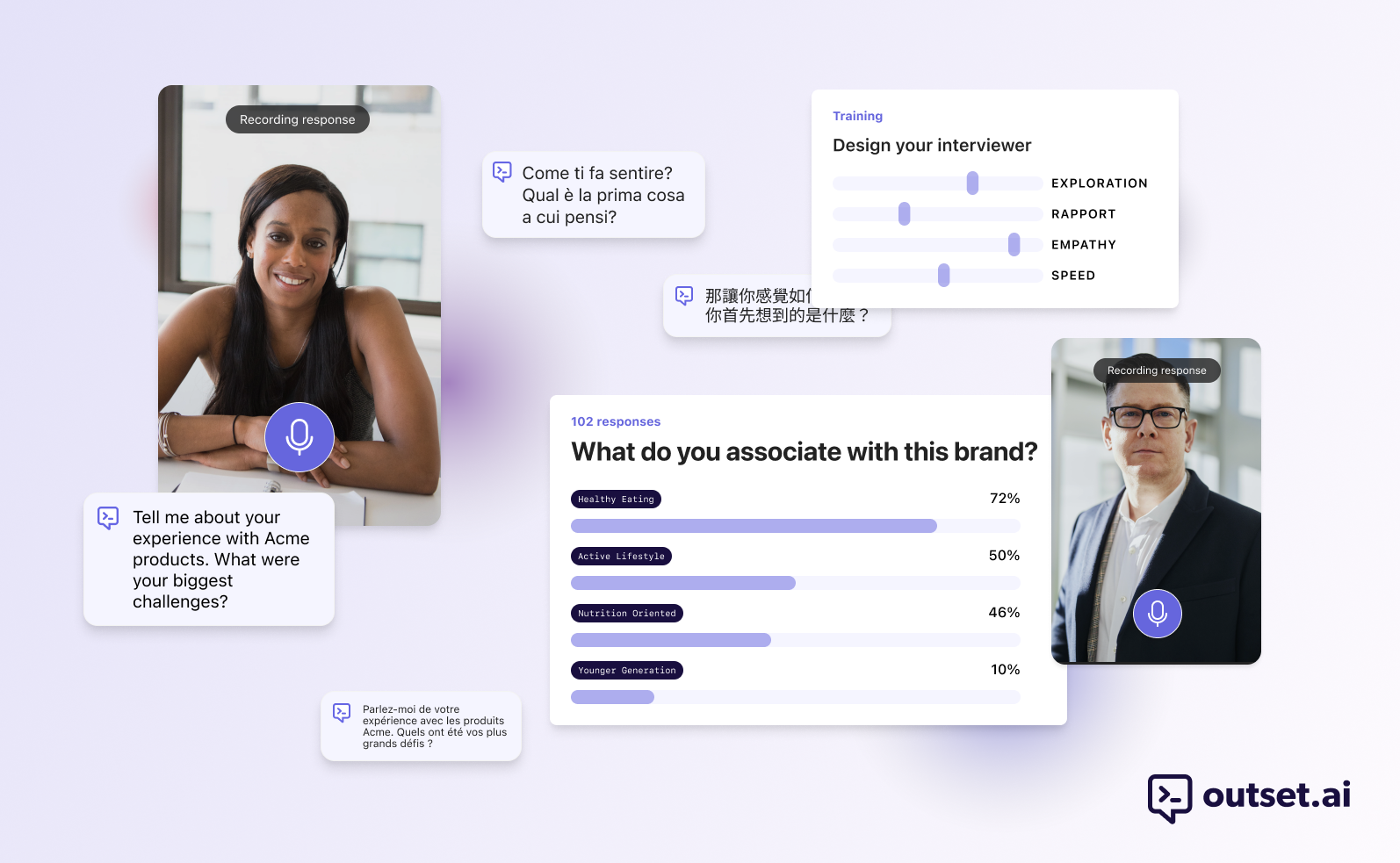

Outset is eliminating this tradeoff by using AI agent moderators to conduct and analyze qualitative interviews at scale. The platform lets researchers input discussion guides, recruit participants through panel integrations or client lists, and then conduct live video or voice interviews in real time in more than 40 languages. Sessions deliver the nuanced depth of human conversation with the speed and reach of automated surveys, reducing research costs and time by over 80%. Jevons Paradox struck us as remarkably salient here: because these platforms make research so much easier, customers were conducting several times as many surveys as before.

The company has experienced remarkable traction, growing revenue more than 8x this year. Customers including Microsoft, WeightWatchers, Nestlé, HubSpot, Uber, Coinbase, Away, Indeed, Glassdoor, FanDuel, and several others have adopted Outset to test product concepts and user experiences, achieving research outcomes that are faster, more affordable, and larger in scale than traditional methods.

Co-founder and CEO Aaron Cannon brings strong product instincts and commercial acumen from years in strategy consulting and product leadership roles at companies including Tesla, Triplebyte, and Untapped, where he experienced firsthand the limitations of traditional research methods. Co-founder and CTO Michael Hess, a longtime collaborator of Aaron’s, has demonstrated exceptional product velocity, building an enterprise-grade platform that continues to push the boundaries of what’s possible.

As AI continues to reshape enterprise workflows, the most valuable applications will be those that reimagine entire categories rather than simply automating existing processes. Outset exemplifies this opportunity, using AI to transform how organizations listen to and understand what matters most: the people they serve.

We are excited to support Aaron, Michael, and the rest of the Outset team as they build the future of customer understanding.

Read more in Outset’s announcement of the round.

Radical Talks: Jonathan Frankle on the Future of Enterprise AI

Databricks Chief AI Scientist Jonathan Frankle joins Radical Partner Vin Sachidananda for a special conversation from Radical Ventures’ AI Masterclass Series. Together, they explore what it takes to move AI from academic insight to real-world, enterprise-scale deployment.

Drawing on Jonathan’s experience co-founding MosaicML and now leading research at Databricks, the discussion covers why early efficiency gains now feel “quaint,” how open source serves as scientific proof, and why evaluation remains the missing infrastructure for enterprise AI adoption. It is a practical conversation for founders and technical leaders building AI systems that need to perform in production.

Listen to the podcast on Spotify, Apple Podcasts, or YouTube.

AI News This Week

-

What An Investor Does at the World’s Biggest AI Research Conference (Logic)

Ever wondered what a VC does at NeurIPS, the world’s largest AI research conference? Radical Ventures partner Daniel Mulet spent the week in San Diego helping portfolio companies recruit top talent, scouting emerging startups, and diving deep into technical research on world models and AI for scientific discovery. Radical hosted several marquee events, including a panel discussion featuring AI pioneer Geoffrey Hinton and Google DeepMind chief scientist Jeff Dean that was three times oversubscribed (listen to the full conversation on the Radical Talks podcast), and a party aboard the USS Midway aircraft carrier with Radical portfolio company Cohere.

-

An AI Revolution in Drugmaking is Under Way (The Economist)

AI is quietly revolutionizing drug discovery, compressing preclinical timelines from 3-5 years to 12-18 months and improving clinical trial success rates from 40-65% to 80-90%. The technology enables in silico screening of tens of billions of molecules, predicts protein interactions, and optimizes trial design. Digital twins, virtual patient models created by companies like Radical portfolio company Unlearn.AI, have shown the ability to reduce control arm sizes, making studies faster and cheaper. Radical Ventures is bullish on AI’s impact across the drug discovery value chain, with investments spanning the entire pipeline: Genesis Molecular AI discovers novel small molecules through AI; Intrepid Labs employs AI-driven robotics to accelerate drug formulation; Latent Labs builds foundation models for de novo protein design; and Nabla Bio develops generative models to design antibodies against challenging disease targets.

-

In 2026, AI Will Move From Hype to Pragmatism (TechCrunch)

Experts predict the AI industry is shifting from brute-force scaling to practical deployment. As scaling laws plateau, enterprise adoption will favour smaller, fine-tuned models that beat larger systems on cost and speed, while agentic AI moves from demos to practice through standards like Anthropic’s Model Context Protocol, which connects agents to real tools. Physical AI applications like robotics and wearables will expand with advances in edge computing. World models, AI systems that learn how things move and interact in 3D space, show breakthrough potential for next year: Marble, built by Radical portfolio company World Labs, lets users create entire 3D worlds from image or text prompts.

-

LLMs Contain a Lot of Parameters. But What’s a Parameter? (MIT Technology Review)

A quick refresher on what a parameter is in the world of AI. Parameters are the numerical dials that control how AI models behave. When a large language model like GPT-3 is trained, each of its 175 billion parameters is updated iteratively through quadrillions of calculations, which is why training consumes so much energy. There are three types: embeddings convert words into numerical representations; weights determine how different parts of the model connect, for example allowing it to understand how words relate in a sentence; and biases adjust thresholds to ensure no information is missed. Designers can also tune hyperparameters like “temperature,” a creativity dial: lower settings push a model toward predictable, factual outputs and higher settings toward surprising ones. Interestingly, researchers have found that in some cases smaller models can outperform larger ones by employing training techniques like distillation.
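To make the three parameter types concrete, here is a minimal Python sketch of a hypothetical toy next-word model. The vocabulary, sizes, and function names are illustrative assumptions, not how GPT-3 is actually built. Every number in its embedding, weight, and bias tables counts as a parameter that training would adjust; temperature is applied afterwards, when scores are turned into probabilities, and is not learned.

```python
import math
import random

random.seed(0)

VOCAB = ["the", "cat", "sat", "mat"]  # hypothetical 4-word vocabulary
DIM = 3                               # hypothetical embedding size

# Embeddings: one vector of DIM numbers per vocabulary word.
embeddings = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in VOCAB]
# Weights: map an embedding to one score (logit) per vocabulary word.
weights = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in VOCAB]
# Biases: one offset per output score.
biases = [0.0 for _ in VOCAB]

def count_parameters():
    """Every number in the three tables is a parameter that training adjusts."""
    return (sum(len(row) for row in embeddings)
            + sum(len(row) for row in weights)
            + len(biases))

def next_token_logits(word):
    """Score each candidate next word given the current word."""
    x = embeddings[VOCAB.index(word)]
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) + b
            for row, b in zip(weights, biases)]

def softmax_with_temperature(logits, temperature=1.0):
    """Turn logits into probabilities; temperature is a setting, not a learned parameter."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

print("parameters:", count_parameters())  # 4*3 + 4*3 + 4 = 28
logits = next_token_logits("cat")
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Real models stack many such layers, which is how parameter counts reach the billions. Lowering the temperature concentrates probability on the top-scoring word, while raising it spreads probability across the alternatives.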

-

Research: End-to-End Test-Time Training for Long Context (Astera, NVIDIA, Stanford, UC Berkeley, UC San Diego)

Researchers demonstrate that AI models can become more efficient by adopting human-like learning strategies. Traditional language models struggle with long contexts because they are designed to recall every detail, which makes them computationally expensive. The work shows that AI systems can maintain performance while running 2.7× faster by compressing information as they process it. This suggests the future of AI lies not in building bigger memory banks, but in teaching models to learn continuously from what they are exposed to, mirroring how humans compress vast experiences into useful knowledge. For more on continual learning, listen to our Radical Talks podcast episode on the topic.
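The idea of compressing context while reading can be illustrated with a generic test-time-training-style memory. The Python sketch below is a toy under stated assumptions, not the architecture from the paper: a fixed-size matrix W takes one gradient step per incoming (key, value) pair, so its size stays at D x D however long the input grows, whereas full attention keeps every pair in a cache. The sizes, learning rate, hidden rule, and helper names are all hypothetical.

```python
import math
import random

random.seed(1)
D = 4      # hypothetical feature size of each token
LR = 0.1   # hypothetical inner-loop learning rate

def matvec(W, x):
    """Multiply a D x D matrix by a length-D vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def ttt_step(W, k, v, lr=LR):
    """One gradient step on the loss 0.5 * ||W k - v||^2; returns the updated memory."""
    err = [p - t for p, t in zip(matvec(W, k), v)]  # prediction error W k - v
    # The gradient of the loss with respect to W is the outer product err * k^T.
    return [[W[i][j] - lr * err[i] * k[j] for j in range(D)] for i in range(D)]

def process(stream):
    """Read (key, value) pairs one at a time, compressing them into W as we go."""
    W = [[0.0] * D for _ in range(D)]  # fixed size: D*D numbers regardless of length
    kv_cache = []                       # what full attention would keep instead
    for k, v in stream:
        W = ttt_step(W, k, v)           # learn from the token just seen
        kv_cache.append((k, v))         # kept only to compare memory footprints
    return W, kv_cache

# Hypothetical stream: values follow a hidden linear rule v = A k that W can learn.
A = [[random.uniform(-1, 1) for _ in range(D)] for _ in range(D)]

def make_pair():
    k = [random.uniform(-1, 1) for _ in range(D)]
    return k, matvec(A, k)

def recall_error(W, k, v):
    """Euclidean distance between the memory's prediction W k and the true value v."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(matvec(W, k), v)))

W, kv_cache = process(make_pair() for _ in range(500))

k_new, v_new = make_pair()
empty = [[0.0] * D for _ in range(D)]
print("memory numbers:", D * D, "vs cached pairs:", len(kv_cache))
print("error with empty memory:", round(recall_error(empty, k_new, v_new), 3))
print("error after test-time training:", round(recall_error(W, k_new, v_new), 3))
```

The design choice being illustrated is the trade: the cache grows with every token, while the learned memory stays the same size and only keeps what it has managed to fit.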

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)