Editor’s note:

This week Cohere announced its US $125 million Series B fundraise led by Tiger Global, with participation from Radical Ventures (founding investors in the company) and returning investors Index Ventures and Section 32 (for more on the raise, you can read the Globe and Mail’s coverage).

Before the company was created, Radical’s managing partner, Jordan Jacobs, began conversations with the founders of Cohere (Aidan, Nick and Ivan) to explore how transformer technology could be developed into an NLP platform, and encouraged them to found what became Cohere. Radical invested in the company upon its incorporation just over two years ago and has continued to support Cohere’s growth by working very closely with the founders and growing leadership team ever since.

Cohere recently opened general access to its API and signed an agreement under which Google provides Cohere with a world-leading, purpose-built supercomputer. Now every developer and company has easy, inexpensive access to the kind of language technology that previously only the Googles and Microsofts of the world could afford.

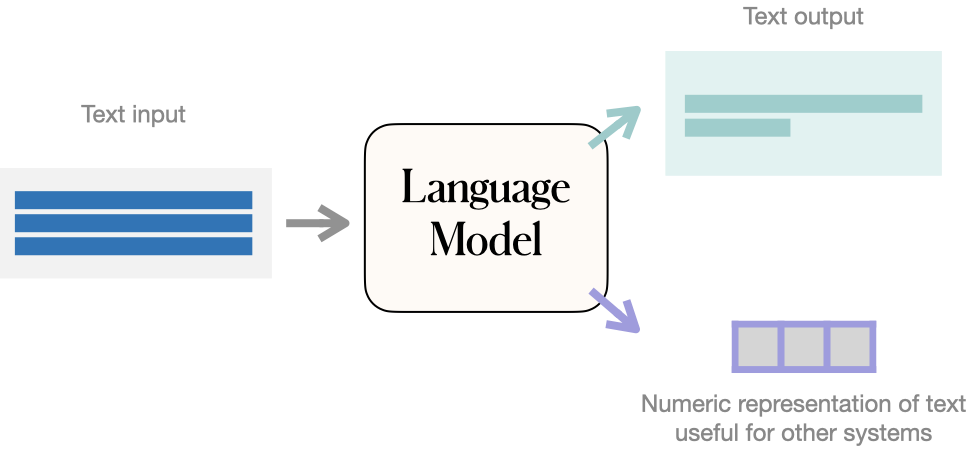

The Cohere Platform uses transformer-based language technology to enable any developer or business to build NLP products that can read and write. Aidan Gomez, the co-founder and CEO of Cohere, is a former member of the Google Brain team that developed transformers. Transformers are the enabling technology for recent breakthroughs where computers produce human-like text.

In his monthly Forbes column, Radical Ventures Partner Rob Toews discusses why language is the next great frontier in AI and the critical role Transformer-based NLP models stand to play in helping machines understand language. We are sharing an excerpt from his article below.

Language is the cornerstone of human intelligence. The emergence of language was the most important intellectual development in our species’ history. Building machines that can understand language has thus been a central goal of the field of artificial intelligence dating back to its earliest days. It has proven maddeningly elusive.

The technology is now at a critical inflection point, poised to make the leap from academic research to widespread real-world adoption. In the process, broad swaths of the business world and our daily lives will be transformed. Given language’s ubiquity, few areas of technology will have a more far-reaching impact on society in the years ahead.

The most powerful way to illustrate the capabilities of today’s cutting-edge language AI is to start with a few concrete examples.

Today’s AI can correctly answer complex medical queries—and explain the underlying biological mechanisms at play. It can craft nuanced memos about how to run effective board meetings. It can write articles analyzing its own capabilities and limitations, while convincingly pretending to be a human observer. It can produce original, sometimes beautiful, poetry and literature. (It is worth taking a few moments to inspect these examples yourself.)

What is behind these astonishing new AI abilities, which just five years ago would have been inconceivable? In short: the invention of the transformer, a new neural network architecture, has unleashed vast new possibilities in AI.

Transformers’ great innovation is to make language processing parallelized, meaning that all the tokens in a given body of text are analyzed at the same time rather than in sequence. Before transformers, the state of the art in NLP—for instance, LSTMs and the widely-used Seq2Seq architecture—was based on recurrent neural networks. By definition, recurrent neural networks process data sequentially—that is, one word at a time, in the order that the words appear. A flurry of innovation followed in the wake of the original transformer paper as the world’s leading AI researchers built upon this foundational breakthrough.
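To make the contrast concrete, here is a minimal sketch in plain Python. It is a toy illustration under simplifying assumptions, not how any production model is implemented: each token is a single number, the function names (`rnn_states`, `attend`, `attention_outputs`) and the weights are made up for this example, and the attention step omits the learned projections and multiple heads of a real transformer. The point is only the dependency structure: the recurrent loop cannot compute step t until step t-1 is done, while each attention output reads only the raw inputs, so the positions can be computed in any order (and therefore at the same time).

```python
import math

def rnn_states(inputs, w_in=0.5, w_rec=0.9):
    """Toy scalar RNN: each hidden state depends on the previous one,
    so the loop must run in sequence order."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_in * x + w_rec * h)  # needs h from the prior step
        states.append(h)
    return states

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(i, inputs):
    """Toy scalar self-attention output for position i.
    It reads only the raw inputs, never another position's output."""
    weights = softmax([inputs[i] * x for x in inputs])
    return sum(w * x for w, x in zip(weights, inputs))

def attention_outputs(inputs, order=None):
    """Compute every position's output, visiting positions in any order."""
    order = range(len(inputs)) if order is None else order
    out = [None] * len(inputs)
    for i in order:
        out[i] = attend(i, inputs)
    return out

tokens = [0.2, -1.0, 0.7, 1.5]  # hypothetical one-number "embeddings"
forward = attention_outputs(tokens)
backward = attention_outputs(tokens, order=reversed(range(len(tokens))))
print(forward == backward)  # prints True: order of computation is irrelevant
print(rnn_states(tokens))   # each entry required the one before it
```

Because no attention output waits on another, hardware such as GPUs can compute all positions in one batch, which is the parallelism the paragraph above describes.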

Language is at the heart of human intelligence. It therefore is and must be at the heart of our efforts to build artificial intelligence. No sophisticated AI can exist without mastery of language.

AI News This Week


DeepMind’s AI can control superheated plasma inside a fusion reactor (MIT Technology Review)

DeepMind has developed a deep reinforcement learning algorithm capable of controlling the superheated plasma inside a nuclear fusion reactor, a critical task in nuclear fusion. Hotter than the sun’s centre, the plasma needs to be held inside a reactor long enough to extract energy. Researchers use a variety of tricks to do this, including lasers and magnets, and the magnetic field requires constant monitoring and manipulation. In the experimental reactor, the AI could control the plasma for only two seconds, which is as long as the reactor could run before getting too hot. The breakthrough is another application of AI to hard science problems. The findings were published in Nature and could pave the way for more stable and efficient reactions.


This super-realistic virtual world is a driving school for AI (MIT Technology Review)

Last week, Radical portfolio company Waabi announced its scalable, highest-fidelity, closed-loop simulator, Waabi World, a key to unlocking the potential of self-driving technology. Waabi World is an immersive and reactive environment powered by AI. The system can design tests, assess skills, and teach the self-driving “brain” to learn the same mix of intuition and skills a human uses to drive while eliminating human vulnerabilities such as distraction and fatigue. Without simulation, it would take thousands of self-driving vehicles navigating millions of miles for thousands of years to experience everything necessary to learn to drive safely in every possible circumstance, and doing so would be risky. Waabi World represents the next step toward rapidly and safely commercializing self-driving vehicles.


Economists are revising their views on robots and jobs (The Economist)

Studies find that automation is associated with more jobs, fewer working hours, and higher productivity. In a recent paper, Philippe Aghion, Céline Antonin, Simon Bunel and Xavier Jaravel, economists at a range of French and British institutions, put forward a “new view” of robots, saying that “the direct effect of automation may be to increase employment at the firm level, not to reduce it.” A growing body of research supports the paper and provides evidence that the narrative connecting automation and joblessness is unfounded.


Towards better data discovery and collection with flow-based programming (Arxiv)

Despite enormous successes for machine learning, such as voice assistants and self-driving cars, businesses still face challenges deploying machine learning in production. Researchers at the University of Cambridge have proposed a new infrastructure designed for data-oriented activities. The infrastructure, called flow-based programming (FBP), simplifies data discovery and collection in software systems. Networked software is often built using a service-oriented architecture, but networked machine learning applications may be easier to manage using a different programming style.


The elusive hunt for a robot that can pick a ripe strawberry (Wired)

Berry-picking is proving to be a difficult challenge for robots. A robot must identify a berry that is ripe enough to pick, grasp it firmly without damaging the fruit, and pull hard enough to separate it from the plant without harming the plant. Proponents of agricultural computer vision systems argue that predicting when fruit is ripe will lead to improved sales, reduced waste, and yield gains as the global population expands to 10 billion. Robots can also help grow and market expensive specialty fruits. Robots working in tandem with automated vision systems can monitor crops 24 hours a day to predict the ideal time to pick a ripe red strawberry.

Radical Reads is edited by Ebin Tomy.