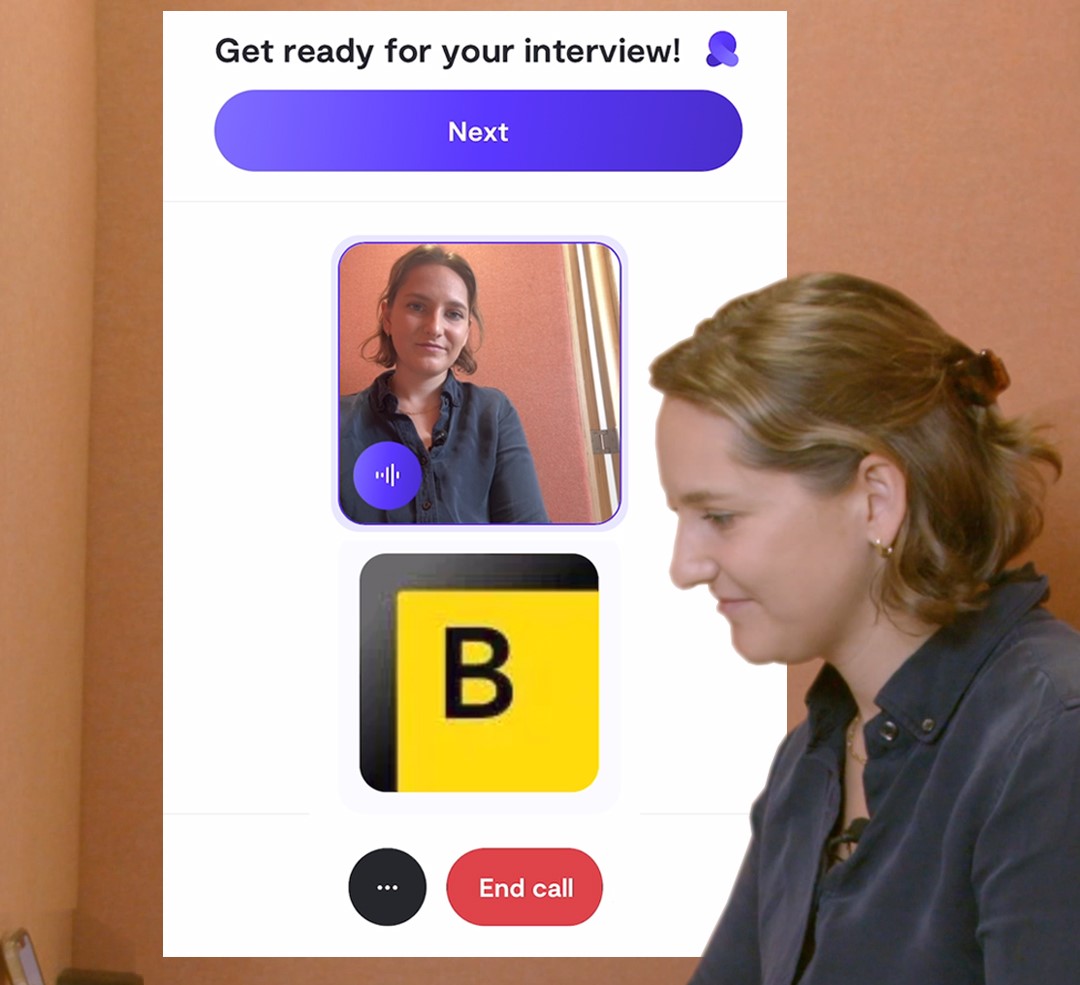

Recently, Ribbon AI made headlines on the front page of The New York Times in a piece that explored various perspectives on AI-powered job interviews. Ribbon AI has developed an AI interviewer that screens and evaluates candidates, helping enterprises identify top talent. Arsham Ghahramani, Co-Founder and CEO of Ribbon, explores how AI interviews can make the recruitment process more human.

The current hiring landscape has created a paradox: in our quest to find the right people, we have built systems that systematically exclude great candidates.

Consider the reality most job seekers face today. Ninety-five percent of applications are ghosted without any constructive feedback. Over a thousand people apply for every decent role. Candidates wait weeks for responses that never come, while recruiters struggle to engage meaningfully with the overwhelming volume of applications they receive.

This is not a human problem; it is a scale problem. And scale problems require technological solutions that amplify human capabilities rather than replace them.

Everyone’s debating: “Will AI replace human recruiters?” We think the right question to ask is: “How do we use AI to make human conversations more meaningful?”

AI as a Bridge to Human Connection

When someone needs to pass a basic qualification screen to reach a human conversation, AI becomes a bridge, not a barrier. For skilled professionals, waiting weeks for a five-minute phone screen is itself a barrier to accessing opportunities.

This approach enables recruiters to focus their time where human connection truly matters: conversations about company culture, growth opportunities, and organizational vision. They can explore team dynamics and strategic alignment through discussions that demand human insight, empathy, and the nuanced understanding that only comes from lived experience.

Unlocking Human Insights at Scale

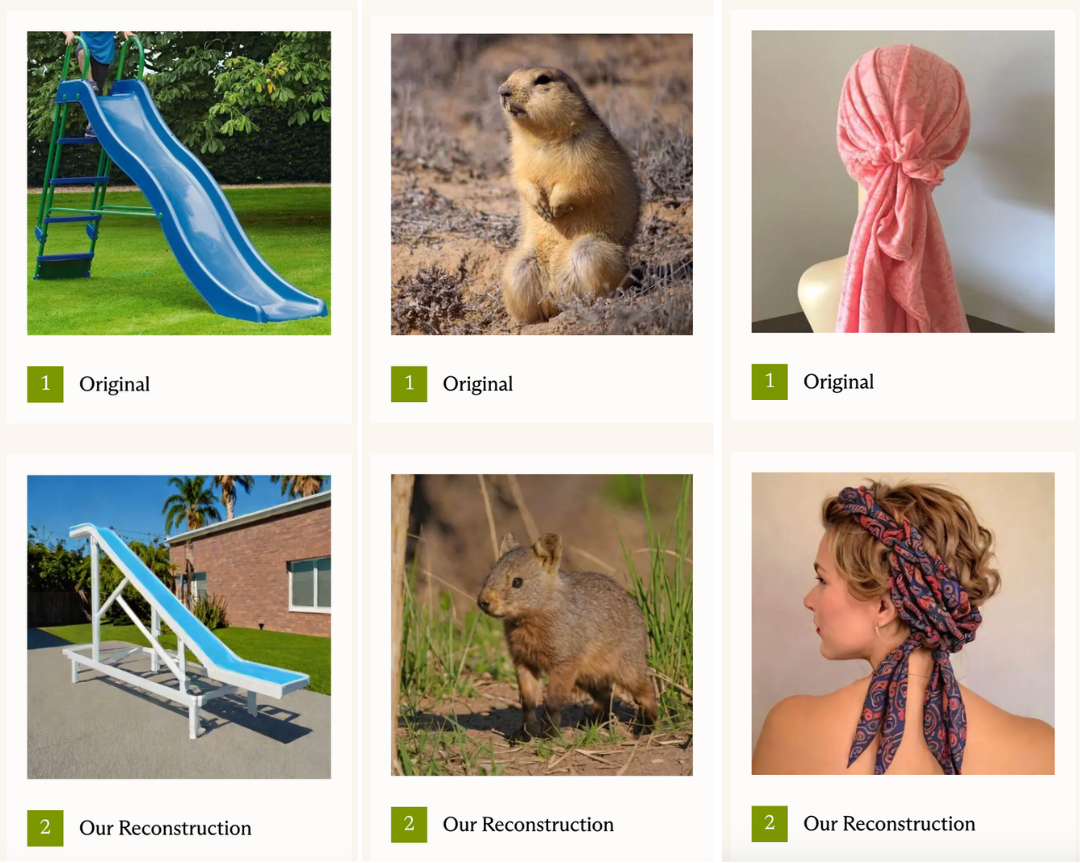

One of the most powerful capabilities of AI interviews is pattern recognition across thousands of conversations. When human recruiters conduct 20–30 interviews per week, they develop intuition about candidate quality, enthusiasm, and fit. But imagine if that same recruiter could glean insights from hundreds of conversations, analyzing not just what candidates say, but how they say it.

AI interviews can detect enthusiasm in vocal tonality, identify genuine passion in word choice, and distinguish authentic responses from rehearsed scripts. These insights help hiring teams understand candidate motivation and cultural fit at a scale impossible through traditional methods.

Making Access More Equitable and In-Depth

Perhaps the most human aspect of AI interviews is their accessibility. Traditional hiring schedules systematically exclude millions of talented people who cannot step away during business hours. AI interviews operate on candidates’ timelines.

When our AI interviewer engages with candidates, it goes beyond asking generic questions from a predetermined script. Instead, it reads resumes, LinkedIn profiles, and portfolio projects to ask thoughtful, personalized questions.

This level of preparation and personalization often exceeds what candidates experience in traditional phone screens, where overworked recruiters may have had only moments to glance at a resume.

The Future of Human-Centred Hiring

The most successful AI isn’t the one that fools humans — it’s the one that honours them. By handling routine qualification tasks efficiently and transparently, AI interviews create space for more meaningful human interactions later in the hiring process.

This technology does not represent the death of human connection in hiring. Instead, it offers a path toward hiring systems that are more accessible, more equitable, and ultimately more human than what we have today.

Visit Ribbon to learn more about how they are transforming hiring with Voice AI technology.

AI News This Week


AI is Changing the World Faster than Most Realize (Axios)

AI leaders are highlighting how rapidly the technology is advancing. It is already transforming education, with an estimated 90% of college students using ChatGPT for learning. Economists emphasize AI’s tremendous potential for productivity gains, given the trajectory of rapid advances toward human-like intelligence. UVA’s Anton Korinek noted the possibility of “enormous productivity gains” and “much more broadly shared prosperity.” Even at current capabilities, AI is advanced enough to transform how we live, learn, and work.


EU Pushes Ahead with AI Code of Practice (FT)

The EU has unveiled its code of practice for general-purpose AI models. Under the code, companies must implement technical measures to prevent models from generating copyrighted content and commit to testing for risks. Providers of the most advanced models are required to monitor their systems post-release and provide external evaluators with access to their capabilities. The decision comes amid intense pressure from industry groups that have warned that unclear regulations threaten Europe’s competitiveness in AI.


China is Building an Entire Empire on Data (The Economist)

China is transforming data into a strategic national asset. President Xi Jinping has referred to data as a “foundational resource,” and the government is systematically organizing the country’s vast data streams, drawn from 1.1 billion citizens and from surveillance networks, into an integrated “national data ocean.” Key initiatives include new regulations that compel government data sharing, data valuations for state-owned enterprises, and a digital ID system that creates centralized oversight of citizens’ online activity.


Can AI Solve the Content-Moderation Problem? (WSJ)

Researchers are developing AI-powered tools that could give social media users personalized control over content filtering. A study presented at the ACM Web Conference demonstrated a YouTube filter using large language models like GPT-4 and Claude 3.5 that achieved 80% accuracy in identifying harmful content. Concerns remain about the effectiveness of off-the-shelf models for nuanced moderation tasks and the potential for users to customize feeds to see more harmful content.


Research: Chain-of-Thought Is Not Explainability (Oxford/WhiteBox/Google DeepMind/AI2/Mila)

Researchers challenge the assumption that chain-of-thought (CoT) reasoning, in which models verbalize step-by-step rationales before answering, provides genuine interpretability in large language models. Analyzing 1,000 CoT-focused papers, they found that 25% treat CoT as an interpretability method without validating it. The study demonstrates that CoT explanations are often unfaithful to the model’s actual computations: models silently correct errors, fail to mention prompt biases that sway their answers, and employ shortcuts not reflected in their stated reasoning. The authors recommend treating CoT as a form of communication rather than a measure of true interpretability, and developing causal methods to validate it.

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)