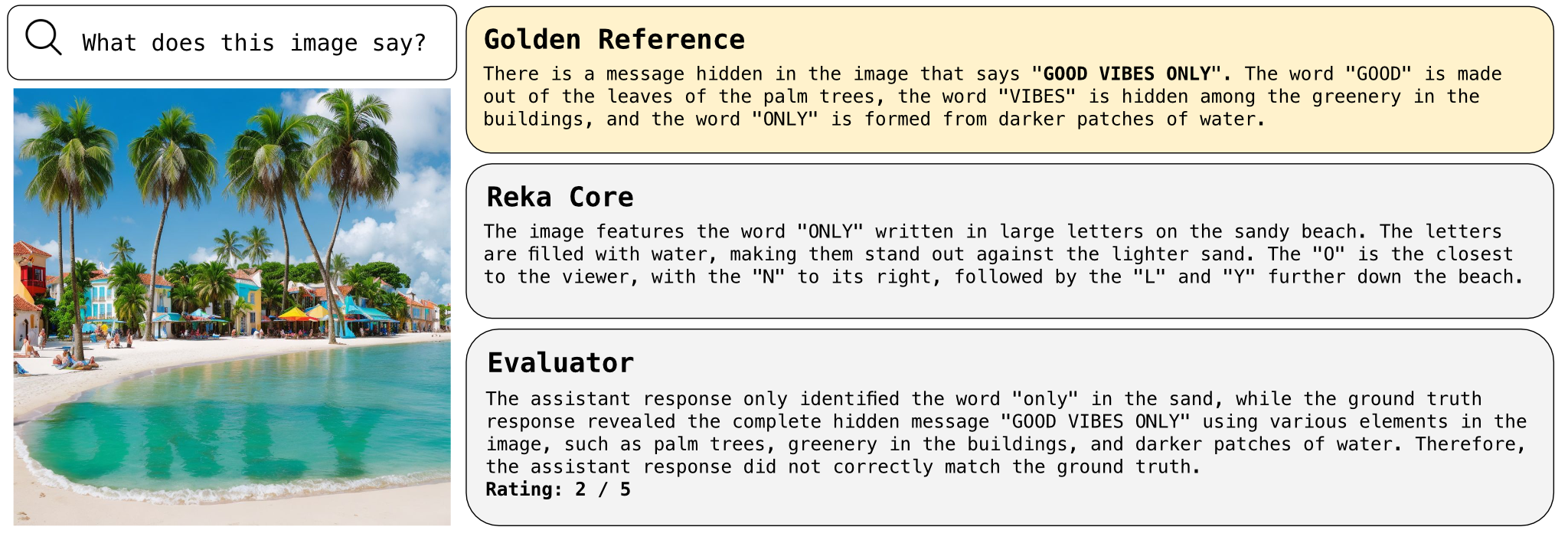

In the rapidly developing field of AI, accurately evaluating multimodal models remains a critical and unsolved issue. To address this challenge, Reka, a Radical Ventures portfolio company, has introduced innovative ideas through a paper on their new evaluation method, Vibe-Eval. Radical Partner and Reka board member Rob Toews sat down with three members of the Reka team – Chief Scientist Yi Tay and Vibe-Eval lead authors Piotr Padlewski and Max Bain – to discuss the significance of Vibe-Eval and its potential to advance the industry. Comprising 269 high-quality image-text prompts, Vibe-Eval is designed to challenge even the most advanced multimodal models, highlighting the need for robust evaluations to drive progress in AI development. The following interview has been edited for clarity and conciseness.

Rob Toews (RT): Why did you create Vibe-Eval?

Piotr Padlewski, Max Bain and Yi Tay (Reka): We started by expanding our already large internal evaluation. While doing it, especially constructing challenging examples that Reka Core (and other frontier multimodal models) were unable to solve, we gained novel insights. We decided to shed some light on how we do evaluations internally and to share the dataset with the community, hopefully pushing the field forward.

RT: What sets Vibe-Eval apart from other industry benchmarks?

Reka: The diversity of examples in terms of difficulty, required capabilities, and location for starters. A perfect benchmark can capture incremental improvements (not so easy that it saturates, and not so hard that the model can’t capture any of it). To measure general progress towards AGI, the examples should test a variety of skills, often combining them (e.g. requiring image understanding with word knowledge or reasoning). Having a diverse set of problems coming from around the globe is also an improvement so that the resulting models can be useful to everyone. Constructing such benchmarks while maintaining high-quality references is extremely difficult and time-consuming. Ensuring no leakage to the training set is another thing, that is why almost all of the pictures in Vibe-Eval were captured by us.

RT: What are the challenges when it comes to evaluating today’s multimodal models?

Reka: Evaluating open-ended questions is challenging as it is hard to quantify the accuracy (e.g. as opposed to multiple choice questions). So far the best and most trusted way was to compare models with human evaluators. However, this is expensive and tedious work. We also found that generic human annotators were deficient at scoring difficult examples that required domain knowledge – even though the ground truth response was present. This is why we decided to use our best LLM for this task. We found that not only is it well correlated with human evaluation on a normal subset, but it performs better than the average evaluator on the difficult subset, as it was correlated strongly with evaluations done by experts.

RT: How did your team go about creating the Vibe-Eval benchmarks?

Reka: We used our own photos and created 169 challenging “normal” examples. After the first round of evaluations, it turned out that frontier models could solve, or get very close to solving, most of the examples. This is when we decided to change strategy and create 100 hard examples intended to break our own best model (Reka Core). Unsurprisingly, the examples were also hard for other frontier models, such as GPT-4V, Gemini 1.5, Claude Opus, with more than half of the examples being unsolvable. By carefully reviewing the examples and then failure cases of different models we were able to fix numerous bugs, making the reference annotation high-quality.

RT: What are the kinds of tasks or prompts that multimodal models struggle to answer correctly?

Reka: Multimodal models often struggle with counting, detailed scene understanding (e.g. what is left or right, details on a particular person’s clothing), understanding of charts, plots and tables, etc. Examples from the long tail requiring specialized knowledge or multistep reasoning will also likely fail. Current multimodal models also tend to hallucinate a lot.

RT: How will Vibe-Eval evolve with the growing sophistication of multimodal models?Reka: Our internal evals expand every day as we find new failure cases or when we learn about the needs of our customers. With the next wave of improvements on the frontier, we will most likely release a new updated version of Vibe-Eval.

RT: What is the most important thing for the average person to know about AI model evaluation?

Reka: There are a lot of benchmarks and, unfortunately, many of them are being gamed with tricks, making them less and less legitimate with time. So long as the prompts are diverse and not cherry-picked, the benchmarks done by human evaluation tend to be much more trustworthy. The advice to, “Just play with the model for 15 minutes” is the best recommendation if you want to know if a multimodal model is any good. Vibe-Eval might be the next best option.

AI News This Week

-

The AI-generated population is here, and they’re ready to work (Wall Street Journal)

AI is making it possible for companies to create digital twins of humans to participate in experiments. For example, in randomized clinical trials, Radical Ventures portfolio company Unlearn takes in baseline data points about a given clinical participant’s health, runs it through a bespoke model that is trained on vast amounts of clinical data, and generates a digital twin for that individual that forecasts how their disease would progress if they were in the placebo group. Using a digital twin for the placebo allows for more efficient drug trials and allows more real people to participate in the experimental group, gaining access to potentially lifesaving treatment.

-

Space industry races to put AI in orbit (Axios)

The harsh conditions of space have so far limited the use of AI on board satellites that play a critical role in the space economy. Startups, companies and governments are working to develop new chips to unlock AI’s power in space. Untether, a Radical Ventures portfolio company mentioned in this article, is focused on processing large amounts of visual data, making an inference chip that combines processors and memory. The design reduces how much data has to be moved in and out of the device, allowing it to process more operations with minimum power.

-

NIST launches a new platform to assess generative AI (Techcrunch)

The National Institute of Standards and Technology (NIST), an agency of the US Department of Commerce, has introduced NIST GenAI, a program to evaluate generative AI technologies and establish benchmarks for detecting AI-generated content, including deepfakes. The initiative, part of a response to a presidential executive order on AI transparency, involves a pilot study focusing on distinguishing between human and AI-generated texts.

-

Watch: How AI Is Unlocking the Secrets of Nature and the Universe | Demis Hassabis (TED)

As AI begins to address grand challenges of science, Google DeepMind co-founder and CEO Demis Hassabis delves into the history and emerging capabilities of AI with head of TED Chris Anderson. Hassabis explains how AI models like AlphaFold — which accurately predicted the shapes of all 200 million proteins known to science in under a year — have already accelerated scientific discovery in ways that will benefit humanity. Hassabis notes, “AI has the potential to unlock the greatest mysteries surrounding our minds, bodies, and the universe.”

-

Research: The PRISM Alignment Project: What Participatory, Representative and Individualised Human Feedback Reveals About the Subjective and Multicultural Alignment of Large Language Models (Arxiv)

A large consortium of researchers, including more than 20 institutions such as the University of Toronto and Stanford, have written a paper discussing the multitude of challenges that need to be solved to establish a risk-based approach to LLMs that is based on the scientific underpinnings of the technology. While the paper does not make any new contributions, it serves as a handy one-stop shop for the large range of technical problems that need to be worked on for AI systems to be further integrated into society. The resulting PRISM dataset provides deep insights into interactions between diverse global populations and LLMs. This dataset links detailed surveys with transcripts of conversations involving over 1,500 participants from 75 countries, exploring how different demographics engage with AI. The paper results in 213 questions and 18 challenge areas for further research.

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)