Radical Ventures Partner Rob Toews published his latest article in Forbes outlining the current inflection point we are seeing for synthetic data. The technology enables practitioners to digitally generate the data that they need, on demand, in whatever volume they require, tailored to their precise specifications. The article covers synthetic data’s roots in the autonomous vehicle field and its growing impact on language technologies. Synthetic data is poised to upend the entire value chain and technology stack for artificial intelligence, with immense economic implications. Here is an excerpt from the article’s conclusion:

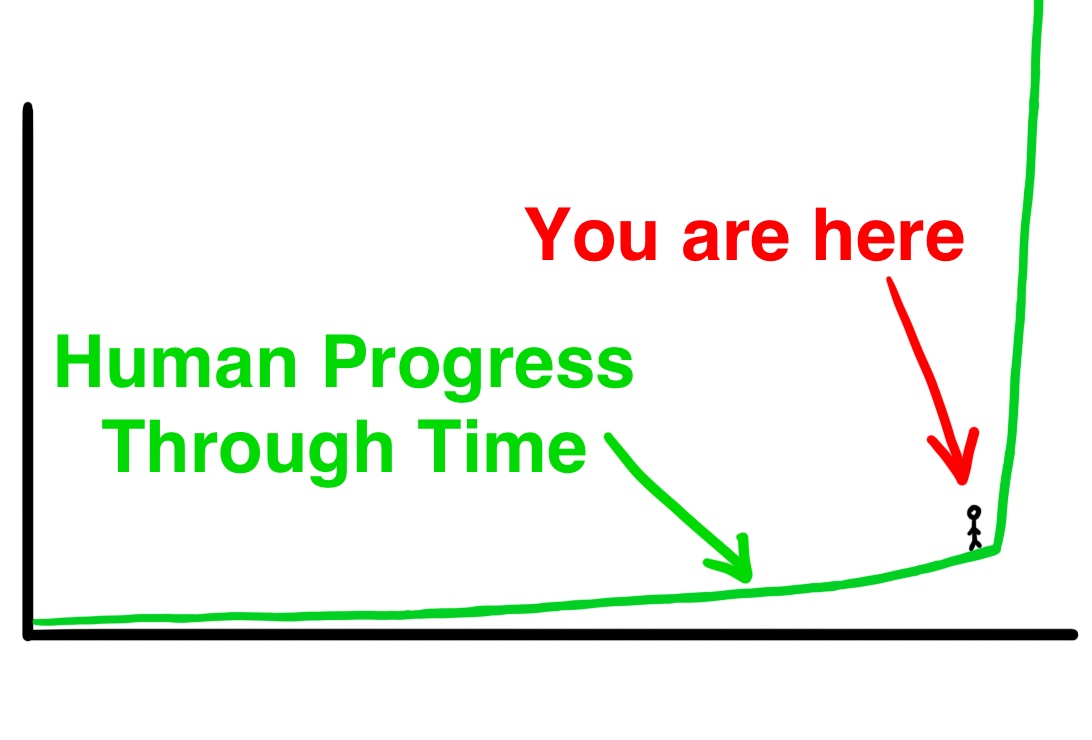

The graph below speaks volumes. Synthetic data will completely overshadow real data in AI models by 2030, according to Gartner.

As synthetic data becomes increasingly pervasive in the months and years ahead, it will have a disruptive impact across industries. It will transform the economics of data.

By making quality training data vastly more accessible and affordable, synthetic data will undercut the strength of proprietary data assets as a durable competitive advantage.

Historically, no matter the industry, the most important first question to ask in order to understand the strategic dynamics and opportunities for AI has been: who has the data? One of the main reasons that tech giants like Google, Facebook and Amazon have achieved such market dominance in recent years is their unrivaled volumes of customer data.

Synthetic data will change this. By democratizing access to data at scale, it will help level the playing field, enabling smaller upstarts to compete with more established players that they otherwise might have had no chance of challenging.

To return to the example of autonomous vehicles: Google (Waymo) has invested billions of dollars and over a decade of effort to collect many millions of miles of real-world driving data. It is unlikely that any competitor will be able to catch up to them on this front. But if production-grade self-driving systems can be built almost entirely with synthetic training data, then Google’s formidable data advantage fades in relevance, and young startups like Waabi have a legitimate opportunity to compete.

The net effect of the rise of synthetic data will be to empower a whole new generation of AI upstarts and unleash a wave of AI innovation by lowering the data barriers to building AI-first products.

Rob writes a regular column for Forbes about artificial intelligence.

AI News This Week

- AI Startup Cohere launches a nonprofit research lab (TechCrunch)

Entering the machine learning field can be extremely difficult, depending on where you are in the world. Radical Ventures portfolio company Cohere is looking to tackle this issue, while also solving some of the field’s most complex problems, by launching the non-profit Cohere For AI, led by former Google Brain researcher Sara Hooker. Her vision is to change how, where and by whom research is done. Cohere For AI represents the opportunity to make an impact in ways that don’t just advance progress on machine learning research, but also create new points of entry into the field.

- Four AI Pioneers Win Top Spanish Science Prize (Barron’s)

Four scientists considered pioneers in the field of AI were awarded Spain’s prestigious Princess of Asturias prize for scientific research. One of the recognized scientists was Radical Ventures’ friend, investor and AI luminary Geoffrey Hinton. He was awarded alongside Canadian researcher Yoshua Bengio, Meta’s Chief AI Scientist, Yann LeCun, and Demis Hassabis, co-founder and CEO of DeepMind. Geoff, Yoshua and Yann were already honoured in 2018 with the Turing Award, which is sometimes referred to as the ‘Nobel Prize’ of computing. As the jury for this latest prize put it, “Their contributions to the development of deep learning have led to major advances in techniques as diverse as speech recognition… object perception, machine translation, strategy optimisation, the analysis of protein structure, medical diagnosis and many others.”

- How a scientist taught chemistry to the AlphaFold AI (Fast Company)

In 2016 DeepMind launched its AlphaFold program to tackle what is known as the protein-folding problem, which computer scientists had been studying for 50 years. The problem asks how a protein’s amino acid sequence dictates its three-dimensional atomic structure. A typical protein can “adopt an estimated 10 to the power of 300 different forms” (more than the number of atoms in the universe). AlphaFold was a major win for AI, accruing significant scientific prestige and delivering a critical scientific advance that could affect everyone’s lives. A chemist in Connecticut studying fluorescent proteins recently demonstrated that AlphaFold2 could fold fluorescent proteins that were not in the system’s protein data bank. As the article puts it, “Only a chemist with a significant amount of fluorescent protein knowledge would be able to use the amino acid sequence to find the fluorescent proteins that have the right amino acid sequence to undergo the chemical transformations required to make them fluorescent.” The research showed that AlphaFold2 had learned some chemistry, figuring out which amino acids in fluorescent proteins undergo the chemistry that makes them glow.

- Huge “foundation models” are turbo-charging AI progress (The Economist – subscription required)

In recent years, a new successful paradigm for building AI systems has emerged: train one model on a huge amount of data and adapt it to many applications. These models are known as foundation models. The ability to base a range of different tools on a single model reduces barriers for businesses to adopt AI. Developing AI for a business use case is increasingly a predictable process, where previously it was a “speculative and artisanal” endeavour. BirchAI, a Radical Ventures portfolio company, is noted in this article for turning the output of foundation models into products. BirchAI aims to automate how conversations in health care-related call centres are documented by fine-tuning a model developed by one of its founders, Yinhan Liu. Prior to BirchAI, Yinhan was a star researcher at Facebook AI Research (FAIR), where she was lead author on some of Facebook’s most important work on foundational natural language models. Decreasing the uncertainty in developing and deploying AI will have huge economic impacts globally.

- AI may have unearthed one of the world’s oldest campfires (Science)

Molecular change, invisible to the eye but uncovered by AI, provides evidence that early human ancestors may have been using fire as early as 800,000 to 1 million years ago. Harnessing one of humanity’s newest technologies to study one of its oldest, archaeologists in Israel and Canada were able to discern subtle signs of heating by fire in stone tools from the Evron Quarry archaeological site in Israel. The results bring new evidence to bear on the long-standing question of how fire influenced the ancestors of modern Homo sapiens.

Radical Reads is edited by Ebin Tomy.