We are thrilled to announce that Vin Sachidananda has joined Radical Ventures as our newest Partner.

Vin brings remarkable depth of experience to our team, having navigated the AI landscape from multiple vantage points. His journey began in pure research: he earned a PhD at Stanford, where he worked on the foundational AI models that shape our world today. He then applied that knowledge at scale inside tech giants such as Google, Apple, and Amazon, before diving into the trenches as a founding engineer at two startups that were later acquired.

This progression, from researcher to builder to investor, most recently as a Partner at Two Sigma Ventures, has given him a distinct perspective on what it takes to build an enduring company. We sat down with him to explore the core principles that guide his thinking and his vision for the future of intelligent systems.

Radical: You’ve seen the entire AI lifecycle, from publishing foundational research to productionizing models at Google and Apple, and now investing. Where in the current AI stack — from new silicon and foundation models to the application layer — do you see the most defensible, long-term technical moats being built by early-stage startups?

Vin: I see the potential for defensible, long-term technical moats in companies working on custom silicon, marketplaces, and drug discovery. In custom silicon, for example, we are starting to see disaggregation across AI use cases (e.g., batch, real-time, or modality-specific inference), and there is an opportunity for companies to build towards $10B+ markets as these workflows commercialize.

Radical: How does your evaluation framework change between a company claiming a fundamental research breakthrough versus one with a brilliant go-to-market strategy for applying existing AI?

Vin: For companies with fundamental research breakthroughs, the first things I evaluate are (1) how much the advance improves on the existing literature, and (2) the markets and use cases that technology built on the advance could unlock. The second point is particularly important, and the companies I have invested in using this approach usually enable large new markets to exist.

My evaluation of strong GTM approaches usually centres on unique distribution advantages that a team or product may have relative to competitors. In top-down motions, examples would be a team's relationships with buyers or its channel partnerships; in bottom-up motions, you look for network effects or incentive mechanisms that convert strongly.

Radical: You have published papers at NeurIPS and ICLR, so I’m sure you know there’s often a significant gap between a published paper and a commercially viable product. What recent research area do you feel is being overlooked by the venture community but has massive commercial potential?

Vin: An area that is still early but shows promise is discrete diffusion models. I've invested in a few companies that look promising for use cases in code generation and protein modelling. Distinct advantages of these models relative to autoregressive approaches include better in-filling, higher throughput, and potentially the ability to admit alternative algorithms for alignment.

Radical: From your perspective, what is the most significant limitation of the current Transformer architecture that you believe will pave the way for the next dominant model architecture?

Vin: I'm not particularly pessimistic about the Transformer architecture for general-purpose use cases. Three years ago, I made a bet with one of my best friends that Transformers would be the most-used architecture for at least 10 years. So far, so good.

Functionally, Transformers provide a superset of the locality filters available to convolutional neural networks (CNNs) while encoding sequentiality in a way similar to recurrent neural networks (RNNs). The architecture imposes few inductive biases, which gives it generality at the expense of compute. If there is a reason to move away from Transformers, it would be efficiency, but current infrastructure continues to allow compute to scale exponentially. At least for now.

Radical: Looking beyond language, which other scientific or industrial domain, be it materials science, robotics, or biology, do you believe is most ripe for its own 'Transformer moment'?

Vin: I'm particularly excited about the use of foundation models in drug discovery, and I believe that strong tools for high-fidelity simulation will enable better therapeutics for a large number of indications. DeepMind's AlphaFold was a critical breakthrough that advanced protein structure prediction, but a number of other software advances are still needed to enable better in silico selection of target molecules.

Radical: What is one non-consensus belief you hold about the future of AI?

Vin: Currently, there is a lot of talk about an AI bubble surrounding datacenter capex buildout. Perhaps in time I will be proven wrong, but I don’t think we are in a bubble.

In the short term, we have large diversions of free cash flow and commitments from the most creditworthy businesses around: Google, Microsoft, Meta, and others. Because AI is an existential risk for many of these software businesses, hundreds of billions of dollars will flow from their balance sheets into datacenter capex for years to come.

In the long term, I believe the unit economics will come to resemble the hyperscaler expansion of the last decade more than the fibre buildout of the dot-com boom. A few reasons for this are the year-over-year gains in GPU/ASIC throughput, improvements in software and kernels for inference, strong growth in token usage across model providers, and emerging high-value use cases in higher-bandwidth modalities.

AI News This Week

-

Latent Labs’ Simon Kohl Is Rewriting the Code of Biology With Generative A.I. (The Observer)

Simon Kohl, co-developer of the Nobel Prize-winning AlphaFold2 and founder/CEO of Radical Ventures portfolio company Latent Labs, is pioneering the shift from predicting biological structures to designing them from scratch. In this Q&A, Simon describes how Latent Labs is working towards making biology programmable with its platform, LatentX, which achieves 91–100% laboratory hit rates for macrocycles. This compresses months of experiments into seconds, enabling scientists to achieve with 30 candidates what previously required testing millions.

-

The Transformative Potential of AI in Healthcare (FT)

AI is uncovering efficiencies in healthcare, from diagnostic tools that outperform doctors fourfold to speech-processing technologies that cut clinician documentation time by over 50%. With aging populations and a projected shortage of 11 million healthcare workers by 2030, adopting AI is critical for sustaining healthcare systems. Radical portfolio companies are at the forefront of this effort: PocketHealth empowers patients with ownership of their imaging data while streamlining hospital image management; Signal 1 AI addresses backlogs and staffing shortages by integrating AI-driven insights directly into hospital workflows; and Attuned Intelligence deploys AI Voice Agents that provide 24/7 call center coverage, achieving over 70% automation and immediate staffing relief for healthcare providers.

-

Promise Robotics Opens Second Factory to Help Increase Canadian Housing Supply (BetaKit)

Radical Ventures portfolio company Promise Robotics is expanding its robotic homebuilding operations with a new 60,000 sq. ft. factory in Calgary, set to begin production this summer, with the capacity to produce up to 1 million sq. ft. of housing annually. The company’s factory-as-a-service model enables builders to convert blueprints into manufacturing instructions for robots that produce homes ready for onsite assembly. Promise’s robots can build a single-family unit in approximately six hours, 70% faster than conventional methods, without requiring significant capital investment or technical expertise from builders.

-

Why AI Will Widen the Gap Between Superstars and Everybody Else (WSJ)

Research by Texas A&M Professor Matthew Call challenges the belief that AI will level workplace performance. Instead, top performers gain disproportionate advantages: they leverage domain expertise to craft better prompts, systematically evaluate AI outputs, and experiment freely thanks to the autonomy their organizations grant them. To level the playing field, Call recommends creating AI experimentation sandboxes, establishing knowledge-sharing practices, and redesigning evaluation systems with transparency guidelines on AI usage.

-

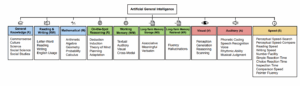

Research: A Definition of AGI (Multi-Institution Contribution)

Researchers introduced a quantifiable AGI framework grounded in human cognitive theory, evaluating AI across ten core domains, including knowledge, reasoning, memory, and processing speed. Current models exhibit "jagged" profiles, excelling at knowledge-intensive tasks while scoring near 0% on long-term memory storage. On the resulting AGI scale, GPT-4 scores 27% while GPT-5 reaches 58%. The framework reveals that AI systems employ "capability contortions," leveraging strengths such as massive context windows to mask fundamental weaknesses and thereby creating a brittle illusion of general intelligence.

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)