The International Conference on Machine Learning (ICML) is one of the premier events in the field of machine learning. This year, the conference received over 9,400 paper submissions, with 2,610 selected for presentation. Among these, only ten were honoured with a best paper award. Notably, many of this year’s award-winning papers emerged from collaborations between industry and academia, reflecting how common such partnerships have become. In 2023, industry-academia collaborations produced 21 significant machine learning models, a sharp rise from just one in 2022. This week we highlight three of the award-winning papers that reflect emerging research trends.

Debating with More Persuasive LLMs Leads to More Truthful Answers

A study by industry and academic researchers from organizations including University College London, Anthropic, FAR AI, and Speechmatics shows that having AI models debate each other can lead to more accurate answers. When AI models argue for different answers, both non-expert models and humans are better at identifying the correct one.

Key Contributions:

- Enhanced Accuracy: AI debates significantly improve answer accuracy compared to non-adversarial methods.

- Persuasiveness Matters: Optimizing debaters for persuasiveness, measured with a metric that does not rely on predefined answers, helps judges identify the correct answer more often.

- Human Oversight: Human judges outperform AI judges when assessing debates, showing the value of human involvement.

- Scalable Method: Debates could be a scalable way to oversee increasingly advanced AI models.

This research suggests that, as AI models grow more capable, optimizing debaters for persuasiveness could yield more reliable information.
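A persuasiveness score that needs no predefined answers can be built from judge verdicts alone. One common approach is an Elo rating fitted to pairwise outcomes: two debaters face off, a judge picks a winner, and the update never checks which answer is actually correct. The sketch below assumes a standard sequential Elo update; the debater names, the K-factor of 32, and the initial rating of 1000 are illustrative assumptions, not the authors' exact procedure.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_ratings(
    verdicts: Iterable[Tuple[str, str]], k: float = 32.0, initial: float = 1000.0
) -> Dict[str, float]:
    """Fit Elo ratings from (winner, loser) pairs decided by a judge.

    Only the judge's verdicts are used; no ground-truth answer is consulted.
    """
    ratings: Dict[str, float] = defaultdict(lambda: initial)
    for winner, loser in verdicts:
        gain = k * (1.0 - expected_score(ratings[winner], ratings[loser]))
        # Zero-sum update: the winner gains exactly what the loser gives up.
        ratings[winner] += gain
        ratings[loser] -= gain
    return dict(ratings)


# Hypothetical judge verdicts between three debater configurations.
verdicts = [
    ("debater_c", "debater_a"),
    ("debater_c", "debater_b"),
    ("debater_b", "debater_a"),
    ("debater_c", "debater_a"),
]
ratings = elo_ratings(verdicts)
for name, score in sorted(ratings.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {score:.1f}")
```

Because the update depends only on which side the judge chose, it works even when the correct answer is unknown. Sequential Elo is sensitive to match order, so a batch fit such as a Bradley-Terry model is a common alternative.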

Considerations for Differentially Private Learning with Large-Scale Public Pretraining

Researchers from ETH Zürich, the University of Waterloo, and Google DeepMind highlight the risks to confidentiality in current training paradigms for large language models (LLMs). The authors argue that privacy challenges in machine learning need to be addressed, particularly when models pretrained on large public datasets are then fine-tuned on private data.

Key Contributions:

- Critique of Training Paradigms: The authors argue that current methods compromise confidentiality for two main reasons:

  - Overestimated Value of Public Pre-Training: In common benchmarks, the private data closely overlaps with the public pretraining data, which can overstate how much public pretraining helps and create a false sense of security.

  - Outsourcing of Private Data: Training large models requires significant computational power, which often forces outsourcing to third parties and puts private data at risk.

The paper calls on the research community to develop solutions to these privacy concerns, especially as smaller models gain popularity.

Genie: Generative Interactive Environments

Researchers from Google DeepMind and the University of British Columbia have introduced Genie, a generative interactive environment trained without supervision on unlabelled Internet videos. Genie can generate a variety of action-controllable virtual worlds from text descriptions, synthetic images, photographs, or sketches.

Key Contributions:

- Scalable Training: Because Genie learns to infer the actions between video frames without action labels, its training can scale efficiently to large amounts of unlabelled video.

- Next State Prediction: Conditioned on past frames and a user's input action, the model predicts the next state.

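- Behaviour Imitation from Unseen Videos: Genie combines a spatiotemporal video tokenizer, an autoregressive dynamics model, and a latent action model, all built on spatiotemporal transformers. The latent action model extracts latent actions between frames; combined with video tokens, these allow the dynamics model to predict future frames. The learned latent actions can also help train agents to imitate behaviours from videos they have never seen.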
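Genie's latent action model compresses the change between consecutive frames into one of a small set of discrete latent actions. A minimal sketch of that discretization step, assuming a VQ-VAE-style nearest-codebook lookup: the 4-entry 2-D codebook and the frame-difference "encoder" are hypothetical stand-ins for components Genie learns with transformers, and the action labels in the comments are for readability only (in Genie, what each code means is learned, not assigned).

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(z: Sequence[float], codebook: Sequence[Vector]) -> Tuple[int, Vector]:
    """Snap a continuous latent to its nearest codebook entry (VQ-VAE style)."""
    idx = min(range(len(codebook)), key=lambda i: squared_distance(z, codebook[i]))
    return idx, list(codebook[idx])


def encode_transition(prev_frame: Sequence[float], next_frame: Sequence[float]) -> Vector:
    """Hypothetical stand-in for the learned encoder: the frame-to-frame change."""
    return [n - p for p, n in zip(prev_frame, next_frame)]


# Toy codebook of 4 discrete latent actions in a 2-D latent space (illustrative only).
CODEBOOK: List[Vector] = [
    [1.0, 0.0],   # e.g. "move right"
    [-1.0, 0.0],  # e.g. "move left"
    [0.0, 1.0],   # e.g. "jump"
    [0.0, 0.0],   # e.g. "no-op"
]

prev_frame = [2.0, 0.0]
next_frame = [2.9, 0.1]
action_id, action_code = quantize(encode_transition(prev_frame, next_frame), CODEBOOK)
print(action_id)  # → 0
```

In the full system, the selected code would be passed with the video tokens to the dynamics model; keeping the codebook small is what makes the inferred actions usable as controls.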

The paper reflects a growing interest in advancing beyond text-centric LLMs toward multimodal models that integrate physical and digital experiences. Recently, Stanford Professor and Radical Ventures Scientific Partner Fei-Fei Li shared her vision of building spatial intelligence with AI – enabling systems to process visual data, make predictions, and interact with humans in the real world.

AI News This Week

-

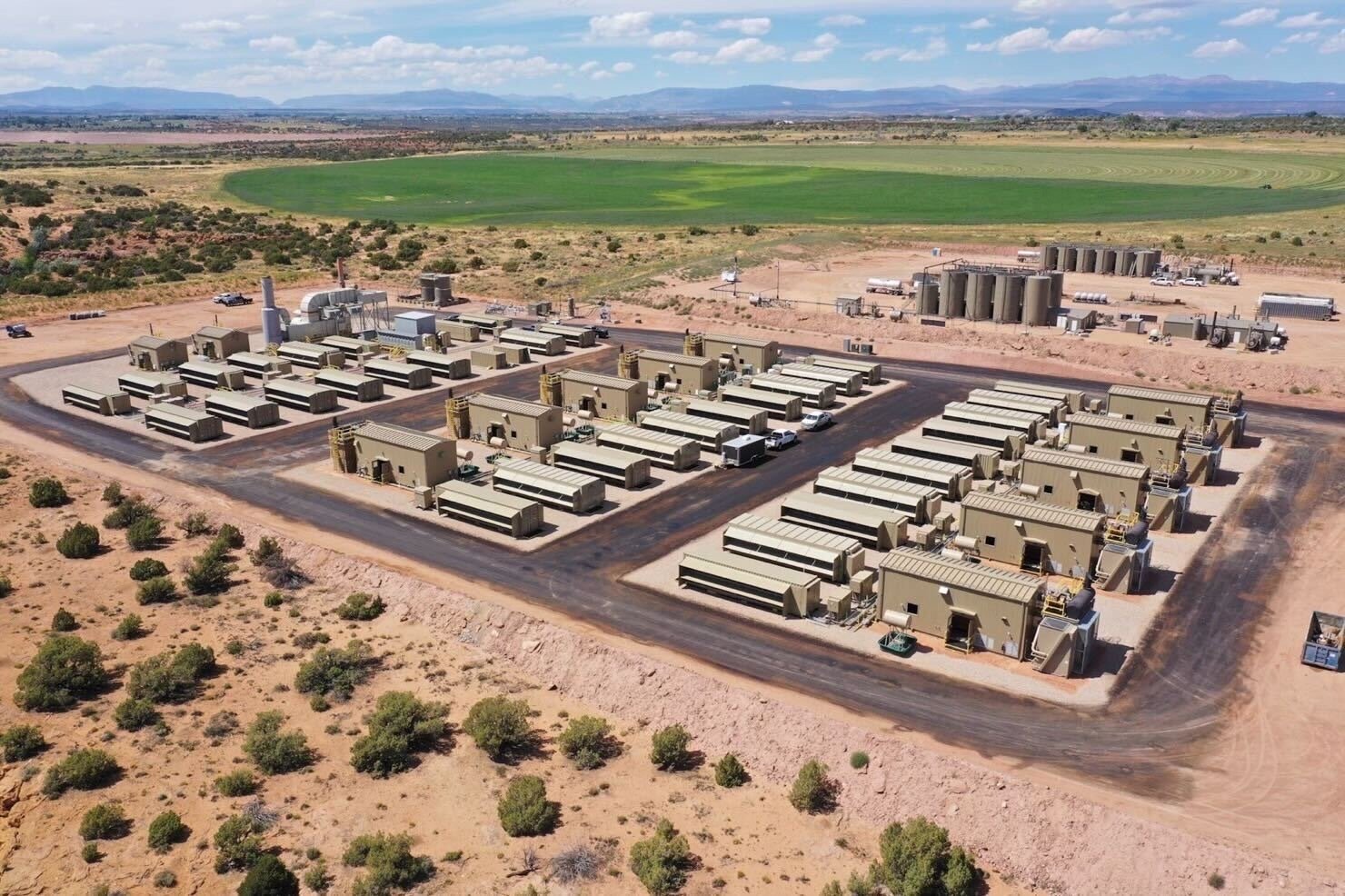

Satellite startup Muon Space raises $56 million (SpaceNews)

Muon Space, a Radical portfolio company, closed its Series B funding round led by Activate Capital, with participation from Acme Capital, Costanoa Ventures, Radical Ventures, and Congruent Ventures. This funding will accelerate the buildout of Muon’s Halo Platform, enhancing its mission-tailored low Earth orbit satellite constellations. Muon Space’s first project, the FireSat Constellation, is a partnership with Earth Fire Alliance aimed at revolutionizing global wildfire response by delivering high-fidelity data to safeguard communities from the growing threat of wildfires. In 2024, Muon secured over $100 million in customer contracts, including a landmark agreement with the Sierra Nevada Corporation to develop and deliver three satellites for its Vindler commercial RF technology. This new round of funding positions the company for significant growth in the space-based sensing and analytics sector.

Inside the Canadian summer school that’s a global hothouse for AI talent (The Logic)

The Canadian Institute for Advanced Research (CIFAR) Deep Learning and Reinforcement Learning (DLRL) summer school celebrates its 20th anniversary this year as a key incubator for AI talent, attracting hundreds of researchers and students from around the globe to learn the latest AI techniques and connect with industry leaders. Alumni, such as Raquel Urtasun (CEO and Founder of Radical Ventures portfolio company Waabi), Graham Taylor (Canada CIFAR AI Chair, the Vector Institute), and Luz Angélica Caudillo Mata (Senior Applied AI Scientist, MDA Space), have made significant contributions to AI, with some founding influential startups.

End-of-life decisions are difficult and distressing. Could AI help? (MIT Technology Review)

Bioethicists David Wendler and Brian Earp have proposed a “digital psychological twin” to aid doctors and families in end-of-life decisions for incapacitated patients. They aim to develop this AI tool using personal data to predict patient preferences, potentially easing the emotional burden on surrogates. While the tool could make these decisions more accurate, it raises ethical concerns about data use and patient autonomy, underscoring the importance of keeping human empathy and ethical judgment at the centre of medical care.

Indonesian fishermen are using a government AI tool to find their daily catch (Rest of World)

In Indonesia, AI adoption is being spearheaded by the National Research and Innovation Agency (BRIN), rather than large enterprises or startups. BRIN is focusing on practical applications across various sectors, including fisheries, disaster management, and law enforcement. For instance, the NN Marlin app uses machine learning and satellite data to help fishermen locate fish more efficiently, boosting their catch and supporting an industry that contributes nearly 3% to Indonesia’s GDP. Significant investments from companies like Nvidia and Microsoft underscore AI’s vital role in shaping the nation’s economic future.

Research – LLM See, LLM Do: Guiding data generation to target non-differentiable objectives (Cohere for AI)

Researchers from Cohere for AI, a non-profit machine learning research lab run by Radical portfolio company Cohere, explored the impact of synthetic data on LLMs. They introduced “active inheritance,” a method that curates synthetic data to amplify desirable attributes, such as lexical diversity, and reduce undesirable ones, such as toxicity. This approach enables targeted improvements in LLMs’ behaviours, offering a cost-effective way to steer models towards specific, non-differentiable objectives during training. The study highlights synthetic data’s role in shaping models’ biases, calibration, and textual attributes, providing a pathway for fine-tuning LLMs toward better performance and ethical alignment.
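Active inheritance steers a model by choosing which synthetic samples it trains on. A minimal sketch of that selection step, assuming best-of-k sampling scored with a distinct-token ratio as a crude stand-in for lexical diversity: the hard-coded `samples` replace calls to a real teacher LLM, and both the metric and the helper names are illustrative assumptions rather than the authors' exact setup.

```python
from typing import Callable, Dict, List, Tuple


def distinct_token_ratio(text: str) -> float:
    """Share of unique whitespace tokens: a crude, non-differentiable diversity proxy."""
    tokens = text.lower().split()
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def select_best(candidates: List[str], score: Callable[[str], float]) -> str:
    """Keep the candidate that maximizes the target score."""
    return max(candidates, key=score)


def curate(
    prompt_to_candidates: Dict[str, List[str]], score: Callable[[str], float]
) -> List[Tuple[str, str]]:
    """Build a fine-tuning set of (prompt, best completion) pairs."""
    return [(p, select_best(c, score)) for p, c in prompt_to_candidates.items()]


# Hypothetical teacher samples (k=3) for one prompt; a real pipeline would sample an LLM.
samples = {
    "Describe the sea.": [
        "The sea is blue and the sea is big and the sea is blue.",
        "Restless grey water folds under wind, salt, and gull cries.",
        "The sea is very very big.",
    ]
}
dataset = curate(samples, distinct_token_ratio)
print(dataset[0][1])  # → Restless grey water folds under wind, salt, and gull cries.
```

The score never needs a gradient, which is why this kind of selection can target objectives a loss function cannot express directly. To reduce an undesirable attribute such as toxicity, the same `curate` call could take a score that negates a toxicity classifier's output.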

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)