The Neural Information Processing Systems (NeurIPS) conference is often referred to as the “Olympics of AI”. The 38th NeurIPS, which took place in December, received 15,600 submissions and showcased 4,000 papers, 56 workshops, 14 tutorials, and 8 keynote talks. With such a vast array of content, we are taking a moment to highlight the emerging themes from the conference. Among these are the question of whether scaling has truly hit a wall, the shift toward AI systems with real-world relevance, and the rising trend of global academic collaboration.

Theme 1: Scaling has hit a wall – or has it?

The debate over the fate of scaling laws prompted heated discussions both on-site and at NeurIPS side events (see debate with Dylan Patel of SemiAnalysis and Jon Frankle of Databricks and MosaicML). Fuelling interest, Ilya Sutskever, co-inventor of AlexNet and Co-Founder of OpenAI, delivered the keynote for the Test of Time Paper Award on the paper that first outlined the scaling hypothesis: “If you have a very big data set and you train a very big neural network then success is guaranteed.” With the emergence of massive models trained on equally massive datasets, this thesis underlies many of the recent advances we have seen in AI. In his talk, Sutskever pointed to the continued growth in compute from better hardware, algorithms, and larger clusters. He conceded that data sourced from the internet is limited, suggesting that scaling can continue only so long as new approaches to data emerge.

Theme 2: AI that has real-world relevance

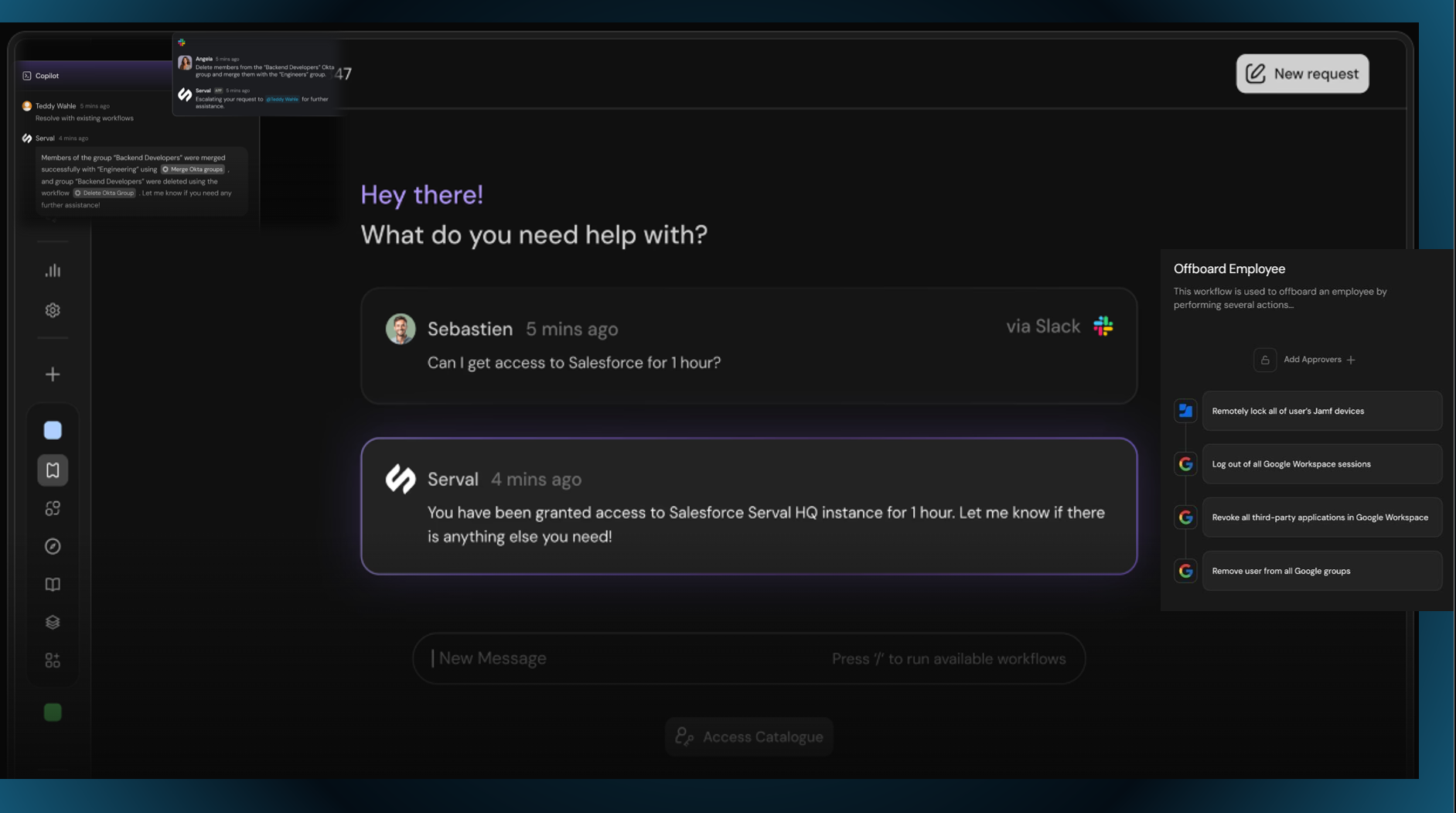

NeurIPS 2024 witnessed a notable spike in interest around embodied intelligence and agents capable of learning through interaction, aligning closely with the growing emphasis on visual intelligence. The significant number of papers and workshops focused on multimodal learning and reinforcement learning in real-world environments echoed this shift toward more integrated, interaction-driven AI systems. Fei-Fei Li, Radical Scientific Partner and Co-Founder of Radical portfolio company World Labs, captured these emerging themes in her talk “From Seeing to Doing: Ascending the Ladder of Visual Intelligence,” where she discussed the progression from passive perception (e.g., object recognition) to active, actionable understanding (e.g., interpreting visual cues to enable autonomous decision-making and interaction). Li’s insights reflect a fundamental shift toward integrating vision and decision-making, emphasizing the development of AI systems that go beyond raw computational scale to focus on usability, adaptability, and real-world relevance. This holistic approach connects the technical advancements in scaling laws to practical applications, where AI systems are designed to reason, interact, and better meet human needs in real-world contexts.

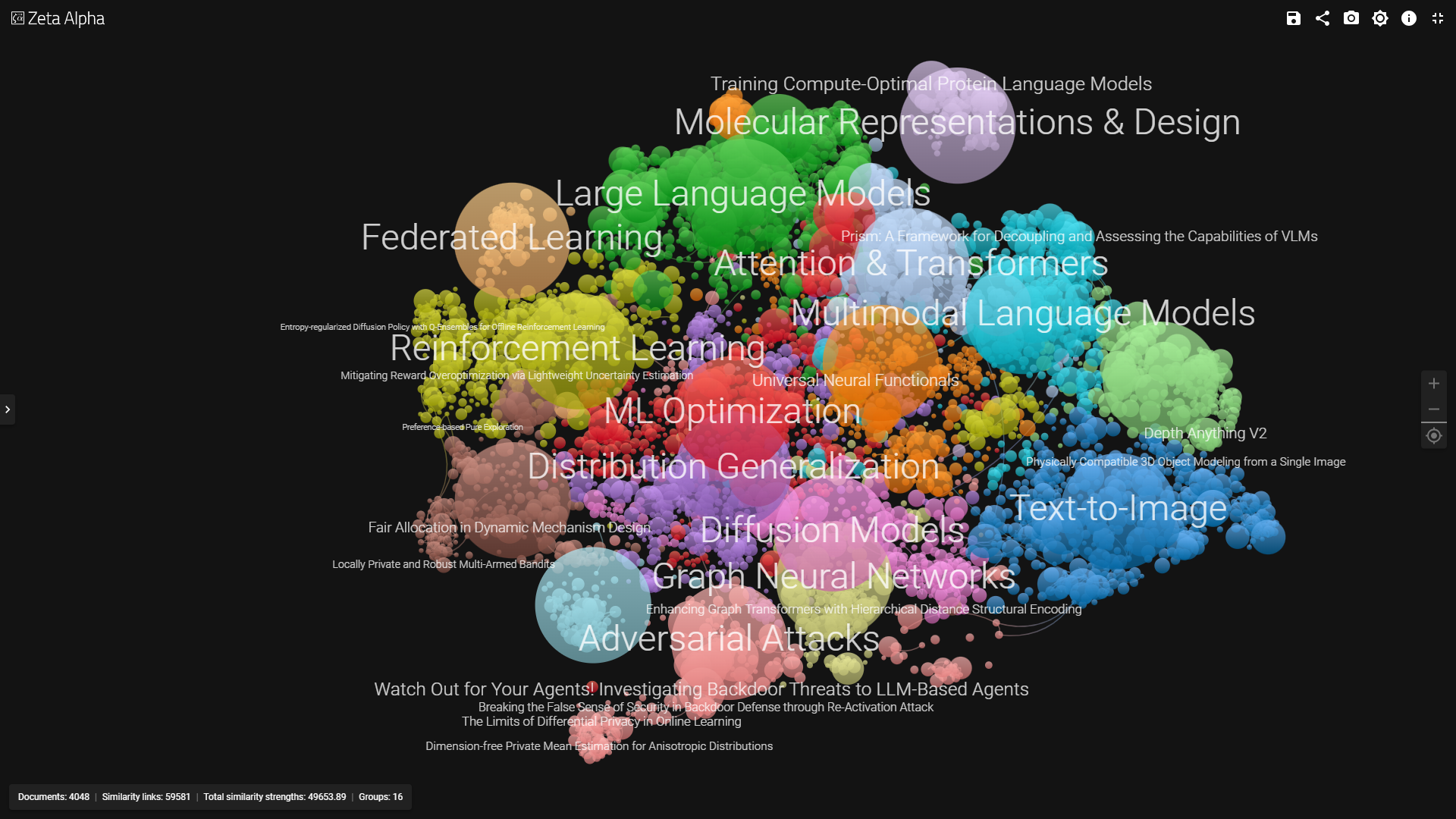

Theme 3: Global collaboration grows

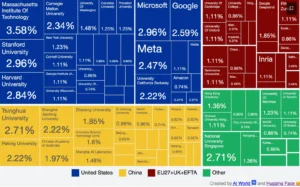

Source: Pankaj Singh, Analytics Vidhya, 2025

This year’s conference underscored the growing trend of industry-academic collaboration, driven by the increasing difficulty of achieving AI innovation independently. The rapid pace of AI development demands vast resources, expertise, and partnerships that exceed what most individual organizations can provide. Research contributions at NeurIPS continue to be led by US institutions, with MIT (3.58%), Stanford (2.96%), Microsoft (2.96%), and Harvard (2.84%) leading the way. At the same time, a record 21 key AI models emerged from industry-academic partnerships last year, highlighting academia’s continued relevance and crucial role in advancing cutting-edge AI research.

While US institutions continue to dominate, Asia, particularly China, remains a strong contributor, with substantial participation from Tsinghua University (2.71%) and the National University of Singapore (2.71%). Canada also made significant contributions through institutions such as the University of Montreal and the University of Toronto. This trend demonstrates that academic institutions, where students are encouraged to explore specialized topics, remain critical to AI innovation.

AI News This Week

How hallucinatory A.I. helps science dream up big breakthroughs (The New York Times)

Scientists are harnessing the creative outputs of AI hallucinations to accelerate discoveries. Recently, Nobel Prize winner David Baker used AI hallucinations to design 10 million new proteins not found in nature, leading to breakthroughs in treating cancer and viral infections. Unlike language model hallucinations, these AI-generated patterns are constrained by physical laws and empirical data, and they require experimental validation through established scientific protocols. Experts like DeepMind’s Pushmeet Kohli view this AI creativity as key to unlocking future scientific discoveries, from weather prediction to drug development.

Four AI predictions for 2025 (The Financial Times)

Financial Times columnist Richard Waters, reflecting on AI’s trajectory after ChatGPT’s initial impact, outlines four predictions for 2025. AI model scaling will show diminishing returns as the focus shifts to reasoning systems like OpenAI’s o3 model. No breakthrough “killer apps” will emerge despite increasing AI integration. Nvidia will maintain chip market leadership through its Blackwell cycle. Finally, the AI spending boom will continue amid market volatility. Engaging in a similar exercise, Radical Partner Rob Toews recently published his 10 AI predictions for 2025 in his Forbes column.

The next generation of neural networks could live in hardware (MIT Technology Review)

Presenting at NeurIPS 2024, Stanford researcher Felix Petersen introduced neural networks built directly from computer chips’ logic gates instead of traditional software-based perceptrons. The technique uses “differentiable relaxations” to enable backpropagation training: it creates smooth functions that behave like logic gates on 0s and 1s. Despite longer training times, the resulting hardware implementation used fewer than one-tenth of the logic gates and took one-thousandth of the processing time of binary neural networks for image classification, while consuming a fraction of the energy of perceptron-based systems.
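To make the idea of a “differentiable relaxation” concrete, here is a minimal sketch in plain Python. It is not the researchers' code. The function names are ours, and the softmax-weighted mixture used to pick a gate is an assumption about one way such a choice could be made trainable. The sketch only illustrates the property described above: each function matches a logic gate exactly on 0s and 1s, yet stays smooth for values in between, so gradients exist.

```python
import math

# Real-valued ("soft") versions of two-input logic gates.
# On inputs in {0, 1} they return the exact Boolean result;
# on inputs in [0, 1] they are smooth, so they can be differentiated.

def soft_and(a, b):
    return a * b

def soft_or(a, b):
    return a + b - a * b

def soft_xor(a, b):
    return a + b - 2 * a * b

# Hypothetical candidate set; a real system may consider more gate types.
GATES = {"and": soft_and, "or": soft_or, "xor": soft_xor}

def softmax(logits):
    """Turn arbitrary scores into weights that are positive and sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def soft_gate(a, b, logits):
    """Assumed trainable node: a softmax-weighted blend of candidate gates."""
    weights = softmax(logits)
    return sum(w * gate(a, b) for w, gate in zip(weights, GATES.values()))

def harden(logits):
    """After training, keep only the highest-scoring gate for hardware."""
    names = list(GATES)
    best = max(range(len(logits)), key=lambda i: logits[i])
    return names[best]
```

For example, `soft_and(1, 1)` is exactly 1 and `soft_and(1, 0)` is exactly 0, while `soft_and(0.9, 0.8)` is roughly 0.72, a value that changes smoothly as either input changes. Calling `harden` on a node's scores returns the name of the single gate that would be kept once training is finished.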

How AI is unlocking ancient texts — and could rewrite history (Nature)

Researchers are using neural networks to unlock previously unreadable historical texts. The Vesuvius Challenge successfully revealed 16 columns of Greek philosophical text from scrolls damaged by Mount Vesuvius, while Oxford’s Ithaca system, combined with expert analysis, achieved 72% accuracy in restoring ancient inscriptions. Researchers are also applying recurrent neural networks to decipher Mycenaean tablets and multilingual transformers to read documents from the Korean royal archives. Neural networks have the potential to provide researchers with unprecedented amounts of data, offering insights that were previously inaccessible.

Research: Foundation models enable automated discovery of artificial life (MIT/Sakana AI/OpenAI/IDSIA)

Researchers introduce Automated Search for Artificial Life (ASAL), the first framework using vision-language foundation models to discover and evaluate artificial life simulations. Artificial life is a field of study that examines natural life, its processes, and its evolution through simulations with computer models, robotics, and biochemistry. Historically, the field has relied heavily on manual design and trial-and-error to make discoveries that contribute to endeavours such as biomimicry. The ASAL framework enables three key capabilities: finding simulations that produce specific target phenomena, discovering simulations that generate ongoing novelty, and mapping diverse simulation spaces. ASAL marks a shift in how researchers can explore artificial life systems by providing quantitative measures for previously qualitative aspects of artificial life.

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)