This week, Radical portfolio company Cohere released its latest model, Command A. While it rivals and outperforms industry leaders in key business use cases, what truly sets this launch apart is the model’s groundbreaking efficiency. In today’s Radical Reads, Nick Frosst, Cohere’s Co-founder, shares his reflections on the launch and the drive to create more efficient AI.

As enterprises move beyond AI experimentation to production-grade deployments, priorities are shifting. Compute costs, scalability, and infrastructure demands now sit at the forefront of decision-making—particularly for industries like finance, healthcare, energy, manufacturing and the public sector where data privacy and regulatory compliance add layers of complexity. This is the problem space Cohere’s latest model, Command A, is designed to solve.

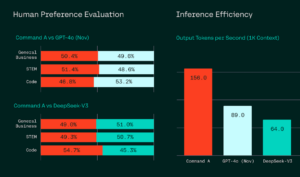

Command A is optimized for agentic tasks that execute multi-step workflows, and it is the engine that powers Cohere’s AI workspace, North. As cloud costs rise and regulators scrutinize data handling, enterprises need models that align with operational realities. Across a wide range of metrics that matter for enterprise use cases, Command A’s performance stacks up favorably against models such as GPT-4o and DeepSeek while being significantly more compute efficient: it can run on just two H100 or A100 GPUs, compared to DeepSeek, which can require up to 32, or GPT-4o, which is even less efficient to deploy.

Head-to-head human evaluation win rates on enterprise tasks. All examples are blind-annotated by specially trained human annotators, assessing enterprise-focused accuracy, instruction following, and style. Throughput comparisons are between Command A on the Cohere platform, GPT-4o, and DeepSeek-V3 (TogetherAI) as reported by Artificial Analysis.

Our approach at Cohere underscores a divergence in the AI market. While competitors have relied on tens of billions in funding to scale, we built Cohere and Command A with under $1 billion in capital. This capital efficiency isn’t incidental; it reflects a focus on solving targeted enterprise problems rather than chasing parameter counts. In fact, we spent less than $30 million training Command A, while others talk about billion-dollar models.

By decoupling performance from compute intensity, Command A challenges the assumption that enterprise-grade AI requires hyperscale resources. Its architecture makes advanced AI accessible to every business—even those operating on the tightest of margins—allowing efficiency, transparency, and deployability to become the new benchmarks for enterprise success.

Read more in Bloomberg: AI Companies Embrace Efficient Models That Run on Fewer Chips

AI News This Week

- How the AI talent race is reshaping the tech job market (The Wall Street Journal)

According to data from the University of Maryland’s AI job tracker, nearly 25% of U.S. tech job postings in 2025 now require artificial intelligence skills, and 36% of IT jobs posted in January were AI-related. Companies in finance and professional-services industries, such as banks and consulting firms, are also looking for technology staff who know how to use or build AI algorithms and models. After ChatGPT’s launch in 2022, AI job postings increased by 68%, even as overall tech job listings declined by 27%. Industry experts note that workers with AI skills command premium salaries and enjoy greater job security in the current market.

- AI models are dreaming up the materials of the future (The Economist)

AI is revolutionizing materials science by analyzing billions of potential metal-organic frameworks (MOFs), sponge-like molecules that capture and release carbon dioxide. For example, Radical portfolio company Orbital Materials has trained custom AI models using supercomputer simulations to generate training data for predicting chemical interactions. Unlike purely virtual labs, Orbital maintains in-house facilities to verify and manufacture its AI-discovered MOFs at scale. The company recently partnered with Amazon Web Services to reduce carbon emissions at AWS data centers.

- Should we be moving data centers to space? (MIT Technology Review)

Space-based data centers are emerging as a potential solution to Earth’s computing challenges. Earth-bound facilities currently consume 1-2% of global electricity, a share projected to double by 2030. Radical Partner Rob Toews explores the idea of AI data centers in space in his list of 2025 AI predictions, pointing out that “a computing cluster in orbit can enjoy free, limitless, zero-carbon power around the clock.” Space-based data centers also offer natural cooling and enhanced security from physical threats, natural disasters, and power outages. Early prototypes are already testing key technologies, with commercial deployment projected by 2027 as launch costs decrease with innovations like reusable rockets.

- AI tools are spotting errors in research papers: inside a growing movement (Nature)

New AI initiatives employ large language models to detect errors in scientific papers, representing a shift in research integrity verification. These systems use sophisticated reasoning models with tailored prompts to identify factual inaccuracies, calculation errors, and methodological flaws. The approach is promising, with one project analyzing 37,000 papers, but false positives remain a hurdle, occurring in approximately 10% of cases. Ultimately, these tools aim to reliably flag errors before publication, because retracting a published paper is difficult.

- Research: Factorio learning environment (Jack Hopkins/Anthropic)

Researchers have unveiled the Factorio Learning Environment (FLE), a benchmark testing AI’s long-term planning and resource optimization capabilities. Based on the popular game Factorio, FLE provides exponentially scaling challenges without a completion state, unlike most benchmarks, which AI systems eventually master. Models particularly struggle with spatial reasoning, coordinating more than six machines, and producing items requiring over three ingredients. The benchmark serves as a meaningful proxy for evaluating AI’s potential for technological bootstrapping, the ability to optimize complex systems, by measuring production throughput from basic automation to processing millions of resources per second.

Radical Reads is edited by Ebin Tomy.