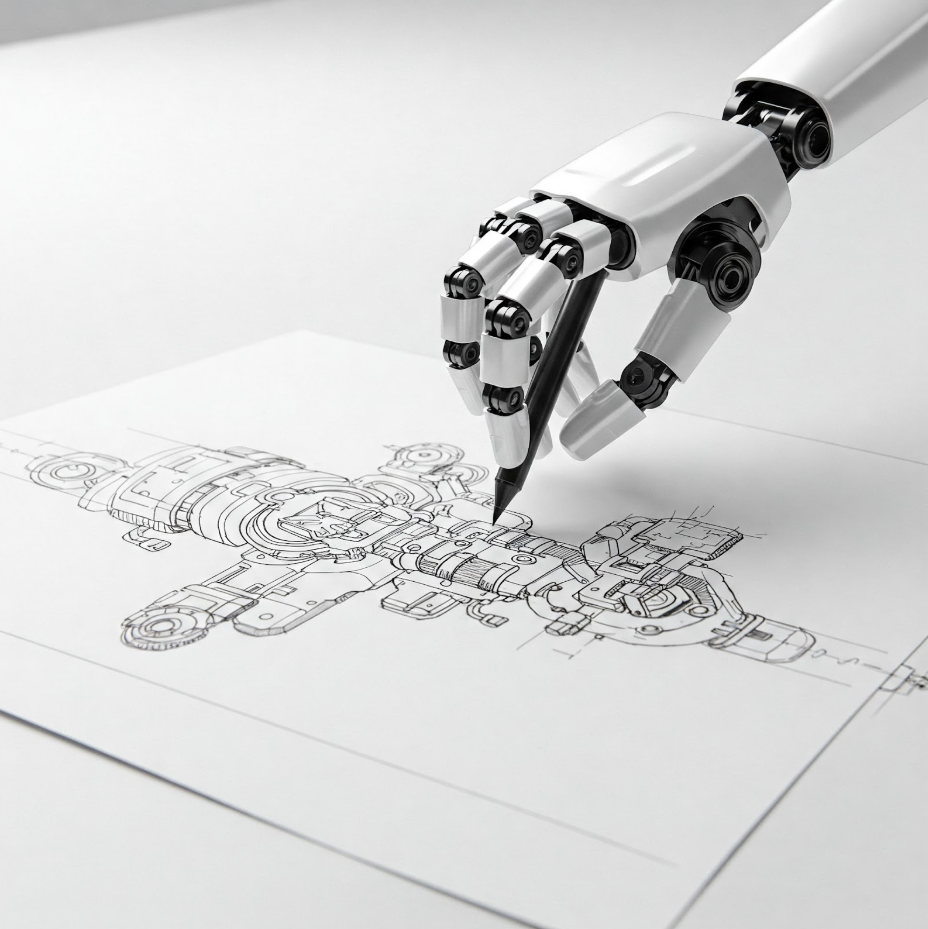

This week, Radical Ventures announced our lead investment in P-1 AI, a company developing artificial general engineering intelligence to help people design complex physical systems more efficiently. Founded by world-class engineers, P-1 is reimagining engineering workflows.

As the adage goes, we were promised flying cars, but instead got 140 characters. Can AI finally help us build things we don’t know how to create?

AI is transforming software, giving people new digital superpowers. With LLMs, users can generate and understand text, and the burgeoning capabilities of agents promise to automate rote digital tasks. But the world of bits is just one sliver of human experience. We live our lives in a built environment made of atoms. How can AI enhance and transform physical systems such as planes, trains, and automobiles, and help design new, unprecedented products?

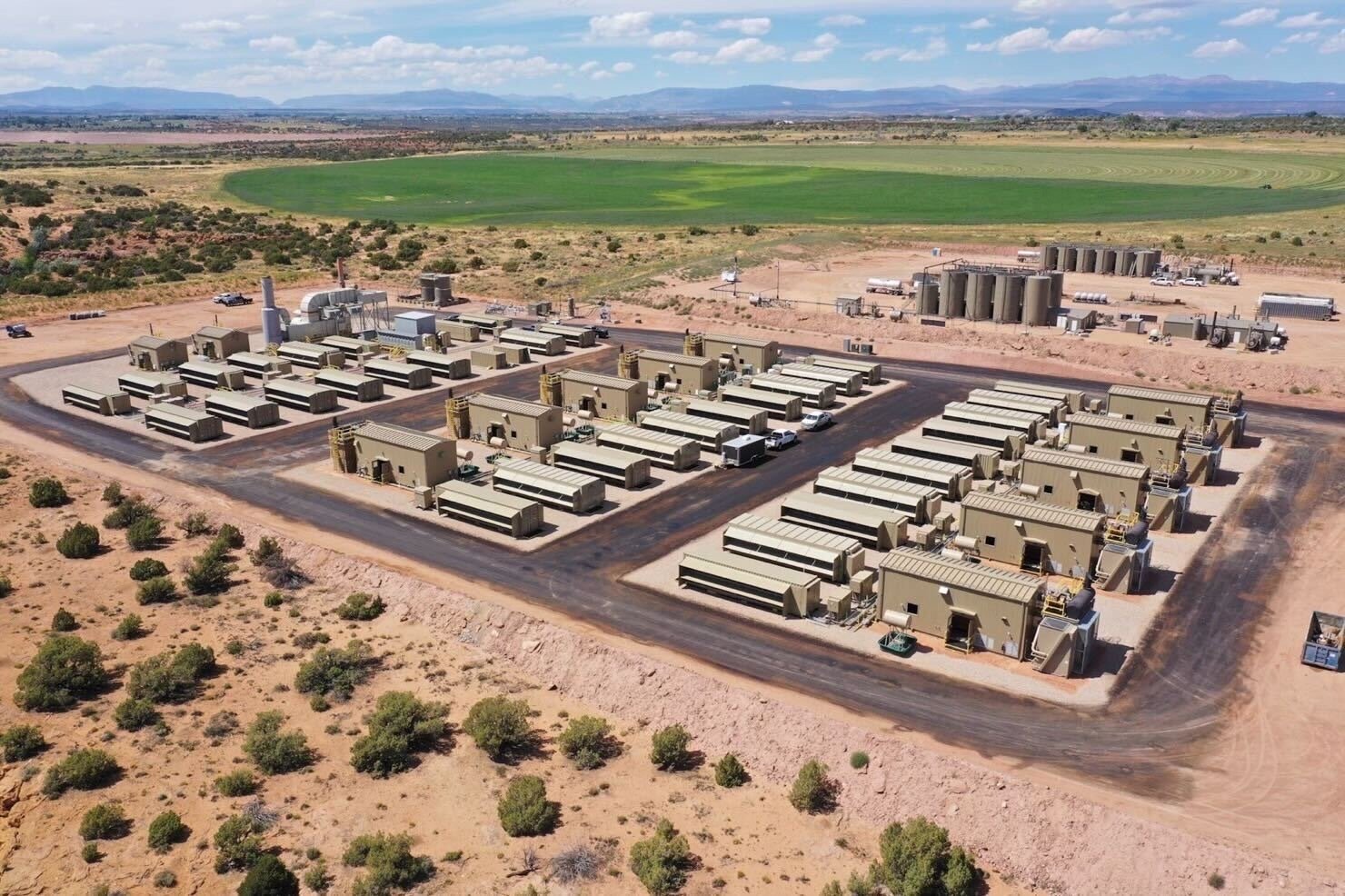

This is the animating principle for Radical’s newest investment, P-1, a company developing artificial general engineering intelligence to help engineering teams design physical systems more efficiently and at unprecedented levels of complexity. P-1’s AI agent, nicknamed Archie, is trained on a synthetic design dataset to learn the underlying physics of the product domain and to perform quantitative and spatial reasoning. By taking on basic engineering tasks, including distilling key design drivers from requirements, generating product concepts and derivatives, conducting first-order design trades, and selecting and using the right engineering tools for detailed design, Archie can enhance team productivity and ultimately enable us to design new products.

P-1 is led by a team with both deep engineering domain knowledge and world-class AI expertise. CEO Paul Eremenko is a decorated aerospace leader who was formerly CTO of Airbus and later of United Technologies Corporation (now RTX). He also spent several years at DARPA, where he launched the agency’s advanced design tools and manufacturing initiatives and headed its Tactical Technology Office, and worked as an engineering director at Google. Cofounder Aleksa Gordić hails from Google DeepMind, and cofounder Adam Nagel was formerly an engineering director at Airbus Silicon Valley, United Technologies, and Raytheon.

Archie is starting with simpler product domains in the built world that face commercial bottlenecks. Over time, P-1 plans to use data and human feedback to expand into broader aerospace applications. Its long-term ambition is to develop entirely new systems as fantastical and impactful as starships and Dyson spheres.

We’re thrilled to be backing Paul, Aleksa, Adam and team in leading their seed financing, alongside outstanding angels including Jeff Dean, Peter Welinder, and Zak Stone. P-1 is hiring world-class applied AI researchers and engineers to pursue their ambitious mission to build engineering general intelligence. Apply here to join them.

Read more about P-1’s journey in this week’s feature in Fortune.

AI News This Week


The Diverging Future of AI (Financial Times)

There is an ongoing debate over whether AI’s future belongs to general agents that can handle any task or to specialized digital assistants built for narrow functions. General agents could, for example, handle product research and recommendations from a single query, upending traditional consumer funnels in e-commerce. Specialized agents, on the other hand, are significantly cheaper to build and run, thanks to techniques like distillation, which transfers the capabilities of larger models into smaller ones. In the post-DeepSeek era, the push for efficiency has also put new emphasis on post-training reinforcement learning and test-time compute techniques. Regardless of which approach prevails, these innovations promise smarter, cheaper agents for the enterprises and consumers who use them.


Here’s Why We Need to Start Thinking of AI as “Normal” (MIT Technology Review)

Researchers argue that AI should be viewed as a “normal” general-purpose technology, one adopted gradually, much like electricity or the internet. They draw a critical distinction between rapid AI development in labs and slower real-world adoption, which has historically lagged by decades. The authors contend that terms like “superintelligence” are too speculative to be useful, and predict that rather than fully automating work, AI will create new human roles for monitoring and supervising AI systems.


The Great Language Flattening (The Atlantic)

There are indications that AI-generated writing is changing human language. For example, whereas text messaging and social media pushed people toward concision, researchers have observed that people exposed to AI begin to emulate its verbose style. AI makes language and communication more accessible and breaks the link between effort and length in writing: it can generate 10,000 words as easily as 1,000. Beyond length, some researchers note that as AI models are increasingly trained on internet text that contains their own output, a new standardized form of language may emerge, one aligned with AI patterns and distinct from the way humans communicate with each other.


AI Scientist ‘Team’ Joins the Search for Extraterrestrial Life (Nature)

Researchers have unveiled AstroAgents, an AI system of eight specialized agents that autonomously analyze data and generate scientific hypotheses about the origins of life. The system processes mass spectrometry data through a workflow in which agents act as data analysts, planners, scientists, and critics. In tests on meteorite and soil samples, AstroAgents generated more than 100 potential hypotheses about extraterrestrial organic molecules. NASA plans to use the technology to analyze samples from Mars in the 2030s to search for evidence of life beyond Earth.


Research: Welcome to the Era of Experience (DeepMind/University of Alberta)

AI luminaries David Silver and Richard Sutton outline a pivotal shift in AI development that they call “the era of experience,” in which agents will learn primarily from their own interactions with the environment rather than from curated human data. The authors identify four key characteristics that will define this new paradigm: agents operating in continuous streams of experience rather than isolated interactions, rich environmental grounding rather than dialogue alone, rewards derived from environmental feedback rather than human judgment, and reasoning grounded in experience rather than in human thought patterns. From this perspective, superintelligence becomes an achievable engineering challenge, reached through autonomous, self-learning systems.

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)