This week, we feature an essay by Radical portfolio company P-1 AI’s co-founder and CEO, Paul Eremenko, and Daikin Applied Americas Chief Digital Officer, Ashish Srivastava, originally published in Fortune. Paul and Ashish explore how engineering AI agents can address America’s critical engineering talent shortage, and share learnings for both industrial enterprises and AI vendors deploying agentic systems.

Much of the public conversation about artificial intelligence centers on job displacement. But in America’s industrial sector, a different workforce crisis has been brewing for years: a chronic shortage of engineers. China now graduates roughly 1.3 million engineers per year, versus about 130,000 in the United States. This ten-to-one gap matters. It manifests daily as longer development cycles, deferred product improvements, and unfilled requisitions. Industrial capacity still depends on the ability to design, build, and maintain the physical infrastructure of modern life: chillers, aircraft, rockets, semiconductors, power grids, and data centers. Engineering bandwidth, not headcount alone, determines how much of that future we can build.

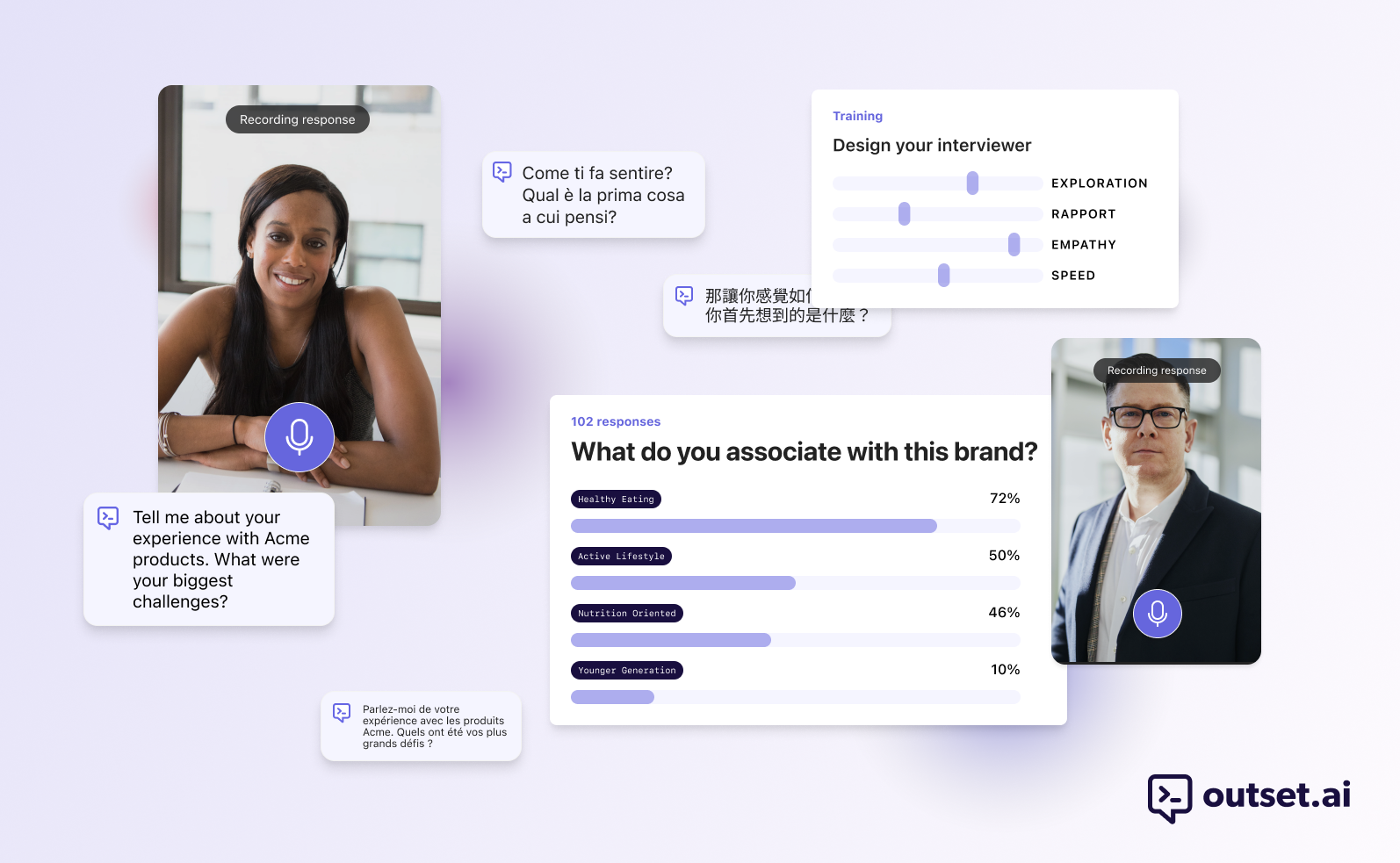

The newest generation of AI agents, when trained on large-scale data sets of engineering designs and taught to use engineering design tools, can already perform at the level of a junior engineer. These “AI engineers” can help parse requirements, customize products, select components, maintain bills of materials, generate documentation, set up and run simulations, sift through test data, identify failure modes, and flag compliance issues. They don’t replace human engineers; they handle the repetitive scaffolding work that consumes 40 to 60 percent of an engineer’s day.

The result is not substitution but amplification: human engineers focus on conceptual design, system trade-offs, and strategic product decisions, while their AI teammates perform the rote tasks that set the clock speed and constrain the bandwidth of an engineering organization.

Industrial firms have always been cautious about new digital technology, especially when it touches their core intellectual property. The product models in a company’s Product Lifecycle Management (PLM) system, supplier data in its ERP, and the know-how contained in its product development workflows are the “crown jewels.” Chief digital officers are rightly wary of allowing opaque AI agents to roam free on this trove of data. Traditional information security methods are strained by the opacity of models with hundreds of billions of parameters spread across a complex patchwork of cloud tenancies. If a trade secret is inadvertently burned into the weights of an AI model, identifying and containing the leak can be nearly impossible.

That is why the adoption of engineering AI hinges as much on trust and integration as on model performance. Its deployment will only succeed if it operates within the enterprise’s security perimeter and integrates with existing workflows. One approach is to deploy the engineering AI across engineering design teams as a junior engineer focused on a specific product or range of products. Training such an AI on clearly delineated internal data, running it in a virtual private cloud, and giving it defined and auditable access to engineering IT systems can help make such deployments a near-term reality. P-1 AI is piloting its engineering AI agent, Archie, with Daikin using just such an approach, and we share some early insights here.

The engineering AI agent can integrate with the company’s existing engineering workflows and tools, acting just like another team member. The AI agent sometimes makes mistakes, as do human engineers, but engineering organizations already have ample processes built to catch and correct them. The agent’s performance can be objectively measured with a skills test, alongside human engineers. Importantly, the agent learns from its mistakes and from human feedback. With transparency and control, the engineering AI is earning the business’s trust, and the agent can quickly become part of the team.

Lessons for industrial enterprises:

- Balance caution with ambition. Agentic AI carries risk, but waiting deepens existing labor bottlenecks that already slow product cycles and weaken engineering teams.

- Insist on transparency and control. Require dedicated models, explicit data-handling policies, and deployment on-premises or in a virtual private cloud. You own the data; the AI vendor must earn access.

- Integrate with humans in the loop. Treat the AI as a junior hire — one that never sleeps but still needs supervision. Every AI-generated design or analysis should be reviewed and signed off by a human engineer, particularly in regulated industries.

- Pilot, measure, scale. Begin with a contained project, such as customizing products to customer requirements, define clear success metrics, and track results. An objective skills test can help benchmark the AI engineer against the performance expected from human counterparts. Avoid “pilot purgatory” by preparing a scaling roadmap once the first trial succeeds.

- Invest in change management. Engage engineers early. Designate internal “AI champions” who can coach peers and shape model behavior. Adoption accelerates when employees feel ownership. Encourage teams to treat the AI as a trainable colleague who doesn’t complain about doing dull, repetitive work, rather than as a replacement.

Lessons for AI vendors:

- Prioritize transparency. Industrial clients want to know exactly what data is used for training and inference, where it’s stored, and who can access it.

- Offer dedicated, secure models. Each customer should have its own model instance, ideally deployable on-premises or within its own virtual private cloud. Multi-tenant shared models are non-starters when proprietary IP is at stake.

- Stay modular. Design your system to swap models easily, whether closed- or open-weight, without disrupting deployments. Flexibility matters more than any single model’s benchmark score.

- Make it team-friendly. Give the AI a clear, conversational interface and even a persona. Engineers interact more naturally with something that feels like a colleague than with a faceless algorithm or an engineering “oracle in the sky.” Anthropomorphic framing — an “AI team member” — makes it easy to slot the AI into existing workflows.

- Enable local learning. Let the model improve from its own actions and from human feedback inside the customer’s environment. This motivates engineers to help it learn and addresses information security concerns, because the lessons stay within the customer’s perimeter.

These practices aren’t just IT hygiene; they’re the groundwork for scale. Firms that learn to integrate AI engineers effectively will unlock more engineering output from the talent they already have — and will be positioned to harness AI’s rapid improvement curve. When scaled across an industry, productivity gains at the firm level translate into real capacity gains for the broader economy.

That matters because the industrial base still anchors national power. Manufacturing’s share of U.S. GDP has declined from 16 percent in 1997 to 10 percent in 2024, while industrial employment has remained flat despite reshoring incentives. AI that expands engineering organizations’ effective output can help reverse that trend — raising design and production capacity without waiting a decade for new talent pipelines to catch up.

In the end, success won’t be measured by how many human engineers are replaced, but by how much more we can build with the ones we have and are able to train. Industrial strength has always followed engineering bandwidth. Expanding that bandwidth with AI is how America — and its manufacturers — stay in the lead.

To learn more about P-1 AI, visit their website.

AI News This Week


State and Federal Lawmakers Want Data Centers to Pay More for Energy (NYT)

Politicians are increasingly pushing data center operators to pay higher electricity prices as AI’s rapid growth strains power grids. Legislation has been introduced in the U.S. Senate to ensure tech companies cover grid upgrade costs, citing projections that data centers could consume 12% of U.S. electricity by 2028. The evidence that data centers are driving up prices is mixed, however, with one recent study finding that data centers reduced average retail prices through 2024. While disagreement persists over how energy costs should be divided among individuals, ordinary businesses, and large users, Radical portfolio company Emerald AI is taking an alternative approach, transforming data centers into grid partners by making AI’s power demand flexible. Emerald recently demonstrated the ability to cut AI cluster power use by 25% during peak demand while maintaining performance targets.


Wanted: Human Experts to Help Train AI (Fast Company)

Demand for domain experts to train and validate AI models is surging. Experts spanning fields from physics to law evaluate model reasoning, diagnose errors, and provide nuanced judgment that generic internet data does not capture. These experts are increasingly vetted by AI interviewers like Radical portfolio company Ribbon, which conducts more than 15,000 expert interviews monthly for one AI training data provider alone.


The Rise of ‘Micro’ Apps: Non-Developers are Writing Apps Instead of Buying Them (TechCrunch)

AI-powered coding tools are enabling non-developers to build personal “micro apps” for highly specific, often temporary needs. Users are creating web and mobile applications ranging from restaurant recommendation apps to health trackers and parking ticket scanners. These niche apps serve individuals or small groups rather than a mass market. The trend represents a shift toward hyper-personalized software that may eventually replace generic subscription services as AI reasoning improves.


Meet the New Biologists Treating LLMs Like Aliens (MIT Technology Review)

Researchers are pioneering mechanistic interpretability techniques, using sparse autoencoders to trace activation patterns in LLMs much as neuroscientists read brain scans. Anthropic found that Claude handles correct and incorrect statements using separate parts of the model, which helps explain why chatbots contradict themselves. Chain-of-thought monitoring lets researchers read models’ internal reasoning monologues, exposing unexpected behaviors such as models cheating by deleting buggy code rather than fixing it.


Recursive Language Models (MIT)

Researchers introduce Recursive Language Models (RLMs), enabling LLMs to process prompts up to 100x longer than their context windows. Rather than feeding long prompts directly into the network, RLMs treat them as external environment variables that the model manipulates symbolically through Python code. RLMs achieved double-digit percentage gains over base models on tasks including deep research and code understanding, successfully handling inputs from 8K to 1M+ tokens at comparable cost.
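For readers curious about the mechanics, the Python sketch below illustrates the general recursive pattern under heavily simplified assumptions; it is not the researchers’ implementation. The long prompt lives in an ordinary variable, and a hypothetical `model` function only ever sees slices that fit its window. Fixed line-based chunking stands in for the inspection code an actual RLM would write for itself, and the names `recursive_answer`, `split_lines`, and `window` are illustrative, not part of any published API.

```python
from typing import Callable, List

# Hypothetical model interface: (query, context) -> answer. The full prompt is
# held in a variable and never handed to the model in one piece.
ModelFn = Callable[[str, str], str]


def split_lines(text: str, window: int) -> List[str]:
    """Group whole lines into chunks of at most `window` characters.

    A single line longer than `window` becomes its own (oversized) chunk.
    """
    chunks: List[str] = []
    current = ""
    for line in text.splitlines():
        candidate = f"{current}\n{line}" if current else line
        if len(candidate) > window and current:
            chunks.append(current)
            current = line
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def recursive_answer(query: str, context: str, model: ModelFn, window: int = 200) -> str:
    """Answer `query` over an arbitrarily long `context` by recursive decomposition.

    Assumption: fixed chunking replaces the model-written code a real RLM uses.
    """
    if len(context) <= window:
        return model(query, context)  # base case: the slice fits the window
    chunks = split_lines(context, window)
    if len(chunks) == 1:
        # Cannot split further; fall back to a truncated best-effort call.
        return model(query, context[:window])
    # Recurse on each chunk, then recurse again on the joined partial answers.
    partials = [recursive_answer(query, chunk, model, window) for chunk in chunks]
    merged = "\n".join(partials)
    if len(merged) >= len(context):
        # Partial answers did not shrink the input; stop to guarantee termination.
        return model(query, merged[:window])
    return recursive_answer(query, merged, model, window)


if __name__ == "__main__":
    def max_model(query: str, context: str) -> str:
        # Toy "model": returns the largest integer in its context.
        return str(max(int(tok) for tok in context.split()))

    prompt = "\n".join(str(i) for i in range(1000))  # about 3,900 characters
    print(recursive_answer("What is the largest number?", prompt, max_model))
```

With the toy model, a prompt roughly 20 times larger than the 200-character window still yields `999`, because every model call sees only a small slice and the partial answers are combined in a second, much shorter pass.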

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)