Many of the popular large language models in use today are trained predominantly on English-language data. Although English is the primary language of the internet, accounting for 63% of all website content, it is spoken by only 16% of the global population. Cohere For AI, a non-profit research lab run by Radical Ventures portfolio company Cohere, focuses on tackling complex machine learning challenges and expanding access to machine learning research. It recently released a primer on the "language gap" in AI. The primer discusses the gap's origins and potential consequences, and presents actionable strategies for policymakers and governance bodies to address these challenges. This week, we share a brief summary.

More than 7,000 languages are spoken around the world today, but current state-of-the-art large language models cover only a small percentage of them and favor North American linguistic and cultural perspectives. This is partly because many non-English languages are considered "low-resource," meaning they are less prominent in computer science research and lack the high-quality datasets needed to train language models.

This language gap in AI has several undesirable consequences:

- Speakers and communities of many languages may be left behind as language models that do not cover their languages become increasingly integral to economies and societies.

- The lack of linguistic diversity in models can introduce biases that reflect Anglo-centric and North American viewpoints, and undermine other cultural perspectives.

- The safety of all language models is compromised without multilingual capabilities, creating opportunities for malicious users and exposing all users to harm.

There are many global efforts to address the language gap in AI, including Cohere For AI's Aya project, a global initiative that has developed and publicly released multilingual language models and datasets covering 101 languages. However, more work is needed.

To contribute to efforts to close the AI language gap, the primer offers four considerations for those working in policy and governance around the world:

- Direct resources towards multilingual research and development.

- Support multilingual dataset creation.

- Recognize that the safety of all language models is improved through multilingual approaches.

- Foster knowledge-sharing and transparency among researchers, developers, and communities.

The evidence outlined in the primer suggests that closing the AI language gap will require concerted efforts across the ecosystem, from those developing and deploying AI models to those working in policy and governance settings.

AI News This Week


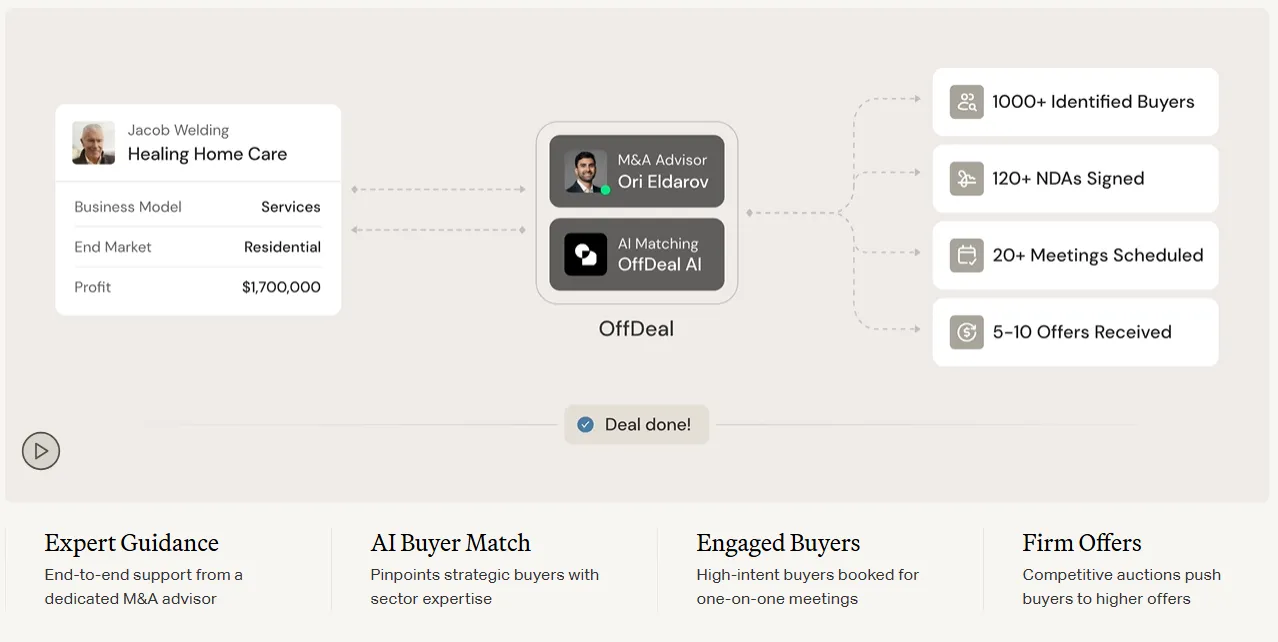

AI startup Cohere valued at $5.5 billion in new funding round (Bloomberg)

Radical Ventures portfolio company Cohere has successfully completed its Series D funding round, securing $500 million led by PSP Investments alongside a global set of financial and strategic investors including Nvidia, AMD, Magnetar, Prosperity7, Fujitsu, EDC, Oracle, Cisco and Salesforce. Specializing in large language models designed for business applications, Cohere’s AI technology supports text analysis and generation, enhancing efficiency and productivity for hundreds of corporate clients across diverse industries. Recently, the company launched Rerank 3 Nimble, a new model that accelerates enterprise search capabilities. This latest funding round positions Cohere at a valuation of $5.5 billion, establishing it as one of the world’s most valuable AI startups.


These are the 10 AI Startups to Watch in 2024 (Bloomberg)

Bloomberg released its second annual list of the top AI startups to watch in 2024. Included in this lineup is Radical Ventures portfolio company Cohere, recognized for developing large language models tailored for corporate clients, including notable firms such as Salesforce and Accenture. Another Radical Ventures portfolio company, Covariant, earned recognition for launching RFM-1 this year, described as the first multimodal robotics foundation model. RFM-1 is akin to a large language model but is designed specifically for building software for intelligent robots.


Three ways AI is changing the 2024 Olympics for athletes and fans (Nature)

AI is making its mark on the 2024 Paris Olympics, enhancing experiences for both athletes and fans. AthleteGPT, an AI chatbot, will assist athletes with information through the Athlete365 app. Intel’s 3D athlete tracking (3DAT) technology will analyze biomechanics to improve performance. AI will also enhance broadcasting by providing real-time statistics and personalized highlights, enriching the viewer experience. While AI’s use in refereeing is still evolving, it promises to bring more precision and transparency to judging.


The dawn of AI in agriculture is harvesting the future (Forbes)

AI is transforming farming, using soil-condition and weather-pattern data to help farmers make informed decisions. Agricultural use cases for AI include enhanced crop monitoring, plant disease diagnosis from images, and predictive analytics that forecast weather patterns and pest invasions. Companies have developed platforms that automate inspection and monitoring, covering invasive species detection, forage productivity, and livestock health. By optimizing resource use and reducing waste, AI has the potential to promote sustainable farming that supports both higher yields and better environmental stewardship.


Robotic control via embodied chain-of-thought reasoning (UC Berkeley/University of Warsaw/Stanford University)

Researchers have introduced "Embodied Chain-of-Thought Reasoning" (ECoT) for vision-language-action models (VLAs). ECoT improves robot decision-making by having the model reason about the task, its sub-tasks, and the environment before acting. The approach trains VLAs on robot data annotated with synthetically generated reasoning steps. In experiments, ECoT increased the absolute task success rate by 28% on challenging generalization tasks and made the policy easier to debug and to correct through human interaction. The researchers suggest this could improve how robots generalize and perform in complex, novel situations.

Radical Reads is edited by Ebin Tomy.