One of the most powerful examples of the disruptive potential of large language models is software engineering. Few applications of LLMs have demonstrated stronger product/market fit, and the success of GitHub’s Copilot has shown just how big the market opportunity is here. That said, software development today remains slow, expensive, and error-prone as the “AI Coding” space is largely defined by one interaction model: code completion, with AI providing suggestions as developers type in their editor.

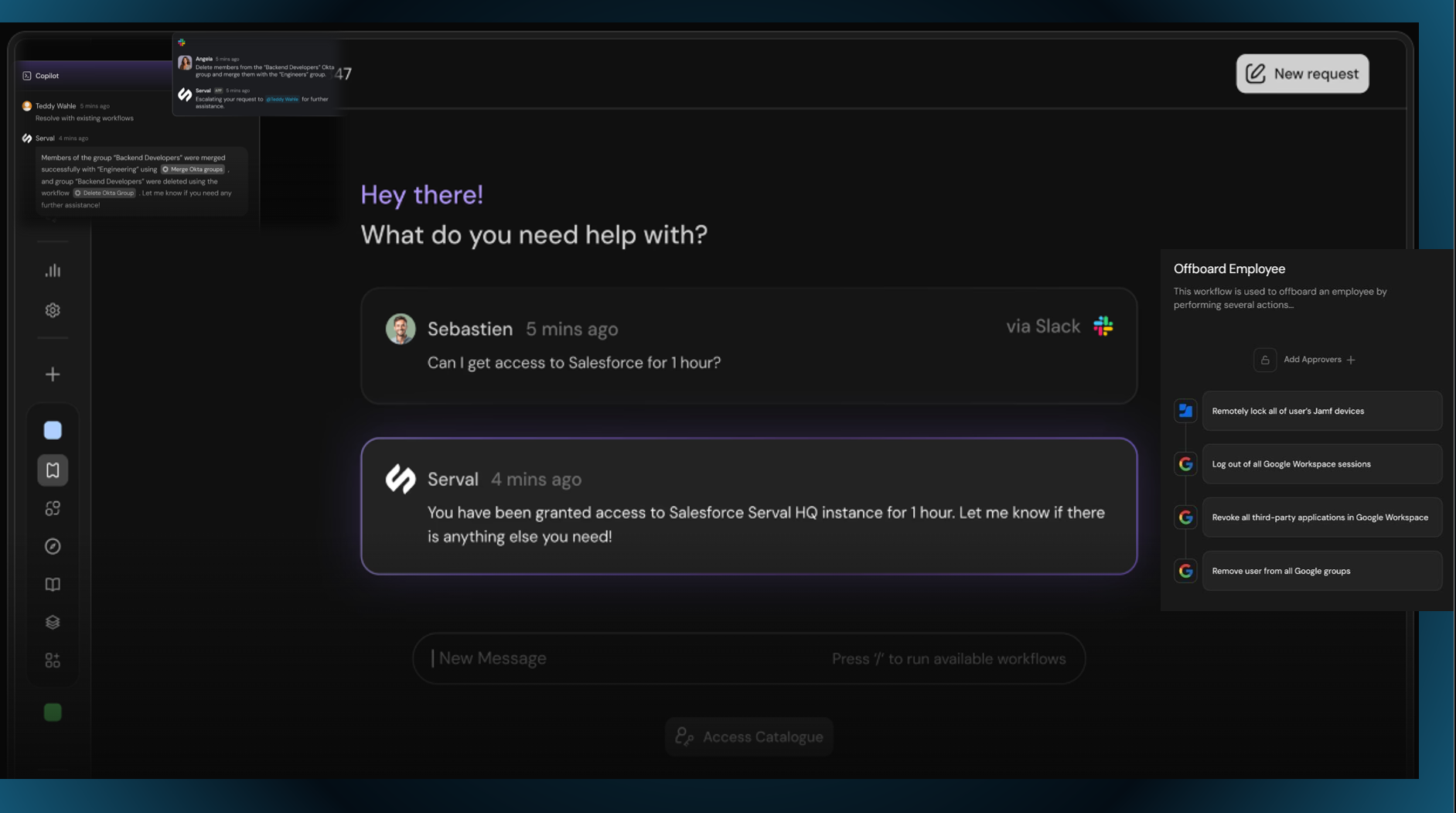

But what does the next generation of software development look like? Is it possible to build a platform that completes not just lines of code, but full repository-level tasks, ultimately acting as an autonomous agent for software development? This is the vision behind this month’s launch of Solver, an AI agent that completes software development tasks on its own, becoming a true AI member of any software development team.

Built by Radical portfolio company Laredo Labs, the Solver development platform is trained on both code and the work it took to produce it, drawing on the complete histories of over one hundred million software projects, one of the most comprehensive software engineering datasets in existence today.

The team behind Laredo Labs includes CEO and co-founder Mark Gabel, former Chief Scientist at Viv Labs (acquired by Samsung), who wrote one of the first papers to apply “natural language modeling” to computer code, and co-founder Daniel Lord, a former platform engineer at Siri (acquired by Apple). The team has decades of experience crafting deployment-ready code, including the Viv software stack, which shipped across the Samsung ecosystem.

The Laredo Labs team has taken decades of software engineering experience and trained Solver to work just like they do. In other words, they have built an AI agent that’s ready to advance the field of software development.

To learn more about Solver, check out the company’s blog post.

AI News This Week

-

Software from Toronto helps power the AI chip boom (The Logic)

As companies seek to break Nvidia’s dominance in the AI chip market, they are looking to a highly skilled pool of talent in Toronto. The city has become a critical hub for software development in the booming AI chip industry, with tech giants such as Nvidia, AMD, Intel, and AWS, along with startups like Radical Ventures portfolio company Untether AI, leveraging the city’s software engineering talent to make their semiconductors more competitive. Untether AI specializes in power-efficient semiconductors for deep-learning systems, with more than half of its team focused on software.

-

ALS stole his voice. AI retrieved it. (The New York Times)

Casey Harrell, who lost his voice to ALS, has regained it through an experimental combination of brain implants and AI. Surgeons implanted electrodes that link his brain to a computer, enabling an AI system to interpret his brain signals and convert them into words, even mimicking his pre-ALS voice. Over eight months, Harrell used nearly 6,000 words with 97.5% accuracy, surpassing previous technologies. The system has not only restored his ability to communicate but also revived personal connections. The technology offers hope for people with speech impairments and signals a promising future for brain-computer interfaces in transforming human communication.

-

AI could actually change the gaming industry (Financial Times)

AI is reshaping the gaming industry by reducing development costs and accelerating production. Companies are increasingly leveraging AI for animation, 3D backgrounds, and gameplay testing. Artificial Agency, a Radical Ventures portfolio company, recently raised $16 million to advance game development with its generative AI-powered behaviour engine, which enables unscripted, dynamic gameplay for a more immersive experience. These innovations are poised to transform the $225 billion gaming industry, making game creation faster and more efficient and the resulting games more immersive.

-

AI scientists are producing new theories of how the brain learns (The Economist)

Researchers are exploring new theories of how the brain learns, with the aim of advancing AI. Building on the work of Geoffrey Hinton, who pioneered the use of neural networks to create today’s modern AI architecture, researchers at Oxford have developed a “prospective configuration” system that closely mimics human learning. While still in the experimental phase, these kinds of emerging theories may hold the key to better understanding how the brain works while furthering AI innovation.

-

Research: Analysis and insights from holistic evaluation on video foundation models (Twelve Labs)

Unlike language or image foundation models, many video foundation models are evaluated with differing parameters (such as sampling rate, number of frames, pre-training steps, etc.), making fair and robust comparisons challenging. Radical Ventures portfolio company Twelve Labs designed an evaluation framework for measuring two core capabilities of video comprehension: appearance and motion understanding. Their findings reveal that existing video foundation models face limitations. As an alternative, the company introduced TWLV-I, a new video foundation model that constructs robust visual representations for both motion- and appearance-based videos. Trained exclusively on publicly available datasets, TWLV-I demonstrates notable performance across both appearance and motion-centric action recognition benchmark datasets. It achieves competitive performance on various video-centric tasks, including temporal action localization, spatiotemporal action localization, and temporal action segmentation. This multifaceted proficiency highlights TWLV-I’s spatial and temporal understanding capabilities.

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)