Across nearly every imaginable industry, business as usual increasingly means using artificial intelligence technologies to get the job done. This is according to McKinsey & Company’s 2022 Technology Trends Outlook, which lists applied AI as one of the most significant technology trends unfolding today.

The analysis defines applied AI as intelligent applications used “to solve classification, prediction, and control problems to automate, add, or augment real-world business use cases.” Since 2020, AI adoption has risen 50% and private investment has doubled. But is AI adoption translating into performance results? According to McKinsey, leaders reporting AI adoption have demonstrated stronger financial performance, with a 2.1x increase in revenue and 2.5x total return to shareholders.

As with electricity and other transformative horizontal technologies, early adoption of AI has tended to concentrate in industries where applying it is low cost. Early industrial adoption was led by technology-centric industries using AI for product and service development, service operations, and marketing and sales functions. Analytics and statistical tools are often not a new budget item in these areas, so AI is seen as a significant improvement over existing processes rather than something completely new. Common use cases include recommending products at purchase, using various sensor inputs to perform tasks (e.g. checkout for retail), and detecting fraudulent behaviour.

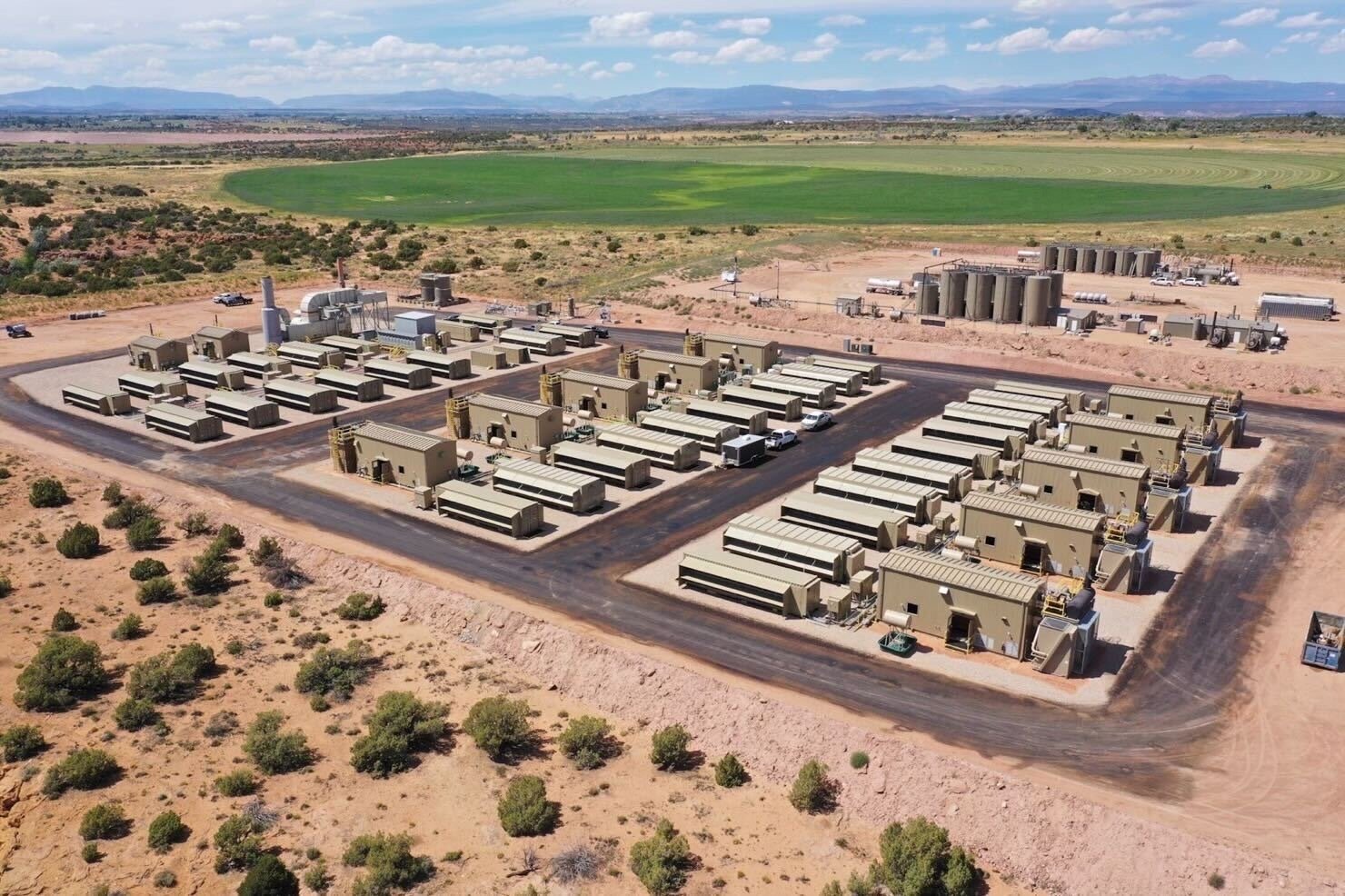

In recent years, AI adoption has accelerated to encompass every industry. In agriculture, AI is enabling process optimization through capabilities like productivity forecasting (e.g. Radical portfolio company ClimateAi) and driverless tractor applications. In construction, autonomous machinery and robots, computer-vision-enhanced safety procedures, and 3-D design optimization software are being used to improve efficiency (e.g. Radical portfolio company Promise Robotics). And in healthcare and life sciences, AI has already helped solve one of the grand challenges of biology, while other applications enhance services, processes, and diagnostic tools (e.g. Radical portfolio companies Signal 1, Ubenwa, Synex, and Unlearn).

In the future, AI will be infused in most software to the point that ‘AI’ and ‘software’ may be synonymous. Another way to think about this is that AI is eating software, and all companies will be AI companies. With AI capabilities, companies in all industries can transform themselves into ‘smart’ organizations, in which their data enables their software to continually learn and improve. As use cases continue to expand and AI platform technologies (e.g. Radical portfolio company Cohere) become readily available, AI is becoming firmly entrenched in the business mainstream.

AI News This Week

- I Was There When: AI helped create a vaccine (MIT Technology Review)

MIT Technology Review’s In Machines We Trust series shares stories of watershed moments in AI as told by the people who witnessed them. This episode spotlights Dave Johnson’s experience as the chief data and AI officer at Moderna. The company used AI to review the data of hundreds of parallel experiments to start predicting outcomes and build better experiments. It took ten years to build the engine that produced a vaccine candidate within 42 days. Dave notes, “What’s fundamentally different about the artificial intelligence approach from the typical pharmaceutical development is it’s much more of a design approach. We’re saying we know what we want to do and then we’re trying to design the right information molecule, the right protein, that will then have that effect in the body. And if all of that looks good, then you’re finally moving off to human testing and you go through several different phases of clinical trials.”

- Why DeepMind is sending AI humanoids to soccer camp (Wired)

Learning the fundamentals of soccer could one day help robots move around our world in more natural, human ways. Combining control at the level of joint torques with longer-term, goal-directed behaviour is an enormous challenge for humanoid robotic agents. The agents’ movements are already constrained by their humanlike bodies and joints that bend only in certain ways, and being exposed to data from real humans constrains them further. To learn soccer, an AI must re-create everything human players do, even the things we do not consciously think about. DeepMind researchers are working to strike the “subtle balance” between teaching the AI to do things the way humans do them and giving it enough freedom to discover its own solutions.

- Research: AI-enabled detection and assessment of Parkinson’s disease using nocturnal breathing signals (Nature)

There are currently no effective biomarkers for diagnosing Parkinson’s disease (PD) or tracking its progression. Researchers have developed an AI model to detect PD and track its progression from nocturnal breathing signals. The model can assess PD in the home setting by extracting breathing from radio waves that bounce off a person’s body during sleep.

- The Animal Translators (New York Times – subscription required)

Earlier this month, we covered The Earth Species Project (ESP) and Project CETI, which aim to decode animal communication via machine learning. The New York Times has now published a deep dive on the scientists behind this kind of work. The projects range from identifying when mice are stressed based on their squeaks to understanding why fruit bats ‘shout’, creating a comprehensive catalogue of crow calls, mapping the syntax of sperm whales, and even building technologies that allow humans to talk back. Among humans, advances in natural language processing have given rise to voice assistants that recognize speech, transcription software that converts speech to text, and digital tools that translate between languages. Beyond deciphering the syntax and semantics of animal communication, the ambitious projects aiming for two-way dialogue will also have to establish whether animals are willing to communicate, and whether we experience the world similarly enough to even begin passing ideas through language.

- AI-guided fish harvesting is more humane and less wasteful (TechCrunch)

Fish harvesting is currently low-tech and focused on the catching portion, rather than the slaughtering portion, of the process. A robotic system has been created to kill fish with surgical-level accuracy. A computer vision system identifies the species and shape of the fish it is holding, locates the brain and other important parts, and kills the fish quickly and reliably. The delicate process is modelled on a manual technique called ike jime, which typically requires a skilled practitioner, making it difficult to industrialize. Ike jime is increasingly popular among upscale seafood restaurants both within and outside Japan, where it was developed. The technique is more humane and results in better-preserved meat that can keep days or weeks longer than meat from suffocated fish.

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)