IT departments face a fundamental bottleneck: as companies scale, support demand grows faster than headcount. Consider the tasks involved in provisioning a new employee: creating accounts across Okta, Google Workspace, Slack, GitHub, and dozens of other systems; assigning role-based permissions; provisioning hardware; and ensuring compliance. In legacy platforms, this “simple” workflow requires extensive manual configuration: defining each step, building conditional logic, handling errors, and maintaining integrations as APIs evolve. A single broken integration halts the entire automation.

The global IT Service Management market exceeds $250 billion, dominated by legacy platforms built as systems of record rather than systems of action. ServiceNow alone generates $12 billion in annual revenue, but implementations can require 6-12 months and armies of consultants to configure workflows.

For every dollar spent on ITSM software, organizations spend multiples more on people configuring workflows, maintaining brittle integrations, and manually resolving tickets. Most enterprises still automate only 20-35% of requests despite massive investment. This represents one of the largest automation opportunities in enterprise software.

The first generation of AI-powered IT service management startups validated enterprise demand but mostly layered conversational interfaces on top of existing systems. They improved the front-end experience, with better chatbots and smarter routing, without removing the configuration burden that makes automation expensive and fragile.

Addressing this challenge requires AI-native architectures that enable agentic resolution, where AI understands natural language requests, orchestrates multi-step workflows across systems, executes actions within guardrails, and continuously learns from results.

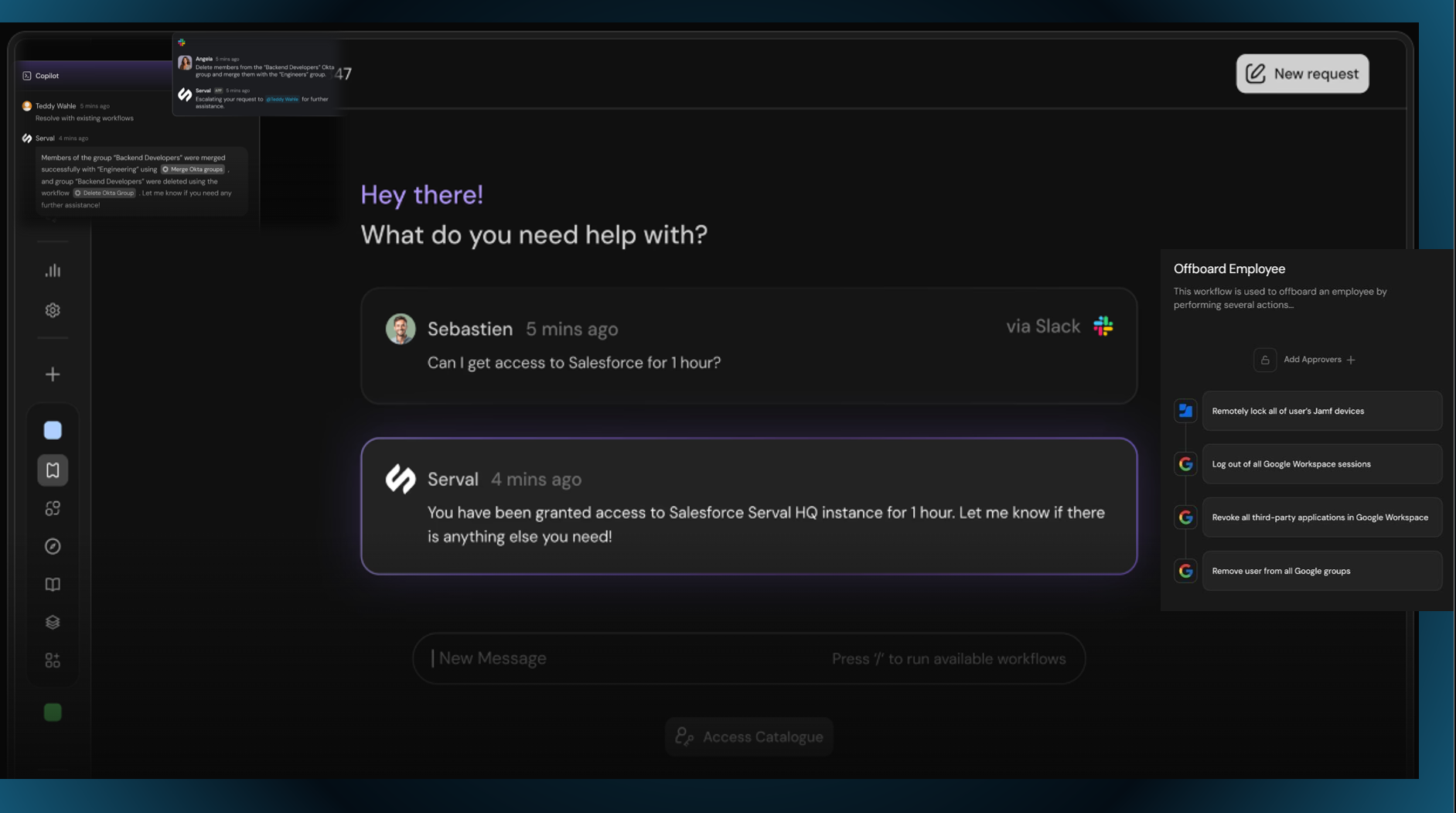

AI-native companies like Serval are fundamentally reimagining ITSM architecture. Serval, a Radical Ventures portfolio company, built its platform from the ground up around two cooperating AI agents. One acts as a developer, generating automation scripts and workflows from natural language prompts. The other serves as a help desk assistant, executing those automations within IT-defined guardrails. This dual-agent architecture enables deployment in minutes rather than months, with automation capabilities that expand through an embedded insights engine that observes patterns and suggests new workflows.
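To make the dual-agent idea concrete, here is a minimal sketch of the pattern in Python. It is not Serval's implementation: every name in it (`Workflow`, `Guardrails`, `build_workflow`, `run_workflow`) is hypothetical, the "developer" agent is replaced by a simple keyword match where a real system would call a model, and no real Okta, Slack, or GitHub API is called.

```python
from dataclasses import dataclass, field


@dataclass
class Workflow:
    name: str
    steps: list[str]  # each step is "system:action", e.g. "slack:invite"


@dataclass
class Guardrails:
    # Hypothetical policy set by IT: which systems the assistant may touch,
    # and which individual steps must wait for a human sign-off.
    allowed_systems: set[str]
    needs_approval: set[str] = field(default_factory=set)


def build_workflow(prompt: str) -> Workflow:
    """Stand-in for the 'developer' agent.

    A real system would generate the workflow with a model; this stub
    only recognises onboarding requests so the example stays runnable.
    """
    if "onboard" in prompt.lower():
        return Workflow(
            name="onboard_employee",
            steps=["okta:create_account", "slack:invite", "github:add_to_org"],
        )
    raise ValueError(f"no workflow template for request: {prompt!r}")


def run_workflow(workflow: Workflow, guardrails: Guardrails) -> list[str]:
    """Stand-in for the 'help desk' agent: checks each step against the guardrails."""
    log = []
    for step in workflow.steps:
        system, _, _action = step.partition(":")
        if system not in guardrails.allowed_systems:
            log.append(f"blocked: {step}")
        elif step in guardrails.needs_approval:
            log.append(f"awaiting approval: {step}")
        else:
            # Nothing is actually executed here; a real agent would call the system.
            log.append(f"executed: {step}")
    return log


guardrails = Guardrails(
    allowed_systems={"okta", "slack"},
    needs_approval={"okta:create_account"},
)
for line in run_workflow(build_workflow("Onboard a new engineer"), guardrails):
    print(line)
```

With these assumed guardrails, the Okta step waits for approval, the Slack step runs, and the GitHub step is blocked because GitHub is not on the allowed list. The point of the split is that the agent writing automations and the agent running them are separate, so IT policy is enforced at execution time regardless of what was generated.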

Results validate the approach: Verkada achieved 90% faster ticket resolution, and Perplexity automates over 50% of requests. Serval customers have also rapidly expanded beyond IT into HR, Finance, Legal, and Security. Organizations now use Serval to automate employee lifecycle management, procurement, contract approvals, and compliance reporting.

AI-driven workflow automation is becoming foundational to how companies operate, and Serval is positioned at the forefront of this transformation.

Radical Talks: Rare Earth Magnets and the Future of AI Infrastructure

John Maslin, CEO and Co-Founder of Vulcan Elements, joins Radical Partner Molly Welch to unpack the physical infrastructure behind AI.

While chips dominate most conversations around the AI supply chain, John explains why rare earth magnets are a foundational dependency across data centers, semiconductor manufacturing, robotics, EVs, and defence systems, and why the current market concentration poses real economic and national security risk.

Drawing on his work rebuilding domestic magnet manufacturing, the discussion explores what it takes to reshore critical industrial capacity, why execution matters more than policy alone, and how physical constraints will increasingly shape AI at scale.

Listen to the podcast on Spotify, Apple Podcasts, or YouTube.

AI News This Week

- Can A.I. Generate New Ideas? (NYT)

AI systems are accelerating research in mathematics, biology, and chemistry, though debate continues over whether they generate truly novel insights. AI is proving invaluable as a research tool, with experts noting that AI systems can suggest hypotheses, allowing teams to focus on five experiments instead of 50. These systems analyze and store far more information than the human brain, delivering insights experts may have overlooked.

- Aspect Biosystems Expands Partnership with Novo Nordisk to Develop Diabetes Treatment (Globe and Mail)

Radical Ventures portfolio company Aspect Biosystems is acquiring the pharma giant’s stem cell-derived islet cell and hypoimmune cell engineering technologies to lead development of curative diabetes therapies. Aspect’s AI-powered system produces synthetic tissues from living cells and hydrogel polymers, designed to reset the functioning of damaged or malfunctioning organs (such as the pancreas in the case of diabetes) when implanted. Since 2023, Aspect and Novo Nordisk have collaborated to develop cellular medicines designed to replace, repair, or supplement biological functions, delivering disease-modifying therapies. This new phase of the partnership builds on the momentum achieved in the existing collaboration.

- Get Ready for the AI Ad-pocalypse (Verge)

According to a 2025 Interactive Advertising Bureau study, 90% of advertisers are using or planning to use generative AI for video ads, with projections indicating AI will appear in 40% of all ads by 2026. AI drastically reduces production costs. One NBA Finals commercial created using Google’s Veo 3 cost just $2,000 and took two days to produce, compared to traditional campaigns that cost millions.

- Chinese AI Models are Popular. But Can They Make Money? (Economist)

China has become the global leader in open-source AI models, with downloads on Hugging Face surpassing those of American alternatives. Alibaba’s Qwen recently dethroned Meta’s Llama as the platform’s most popular model. Despite this technical prowess, monetization remains elusive. Challenges include China’s overcrowded domestic market and limited enterprise spending. Beyond near-term revenue, the true test is whether Chinese companies can convert open-source ubiquity into technical lock-in that drives long-term value and revenue.

- Research: On the Slow Death of Scaling (Adaption)

This essay challenges the decade-long assumption that scaling compute always improves AI performance. It argues that smaller models like Llama-3 8B now routinely outperform far larger ones like Falcon 180B, suggesting the relationship between compute and performance is increasingly uncertain. The author contends that algorithmic improvements, including instruction finetuning, model distillation, and chain-of-thought reasoning, drive progress more effectively than adding parameters. She argues that future innovation will likely come from inference-time compute, synthetic data optimization, and improved interfaces rather than from simply training larger models.

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)