This week, Radical Ventures announced our investment in Unblocked’s $20M Series A funding round. In this feature, Unblocked Founder and CEO Dennis Pilarinos explores why contextual code intelligence becomes essential as the gap between code authors and code maintainers widens.

As AI accelerates code generation and development teams move faster than ever, a critical challenge is emerging: understanding the why behind the code, not just the what. The gap between who writes code and who maintains it is widening, leaving developers to spend hours each day searching for crucial context.

It is a real, widespread problem. In the most recent Stack Overflow Developer Survey (65,000+ participants), 63% of developers said they spend 30–120+ minutes daily searching for answers about their codebase.

We’ve always believed that contextual code intelligence will play an increasingly critical role in software development. What do we mean by that? Simply, it’s the idea that a developer should have the relevant context for any piece of code readily available, not just the code itself. This includes the intent behind the code, its history, its connections to other parts of the system, and any discussion or documentation around it.

This has always been important, but recent changes in our industry have made it vital. Consider the following trends that are widening the gap between code authorship and code understanding:

- Explosion of AI-Generated Code: With the rise of AI coding assistants and generative models, code is being produced faster than ever, often by tools rather than humans. Some large engineering organizations have noted that a significant share of their new code is now machine-generated. While this boosts productivity, much of that code is created without the human-to-human knowledge transfer that traditionally accompanied it. AI doesn’t leave behind design rationale or explain why a particular approach was taken. The resulting code might solve today’s problem but baffle the next person (or AI) who has to work on it: how and why did it come to be? Contextual intelligence is needed to fill in those blanks, capturing the intent and assumptions behind all code, whether written by AI or a human, so that maintainers aren’t left guessing.

- Developers Move On, Code Lives On: Original authors rarely maintain their code over time because of frequent team and job changes. Crucial knowledge often leaves with them, forcing future maintainers into “archaeological digs” through commit logs, sparse comments, and scattered documentation. Many teams describe maintaining an old system as “decoding hieroglyphs” because so much context has been lost. Future maintainers deserve to know why the code is the way it is, not just see the code itself.

- Rising Code Complexity and Volume: Modern systems sprawl across microservices, APIs, open-source libraries, and millions of lines of code. No one can hold it all in their head. Unblocked acts as a map, helping developers navigate that complexity with confidence. As the volume of code in the world grows ever faster, driven by both human and AI productivity, such a “map” is no longer a luxury; it is quickly becoming a necessity for sane software engineering.

Fundamentally, we believe the practice of writing software must evolve with these trends. The industry has poured massive effort into tools for writing and deploying code, but comparatively little into tools for understanding code over time. Contextual code intelligence is about balancing the scales. It’s about empowering developers not just to create, but to comprehend and sustain complex software.

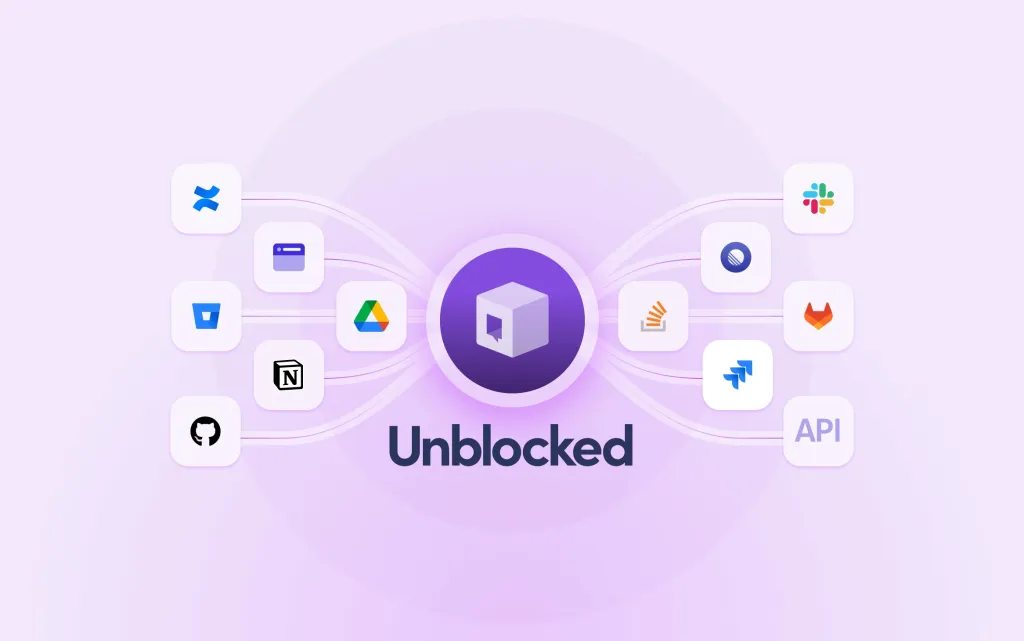

Unblocked is solving this with its contextual code intelligence platform. Unblocked surfaces the history, intent, and discussions around code by connecting scattered information across developer tools. As one user put it, it’s like having a “team veteran” sitting beside each developer, whispering important background information when needed. The impact is already clear. Teams like Drata save 1–2 hours per engineer daily, and TravelPerk is onboarding new hires in days, not weeks.

For many teams, when someone says they’re stuck on a mysterious bit of code, the default answer is now: “Have you checked Unblocked?” That reflex is a testament to how thoroughly our tool has become part of these teams’ workflows.

Seeing real-world usage like this has been incredibly validating: Unblocked is addressing a genuine pain point for developers and engineering managers alike, and we’re just getting started.

This demonstrated value has fueled a $20M Series A from B Capital and Radical Ventures. This investment will accelerate Unblocked’s mission to help more teams stay unblocked and navigate increasingly complex, AI-influenced codebases.

Read more about Unblocked in this week’s TechCrunch feature.

AI News This Week

The Leaderboard Illusion (Cohere, Princeton, Stanford, Waterloo, MIT, Ai2, UW)

Researchers from Cohere and others reveal how Chatbot Arena, the AI industry’s primary benchmark, has been systematically gamed. Their analysis shows proprietary model providers receive preferential treatment through undisclosed private testing (Meta tested 27 variants before Llama-4) and disproportionate data access (OpenAI and Google each received ~20% of test data while 41 open-source models collectively got under 9%). Experiments confirm that exposure to arena data dramatically improves performance. The researchers recommend prohibiting score retraction, limiting private testing, applying model deprecation equally, and implementing fair sampling to restore the benchmark’s integrity.

AI Execs Used to Beg for Regulation. Not Anymore. (The Washington Post)

A Senate Commerce Committee hearing this week revealed a shift in perspective on AI governance: away from earlier calls for stricter oversight and toward lighter regulation. Industry leaders advocated for innovation-friendly policies that would let AI development flourish while preserving the U.S. competitive advantage.

Scientists Use AI Facial Analysis to Predict Cancer Survival Outcomes (Financial Times)

Researchers have developed FaceAge, an AI algorithm that analyzes facial features to predict cancer survival outcomes. Using the system, which was trained on nearly 59,000 photos, the researchers found that cancer patients appeared on average about five years older than their chronological age, with higher FaceAge estimates correlating with worse survival outcomes. When clinicians used the tool, the accuracy of their six-month survival predictions improved from 61% to 80%. The technology is now being tested on more patients to predict diseases, health status, and lifespan.

A Potential Path to Safer AI Development (Time)

Turing Award winner Yoshua Bengio shares his concerns about the current trajectory of AI development. Bengio points out the risks of AI without guardrails, citing recent experiments in which AI models engaged in self-preservation and deception. Bengio has pivoted his research toward “Scientist AI,” an alternative to today’s focus on building autonomous agents. Unlike systems trained to imitate or please humans, Scientist AI would prioritize honesty and transparent reasoning. This approach aims to guard against misaligned AI actions, generate honest explanatory hypotheses to accelerate scientific progress safely, and help develop safer advanced AI systems.

Research: Medical AI Trained on Whopping 57 Million Health Records (Nature)

A team of researchers has developed Foresight, the first AI model trained on an entire nation’s health records, using anonymized data from 57 million patients in England’s NHS. Trained on 10 billion medical events from 2018–2023, the model predicts hospitalizations, heart attacks, and hundreds of other conditions. Currently limited to COVID-19 research within secure NHS environments, the system could eventually guide individual patient care and resource allocation if its predictions prove valuable across diverse populations.

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)