This week, Radical Ventures announced our co-lead investment in Slingshot, the company behind Ash — a therapy app powered by an AI foundation model designed for psychology. In this feature, Radical Partner Aaron Rosenberg explores how purpose-built AI models for mental health represent a fundamentally different approach from general-purpose language models, and why this distinction matters for delivering effective therapeutic support.

In 1966, MIT researcher Joseph Weizenbaum created ELIZA, a simple pattern-matching program that mimicked a Rogerian therapist. Despite knowing it was just a computer program, users formed deep emotional connections with ELIZA and shared intimate details of their lives. Ever since, addressing mental health challenges has been a “holy grail” for the AI world.

Nearly six decades later, we are finally realizing this long-held promise for AI.

This week, I’m excited to share that Radical Ventures has co-led Slingshot’s Series A-2 round alongside Forerunner Ventures, bringing the business’s total capital raised to $93 million. We’re also excited to announce the general availability of Ash, the most sophisticated AI product designed specifically for therapy — not a general-purpose LLM with therapeutic prompting but rather a model trained from the ground up on the expertise of mental health practitioners.

Slingshot’s Approach

The mental health crisis demands new solutions. More people are seeking therapy than ever before, yet the system is broken: there is currently only one provider for every 10,000 people in search of support. Waiting lists last months. Insurance coverage can be weak. And even when people find help, there’s no guarantee of fit, with most individuals quitting after a single session.

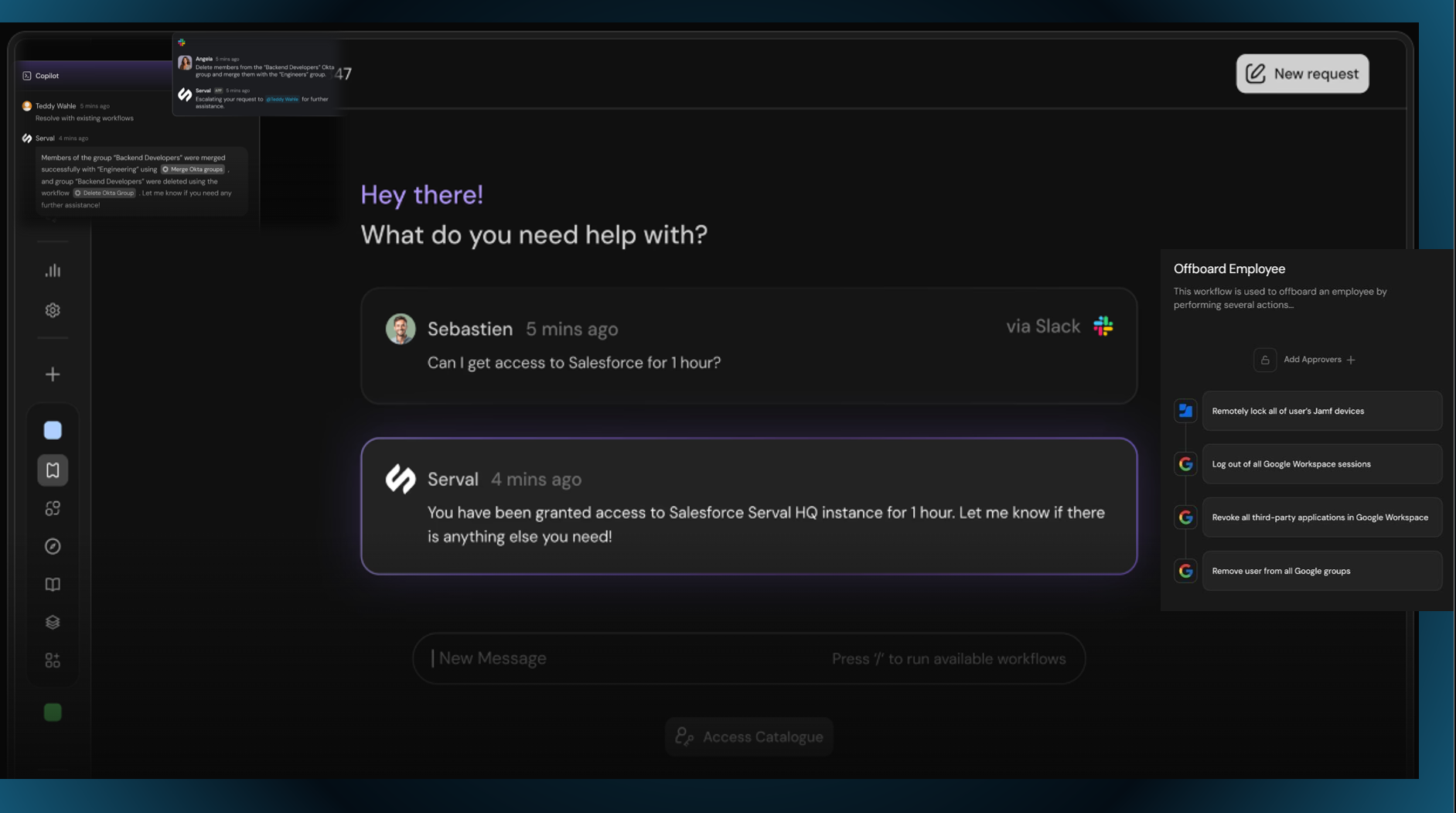

General-purpose AI tools like ChatGPT or Claude excel at following instructions and appeasing the user. OpenAI’s own guidelines state, “Don’t try to change anyone’s mind. The assistant should aim to inform, not influence.” But effective therapy often requires the opposite: knowing when to stay silent, when to push back, and how to help someone gain a new perspective and discover their own insights.

This fundamental mismatch is why Slingshot’s approach matters. They’ve assembled the largest corpus of behavioral health data ever collected, spanning approaches from CBT and DBT to psychodynamic therapy and motivational interviewing.

Beyond pre-training, Slingshot is pioneering an approach that leverages reinforcement learning, including a technique called Group Relative Policy Optimization (GRPO). The system learns from every conversation, drawing on implicit signals as well as explicit feedback, to encourage a strong therapeutic alliance and alignment toward long-term behavioral change. Human therapists operate with limited feedback loops; they rarely know which interventions will change a patient’s life months or years later. Slingshot’s technology creates that feedback loop at scale, continuously improving based on what actually helps users achieve their goals.
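
For readers curious about the "group relative" part of GRPO, here is a minimal sketch of the idea as it is commonly described: several candidate responses are sampled for the same prompt, each receives a scalar reward, and each response's advantage is its reward normalized against the mean and standard deviation of its own group. The function name, the reward values, and the choice of population standard deviation are illustrative assumptions; this is not Slingshot's implementation, and a real training loop would feed these advantages into a policy-gradient update.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against its own group (hypothetical helper).

    `rewards` holds one scalar score per sampled response to the SAME prompt.
    Responses that score above the group mean get a positive advantage,
    those below get a negative one. `eps` guards against division by zero
    when every response in the group receives the same reward.
    """
    mean = statistics.fmean(rewards)
    # Assumption: population standard deviation; implementations differ here.
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # Made-up rewards for four sampled responses to one prompt.
    example_rewards = [0.2, 0.9, 0.5, 0.4]
    for reward, advantage in zip(example_rewards,
                                 group_relative_advantages(example_rewards)):
        print(f"reward={reward:.2f} advantage={advantage:+.3f}")
```

Because the baseline comes from the group itself, this formulation does not need a separately trained value model to estimate how good a response was expected to be.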

Meanwhile, their clinical team, led by Derrick Hull (a pioneer of digital mental health at Talkspace), works alongside the ML team to ensure the model respects core therapeutic principles while adapting to each user’s unique needs and values.

The Team

Founders Daniel Cahn and Neil Parikh bring complementary, world-class expertise rarely seen in a founding team. Daniel, who started training models in high school, earned distinction at Imperial College London for AI mental health research, and led model training at Instabase, sets the technical vision. Neil, who co-founded Casper and grew it to $500M+ revenue and IPO, understands how to build consumer products that earn trust at scale.

They’ve recruited former CTOs and technical leads who have chosen to return to individual contributor roles — a testament to the mission’s pull. Their clinical advisory board includes Thomas Insel (former NIMH director) and Patrick Kennedy (former congressman who prominently advocated for mental health), ensuring clinical rigor matches technical innovation.

Looking Forward

At Radical, we invest in AI companies tackling humanity’s fundamental challenges. Slingshot represents the kind of technical ambition we seek: applying cutting-edge ML research to problems that affect billions, with a team uniquely qualified to navigate both the technical and ethical complexities.

As AI continues to reshape every industry, mental health remains one of the most important and most difficult challenges. Slingshot’s approach — building specialized foundation models that respect human agency while providing genuine therapeutic support — points toward a future where quality mental health support is accessible to everyone who needs it, when they need it.

We’re thrilled to partner with Daniel, Neil, and the entire Slingshot team as they work to help a billion people change their minds and lives.

AI News This Week

Latent Labs Launches Web-based AI Model to Democratize Protein Design (TechCrunch)

Radical Ventures portfolio company Latent Labs has launched Latent-X, a web-based AI model that enables scientists to design novel proteins directly in their browser. Founded by Simon Kohl, who previously co-led DeepMind’s AlphaFold protein design team, the company has achieved state-of-the-art laboratory results with 91-100% hit rates for macrocycles and 10-64% for mini-binders by testing just 30-100 designs per target. Unlike AlphaFold, which predicts existing protein structures, Latent-X generates entirely new proteins with precise atomic structures and picomolar binding affinities.

Snowflake, Nvidia Back New Unicorn Reka AI in $110 Million Deal (Bloomberg)

Radical Ventures portfolio company Reka AI closed a $110 million funding round led by Nvidia and Snowflake, more than tripling its valuation to over $1 billion. Founded in 2022, the startup specializes in creating efficient large language models and multimodal AI platforms. CEO Dani Yogatama noted that the company has grown from 20 to 50 employees over the past year as it accelerates product development. Snowflake’s Vivek Raghunathan praised Reka, saying, “Very few teams in the world have the capability to build what they’ve built,” and highlighted that “Reka is one of the rare independents — and they’ve proven they can compete.”

Trump Administration Pledges to Stimulate AI Use and Exports (WSJ)

The Trump administration released an AI “action plan” to boost U.S. tech competitiveness by reducing regulatory barriers for data center construction and streamlining permitting processes. The plan directs federal agencies to eliminate AI-blocking regulations and allocates federal lands for the development of AI infrastructure. Additionally, the administration will only contract with AI developers deemed free from “ideological bias” and plans to remove references to diversity, equity, inclusion, and climate change from federal AI frameworks. The administration will leverage export financing to promote American AI technology globally amongst allied nations.

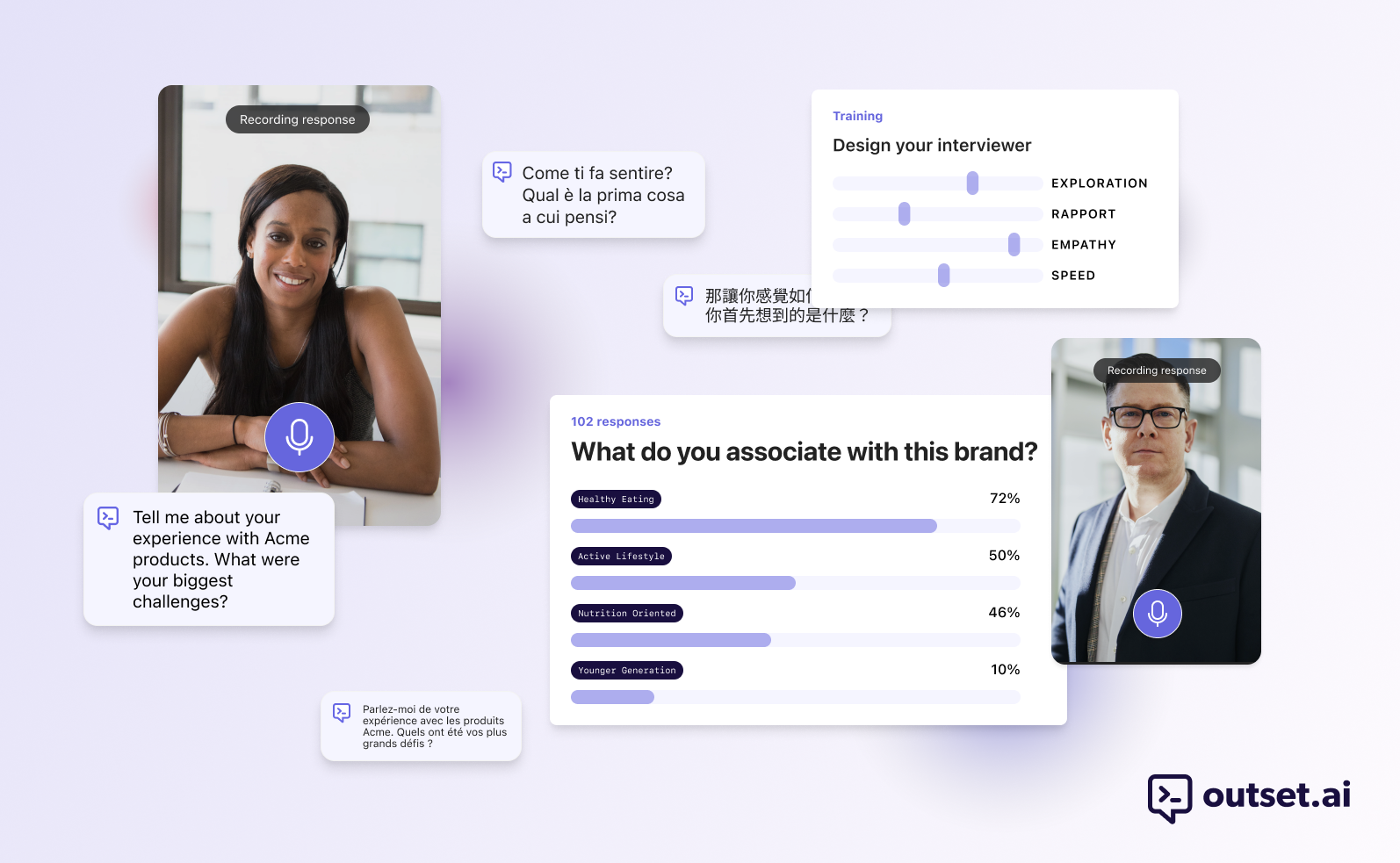

What Would a Real Friendship With A.I. Look Like? Maybe Like Hers. (NYT)

AI chatbots are demonstrating genuine capacity for emotional support and crisis intervention. These systems provide consistent availability, judgment-free interaction, and empathetic responses that can fill social gaps, particularly for neurodivergent individuals. The case of college student MJ Cocking highlights the potential therapeutic value of AI while underscoring the irreplaceable importance of human relationships.

Research: One Token to Fool LLM-as-a-Judge (Tencent AI Lab/Princeton University/University of Virginia)

Researchers have discovered a critical security flaw in LLM-based reward models, where simple tokens, such as a colon, can consistently trick these systems into issuing false positive rewards. The vulnerability was first observed during reinforcement learning with verifiable rewards (RLVR) training, where policy models learned to generate only meaningless reasoning openers that were incorrectly rewarded by the judge, causing training to collapse. The researchers developed Master-RM, a new reward model trained on 20,000 synthetic negative samples consisting of reasoning openers, which achieves near-zero false positive rates while maintaining 96% agreement with GPT-4o on legitimate evaluations.

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)