“A trillion dollars of installed global data center infrastructure will transition from general purpose to accelerated computing as companies race to apply generative AI into every product, service and business process.”

Demand for high-performance chips, the kind required to power AI models and the surge of generative AI that will underpin a massive software replacement cycle, far exceeds available and foreseeable global supply.

The convergence of accelerated compute and powerful large language models is credited with catalyzing this profound moment, in which virtually every company, large and small, is contemplating and planning for how AI will transform its business and industry. Yet chip supply is a global concern (Radical Partner Rob Toews recently wrote an article in Forbes about the geopolitics of AI chips). The most sought-after chips remain highly concentrated in the hands of just a few chip producers, and the hyperscalers and cloud resellers are scrambling for allocation to meet the needs of their compute-hungry internal teams and external customers.

With demand this intense, one would expect the allocation of limited supply to be highly surgical. Yet, in the race for the most power-efficient chips, the market is anything but efficient. Access to the latest chips is reserved for enterprises and start-ups with the deepest pockets, which can commit to large advance orders and afford the high cost of training and serving AI models. These companies are often unsure of their needs or lack insight into how hardware might be optimized for the models they are running. As a consequence, usage of this scarcest resource is often characterized by long run times coupled with costly periods of underutilization. Similarly, cloud providers and resellers offer advance-order discounts to reserve capacity, yet they struggle to find capacity for higher-priced on-demand customers while legacy chips sit unallocated in inventory.

Very few companies are capable of addressing the global compute bottleneck. CentML, which emerged from stealth this week with a founding $3.5 million seed investment from Radical, is one of them. CentML is led by Vector Institute for AI faculty member and University of Toronto Assistant Professor Gennady Pekhimenko, a recognized expert in machine learning compilers and efficient computer systems. Gennady and his team have deep experience working with the largest tech companies, helping them solve complex hardware utilization problems. CentML's software uses AI to help enterprises and model providers better predict the run time of their models under different hardware configurations, monitor system performance to identify pockets of underutilization, and automatically optimize workloads so that customers save costs and bring their products to market faster. CentML's cloud partners benefit from more efficient allocation of their latest chips and can put legacy chips to work, improving the yield on their inventory.

Read more about CentML and Radical’s investment in this VentureBeat feature.

AI News This Week

-

AI Startup Cohere Raises $270 Million in Round Backed by Oracle, Nvidia (Bloomberg)

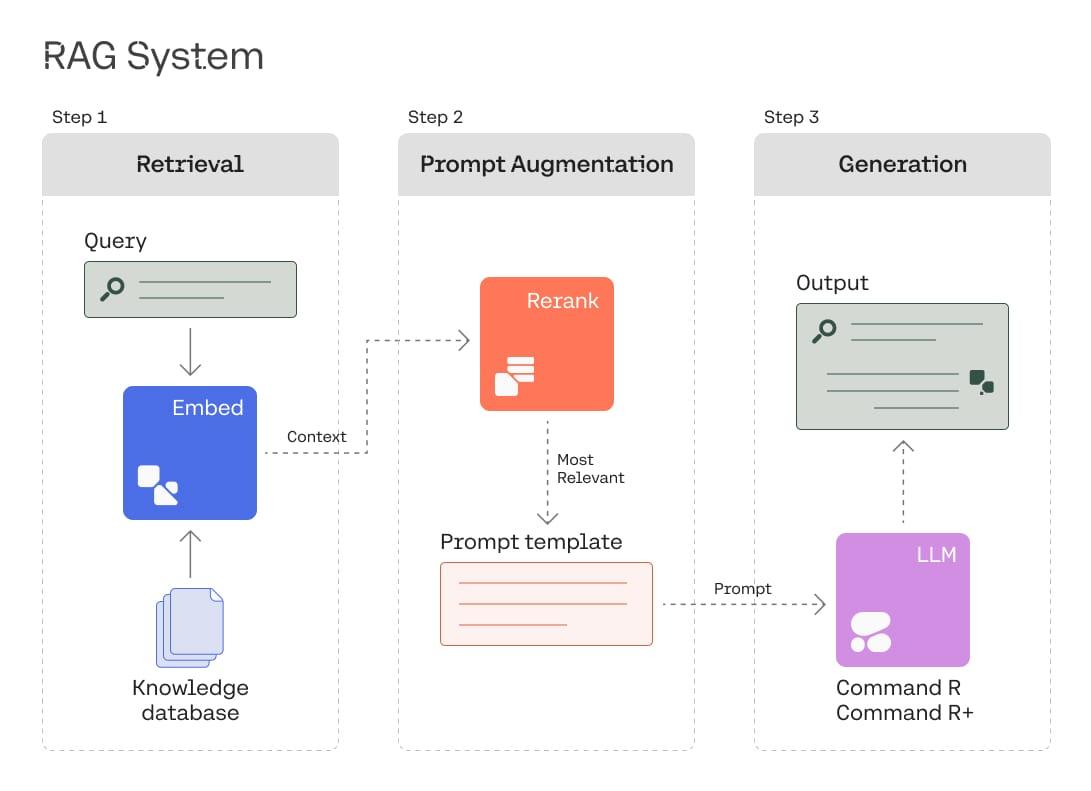

Cohere, a world-leading generative AI company that Radical Ventures helped launch as its founding investor in 2019, raised US$270 million in a funding round with participation from major investors including Oracle, NVIDIA and Salesforce. Cohere develops customizable LLMs that power enterprise software products, including interactive chat, text generation, classification and search. Unlike OpenAI, Cohere is cloud-agnostic, so customers can use its products on any cloud or on-prem. Cohere also addresses concerns about proprietary data raised by other models: customer data is used to fine-tune models that benefit only that customer, rather than a general model that is subsequently sold to competitors, as with other generative AI offerings.

-

Listen: OpenAI VP of Product Peter Welinder and Radical Ventures Partner Rob Toews on AI’s future (Unsupervised Learning)

In an episode of the Unsupervised Learning podcast, Radical Ventures Partner Rob Toews guest hosts with Jacob Effron in a thought-provoking conversation with Peter Welinder, VP of Product and Partnerships at OpenAI. Welinder delves into OpenAI’s overarching strategy, shedding light on its areas of focus as well as the boundaries it has set on what it will and will not build. The discussion extends to the future of open source models and the intriguing question of when we might witness the advent of Artificial General Intelligence (AGI). Welinder speculates that we may have a real chance of achieving superintelligence, characterized by autonomous systems capable of economically valuable human-level work, before 2030.

-

Microsoft to move top AI experts from China to new lab in Canada (Financial Times)

Microsoft will reportedly relocate top AI researchers from Beijing to Vancouver under what is known as the “Vancouver Plan.” The decision reflects efforts to protect talent from poaching by domestic rivals and to mitigate geopolitical tensions, and it also positions Canada as a burgeoning hub for AI innovation. Microsoft Research Asia (MSRA), a vital contributor to China’s AI ecosystem, has been instrumental in nurturing Chinese tech talent.

-

Help make AI that’s not just English based (Cohere For AI)

In a world where more than 7,100 languages are spoken, the lack of multilingual support in most language models is a formidable obstacle to building inclusive products and projects. Cohere’s non-profit research lab, Cohere For AI, is spearheading an ambitious open science initiative called Aya, which aims to create a state-of-the-art multilingual language model. By drawing on contributions from people worldwide, including AI experts from academia, industry, non-profits and independent research, Aya seeks to bridge the language gap and make cutting-edge machine learning research accessible to all. In doing so, Cohere For AI is working to make AI more inclusive and to push the frontiers of language understanding.

-

Research Spotlight: Voyager – An Open-Ended Embodied Agent with Large Language Models (Nvidia/Caltech/UT Austin/Stanford/ASU)

Adding to the autonomous agent space, Voyager is a lifelong learning agent in Minecraft powered by an LLM. The agent continuously explores the world, acquires diverse skills, and makes novel discoveries without human intervention. Voyager proves highly proficient at the game, acquiring 3.3 times more unique items, traveling 2.3 times longer distances, and unlocking key milestones up to 15.3 times faster than previous approaches. Its limitations point to broader challenges for agents. The first is the high cost of the GPT-4 API: Voyager depends on a level of code-generation quality that cheaper alternatives such as GPT-3.5 and open-source LLMs cannot yet provide, and the same is true of most agents. Agents also suffer from inaccuracies and hallucinations, and recursive loops can compound false answers. For example, the agent may be instructed to craft a “copper sword” or a “copper chest plate,” neither of which exists in the game. Even so, Voyager represents an important step forward for autonomous agents.

Radical Reads is edited by Leah Morris (Senior Director, Velocity Program, Radical Ventures).