Specialized AI chips are essential to modern AI, but they face a supply bottleneck: limited manufacturing capacity and complex supply chains have led to long production lead times. Geopolitical tensions, and the industry's reliance on Taiwan's TSMC as a single point of failure, are exacerbating this dynamic.

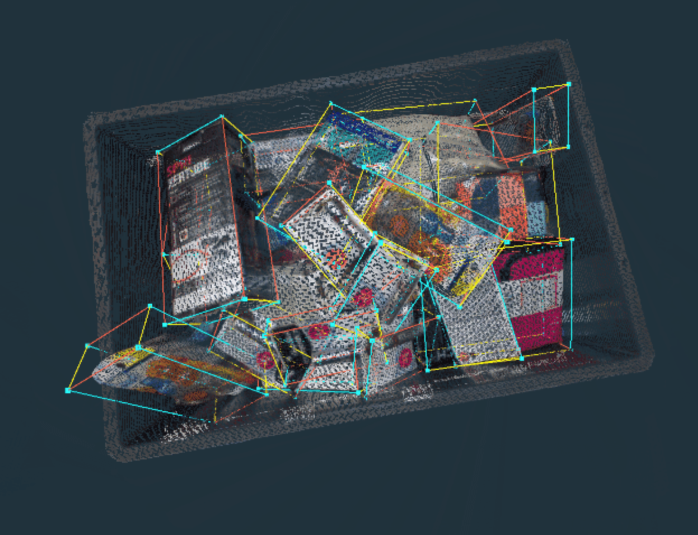

Meanwhile, the surge in generative AI adoption has significantly increased global demand, further straining already limited production capacity. In response to these constraints, the sector is buzzing with innovation in Platform-as-a-Service (PaaS) offerings, such as CentML (a Radical Ventures portfolio company), that optimize performance on both new and legacy GPUs and streamline GPU management.

Demand for high-performance computing is largely fueled by the development of foundation models and the enormous computational resources required to train Large Language Models (LLMs). These models underpin a new generation of AI applications.

Historically, the compute needs of AI models doubled approximately every 20 months. In the deep learning era, powered by GPUs, growth has accelerated sharply, with compute needs doubling every 6 to 7 months (see image below).
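For a rough sense of scale, the calculation below converts these doubling times into annual growth factors. It assumes steady exponential growth, which is a simplification. A doubling time of $T$ months corresponds to a yearly multiplier of $g = 2^{12/T}$:

$$
g = 2^{12/T}, \qquad
g_{T=20} = 2^{0.6} \approx 1.5, \qquad
g_{T=7} = 2^{12/7} \approx 3.3, \qquad
g_{T=6} = 2^{2} = 4
$$

Under this simple model, compute needs grew about 1.5x per year historically. In the deep learning era, they grow roughly 3.3x to 4x per year.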

As AI becomes more pervasive, the focus of computational demand is shifting from training to inference. Unlike the compute-intensive training phase, inference uses pre-trained models to process real-world data, often in smaller batches and in real time, delivering accurate results with fewer computational resources. Challenging Nvidia's dominance, major tech firms and traditional chipmakers are racing to introduce new chips that meet the demands of enterprise cloud services and their own product lines. Some companies, such as Radical portfolio company Untether AI, anticipated this shift and have developed chips optimized for AI inference that deliver faster, more energy-efficient, and more cost-effective operation.

The compute value chain, with all its complexities, remains integral to AI advancement. The pace of chip production and performance validation, along with the significant investment required for large-scale adoption, will remain a recurring focus in the AI infrastructure sector.

Radical Reads is edited by Ebin Tomy.