This week Peter Chen, the CEO and Co-founder of Radical portfolio company Covariant, explores why robotics may be heading towards its own ChatGPT moment as advances in robotics foundation models accelerate thanks to growing access to highly diverse data.

The genius of foundation models built with Generative Pre-trained Transformers – or GPTs – is their capacity to train on vast amounts of data to enable AI models that can generalize. Instead of having separate models for specific tasks such as natural language processing and image recognition, GPT-based AI can perform a wide variety of tasks using a single foundation model.

Foundation models are neural networks “pre-trained” on massive amounts of data without specific use cases in mind. As that generalized model is trained on a wider set of tasks, performance on each specific task gets better, because of ‘transfer learning’.

Foundation models have transformed AI in the digital world — powering large language models (LLMs) such as ChatGPT and DALL-E. We’ve seen foundation models enable these applications to understand and react to the most unusual situations with human-like dexterity. But when it comes to applications in the physical world, like robotics, these foundation models have, for good reason, taken a little longer to build.

In 2017, Pieter Abbeel, Rocky Duan, Tianhao Zhang, and I co-founded Covariant to build the first foundation model for robotics. Previously, we had pioneered robot learning, deep reinforcement learning, and deep generative models at OpenAI and UC Berkeley.

There had been some promising academic research in AI-powered robotics, but we felt there was a significant gap when it came to applying these advancements to real-world applications, such as robotic picking in warehouses.

One key factor that has enabled the success of generative AI in the digital world is a foundation model trained on a tremendous amount of internet-scale data. However, a comparable dataset did not previously exist in the physical world to train a robotics foundation model. That dataset had to be built from the ground up — composed of vast amounts of real-world data and synthetic data.

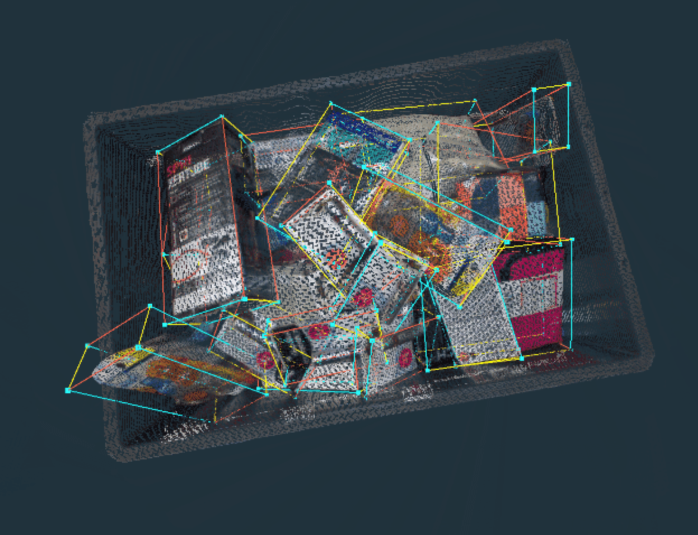

So how did we build this dataset in a relatively short time? Covariant’s unique application of fleet learning enables hundreds of connected robots across 4 different continents to share live data and learnings across the entire fleet. This real-world data has extremely high fidelity and surfaces new unknown factors that researchers would not have been able to imagine. Added to this is synthetic data that provides infinite variations of known factors. This data is multimodal, including images, depth maps, robot trajectories, and time-series suction readings. Covariant’s robotics foundation model relies on this mix of data. General image data, paired with text data, is used to help the model learn a foundational semantic understanding of the visual world. Real-world warehouse production data, augmented with simulation data, is used to refine its understanding of specific tasks needed in warehouse operations, such as object identification, 3D understanding, grasp prediction, and place prediction.

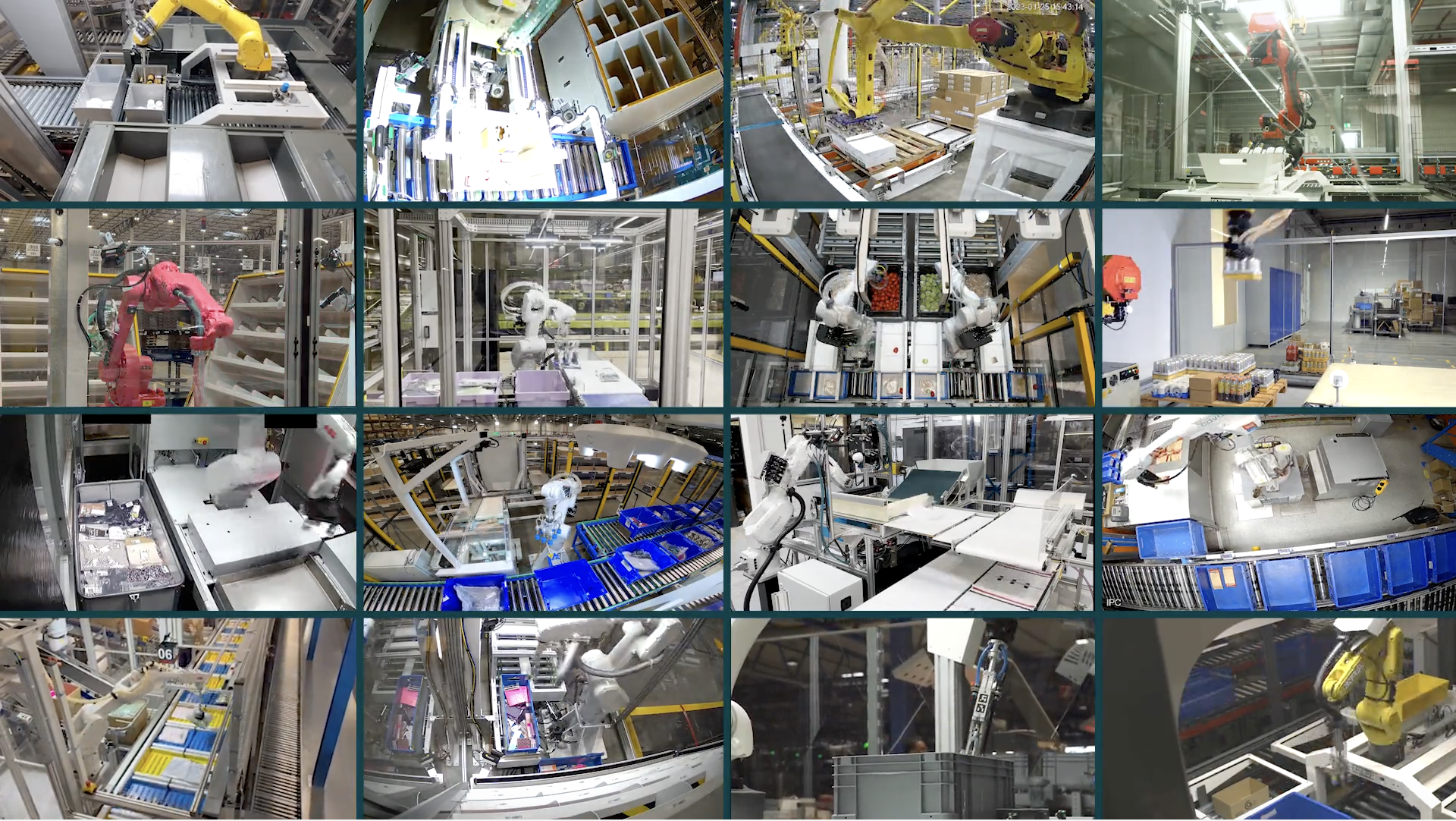

Covariant’s current robotics foundation model is unparalleled in terms of autonomy (level of reliability) and generality (breadth of supported hardware and use cases), and we’re continuing to further expand its reliability thanks to the unique data of (rare) long-tail errors we collect in production.

Autonomous robots, as they become more versatile and reliable, are making warehouses and fulfillment centers more efficient. They can perform tasks with greater accuracy, which pays dividends for consumers and businesses alike.

Most importantly, these advancements enable a future world where robots and people are working side by side. Robots handle dull, dangerous, and repetitive tasks while enabling the investment needed to create opportunities for meaningful work within environments that are critical to the economy but have struggled to maintain an engaged workforce.

AI News This Week

-

Cohere on a trajectory to go public (BNN Bloomberg)

Radical Ventures Managing Partner, Jordan Jacobs, discusses Cohere’s competitive advantages as demand for generative AI solutions from large enterprise customers accelerates. Cohere remains the only proprietary large language model provider that is cloud agnostic with models that can also be served on-prem – a critical benefit for privacy-sensitive industries including healthcare and the financial services sector.

-

OpenAI unveils AI that instantly generates eye-popping videos (New York Times)

OpenAI introduced Sora, its new text-to-video model which can generate high-quality videos up to a minute long based on user prompts. The company is sharing the new technology with a small group of early testers as it tries to understand the potential risks. OpenAI joins Google, Meta and startups like Runway in creating instant video generation tools that stand to profoundly impact the future of entertainment and visual media.

-

Cohere for AI launches LLM for 101 languages (VentureBeat)

Cohere for AI, the nonprofit research lab established by Cohere in 2022, unveiled Aya, an open-source large language model (LLM) supporting 101 languages — more than twice the number of languages covered by existing open-source models. The new model and dataset aims to help researchers unlock the potential of LLMs for dozens of languages and cultures largely ignored by most advanced models on the market today. The project involved 3000 collaborators around the world, including teams and participants from 119 countries.

-

How AI will change asset management (Financial Times)

Asset management is an aspect of financial services where generative AI offers great promise. In this opinion column, Mohamed El-Erian, President of Queens’ College, Cambridge University, suggests it is not hard to envisage a world where AI engines play an integral part of all the higher-skill tasks of asset allocation including modeling portfolios, security selection and risk mitigation. These engines will be trained on the enormous data sets that reside in the sector and, currently, are grossly under-exploited. El-Erian goes on to describe how generative AI tools will help create and structure new asset classes, trained by a combination of actual and virtual data.

-

Research: A Vision Foundation Model for the Tree of Life (Arxiv)

Researchers at Ohio State University have developed the largest dataset of biological images suitable for use by machine learning—and a new vision-based artificial intelligence tool to learn from it. The TreeOfLife-10M dataset contains over 10 million images of plants, animals and fungi covering more than 454,000 taxa in the tree of life. By comparison, the previous largest database ready for machine learning contains only 2.7 million images covering 10,000 taxa. The diversity of this data is one of the key enabling features of BioCLIP, an algorithm developed by the researchers. This machine learning model is designed to learn from the dataset by using both visual cues in the images with various types of text associated with the images, such as taxonomic labels and other information. Early results showed that BioCLIP performed 17% to 20% better at identifying taxa than existing models.

Radical Reads is edited by Leah Morris (Senior Director, Velocity Program, Radical Ventures).