The last century has brought us transformative technological advancements, from computers and the internet, to advanced computing, and now artificial intelligence. These advancements have influenced every field of study, every industry, and every facet of human life.

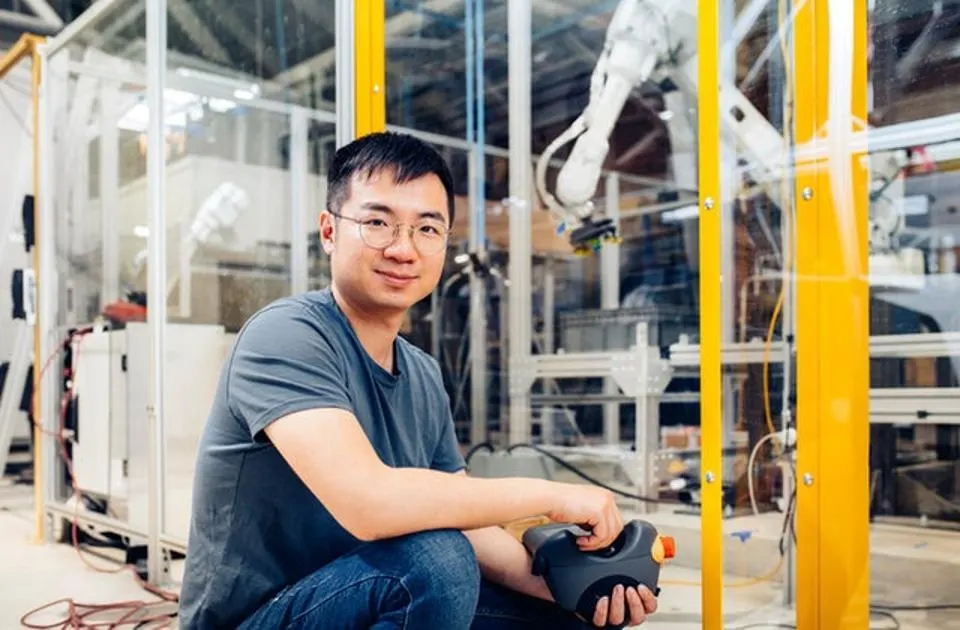

At Covariant, we believe that the next major technological breakthrough lies in extending these advancements into the physical realm. Robotics stands at the forefront of this shift, poised to unlock efficiencies in the physical world that mirror those we’ve unlocked digitally.

Recent advances in foundation models have led to models that can produce realistic, aesthetic, and creative content across various domains, such as text, images, videos, music, and even code. The general problem-solving capabilities of these remarkable foundation models come from the fact that they are pre-trained on millions of tasks, represented by trillions of words from the internet. However, partly due to the limitation of their training data, existing models still struggle with grasping the true physical laws of reality, and achieving the accuracy, precision, and reliability required for robots’ effective and autonomous real-world interaction.

RFM-1 — a Robotics Foundation Model trained on both general internet data as well as data that is rich in physical real-world interactions — represents a remarkable leap forward toward building generalized AI models that can accurately simulate and operate in the demanding conditions of the physical world.

Foundation model powered by real-world multimodal robotics data

Behind the more recently popularized concept of “foundation models” are multiple long-standing academic fields like self-supervised learning, generative modeling, and model-based reinforcement learning, which are broadly predicated on the idea that intelligence and generalization emerge from understanding the world through a large amount of data.

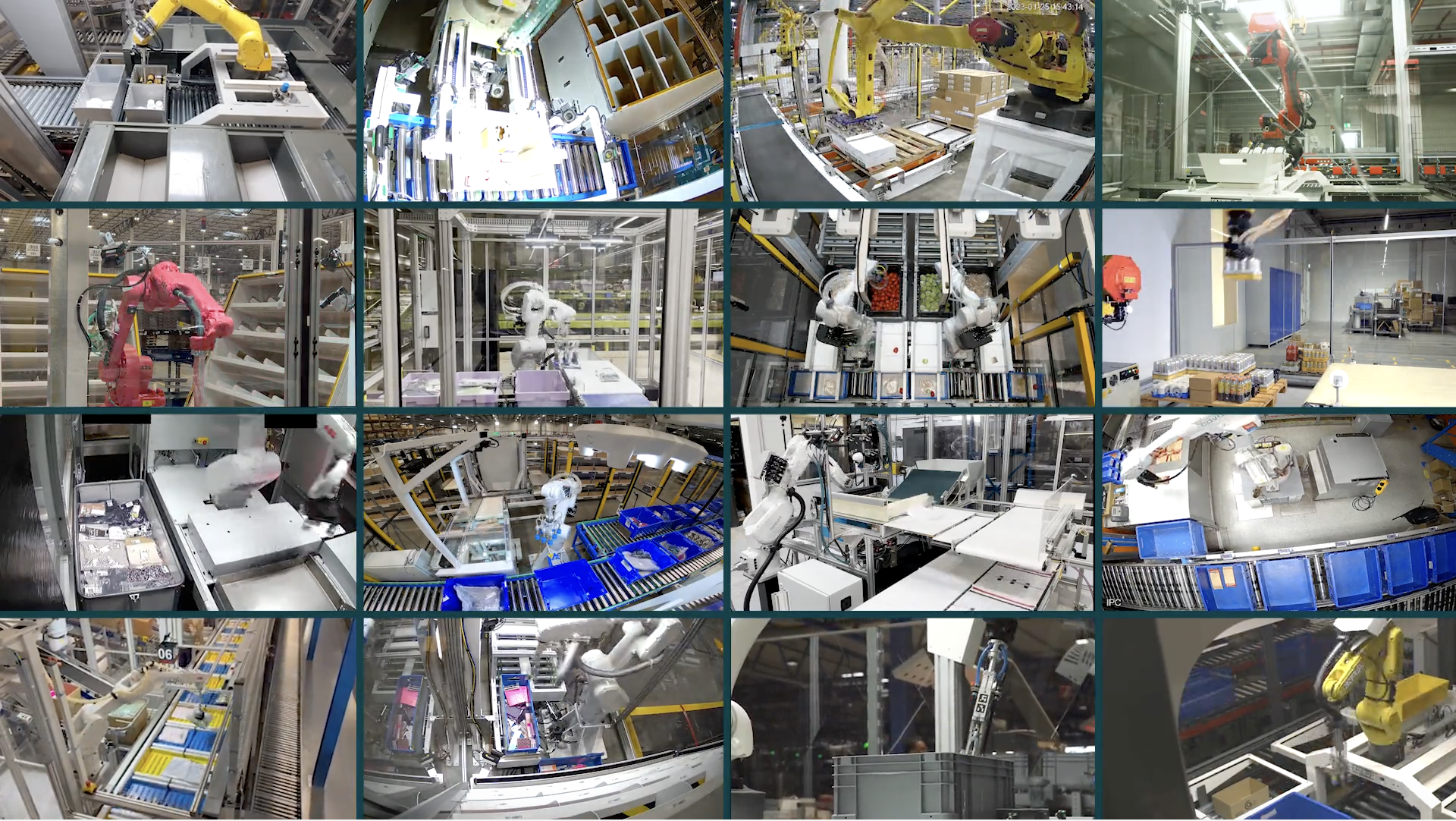

Following the embodiment hypothesis that intelligent behavior arises from an entity’s physical interactions with its environment, Covariant’s first step toward this goal of developing state-of-the-art embodied AI began back in 2017. Since then, we have deployed a fleet of high-performing robotic systems to real customer sites across the world, delivering significant value to customers while simultaneously creating a vast and multimodal real-world dataset.

Why do we need to collect our own data to train robotics foundation models? The first reason is performance. Most existing robotic datasets contain slow-moving robots in lab-like environments, interacting with objects in mostly quasi-static conditions. In contrast, our systems were already tasked with working in demanding real-world environments with high rates of precision as well as performance. In order to build robotics foundation models that can power robots to achieve high rates in the real world, the training data has to contain robotic interactions in those demanding environments.

Understanding physics through learning world models

Learned world models are the future of physics simulation. They offer countless benefits over more traditional simulation methods, including the ability to reason about interactions where the relevant information is not known apriori, operate under real-time computation requirements, and improve accuracy over time. Especially in the current era of high-performing foundation models, the predictive capability of such world models can allow robots to develop physics intuitions that are critical for operating in our world.

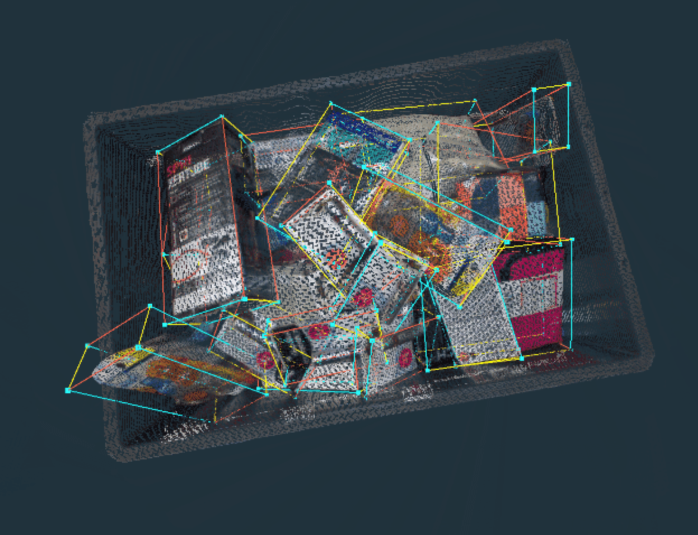

We have developed RFM-1 with exactly this goal in mind: To deal with the complex dynamics and physical constraints of real-world robotics, where the optimization landscape is sharp, the line between success and failure is thin, and accuracy requirements are tight, with even a fraction of centimeter error potentially halting operations. Here, the focus shifts from merely recognizing an object like an onion, to managing its precise and efficient manipulation, all while minimizing risks and coordinating with other systems to maximize efficiency.

Leveraging language to help robots and people collaborate

For the past few decades, programming new robot behavior has been a laborious task for experienced robotics engineers only. RFM-1’s ability to process text tokens as input and predict text tokens as output opens up the door to intuitive natural language interfaces, enabling anyone to quickly program new robot behavior in minutes rather than weeks or months.

RFM-1 doesn’t just make robots more taskable by understanding natural language commands, it can also enable robots to ask for help from people. For example, if a robot is having trouble picking a particular item, it can communicate that to the robot operator or engineer. Furthermore, it can suggest why it has trouble picking the item. The operator can then provide new motion strategies to the robot, such as perturbing the object by moving it or knocking it down, to find better grasp points. Moving forward, the robot can apply this new strategy to future actions.

RFM-1 is the start of a new era for Covariant and Robotics Foundation Models.

By giving robots the human-like ability to reason on the fly, RFM-1 is a huge step forward toward delivering the autonomy needed to address the growing shortage of workers willing to engage in highly repetitive and dangerous tasks — ultimately lifting productivity and economic growth for decades to come.

AI News This Week

-

How AI is being used to accelerate clinical trials (Nature)

Innovative applications of AI are expediting clinical trials, addressing the slow pace of drug development described by Eroom’s law. AI applications range from trial design optimization, improved patient recruitment, to outcome analysis. Innovations like predictive algorithms and AI-driven tools for summarizing research and refining patient selection are streamlining the trial process. A key advancement is Radical Ventures portfolio company Unlearn’s ‘digital twins’, reducing the need for large control groups and enhancing trial efficiency. This integration of AI into clinical trials promises faster drug development, wider participation, and more adaptive research methods, amidst increasing trial complexity and regulatory challenges.

-

Cohere releases powerful ‘Command-R’ language model for enterprise use (VentureBeat)

Cohere, a Radical Ventures portfolio company, unveiled Command-R, its latest language model, distinguishing itself through enhanced retrieval augmented generation (RAG) capabilities, large context windows, and an emphasis on cost-efficiency aimed at transforming business applications on a large scale. In a move to foster research, Cohere has also made the model’s weights publicly available for non-commercial research.

-

Artificial Intelligence Act: MEPs adopt landmark law (European Parliament)

Last week, the European Parliament passed the Artificial Intelligence Act aiming to balance innovation with fundamental rights. It bans “high-risk AI applications”, mandates transparency, and seeks to support SMEs. The bill has received a mixed response. The largest tech companies operating in the region are publicly supportive of the legislation in principle, while wary of the specifics.

-

AI talent is in demand as other tech job listings decline (Wall Street Journal)

Despite a rise in layoffs in the broader technology sector, the increasing demand for AI-related jobs remains. With the ability to draft emails, presentations, and images, AI-powered technology continues to reshape white-collar work. A recent survey of 5,800 people revealed that 68% of people polled have used AI at least occasionally at work. Another survey found that the majority of people claiming to use AI at work feel that it improves productivity. Despite increased layoffs over the past year, most tech workers are finding new jobs shortly after beginning their search, reflecting the continued demand for tech talent in a tight labour market.

-

Research: Evo: DNA foundation modeling from molecular to genome scale (ARC Institute)

The Arc Institute, a new nonprofit research organization, has released Evo, “a foundation model” that enables prediction and generation tasks from the molecular to genome scale.” Utilizing a unique StripeHyena architecture with 7 billion parameters, it excels in pattern recognition within extensive sequences, outperforming traditional models. Trained on comprehensive bacterial and viral genomes, Evo demonstrates exceptional capabilities in predicting protein and RNA mutations and generating coherent genome sequences. Despite being a first-generation model with inherent limitations, Evo’s initial performance reinforces the first article shared this week: algorithmic systems will increasingly advance scientific research. Specifically, Evo illustrates the transformative potential of next-token prediction paradigms, signalling a future where generative models become pivotal in accelerating discoveries and innovations across various scientific fields.

Radical Reads is edited by Ebin Tomy.