Ten years ago, AI pioneer Geoffrey Hinton, with his grad students Alex Krizhevsky and Ilya Sutskever, revolutionized the world of artificial intelligence with their seminal paper “ImageNet Classification with Deep Convolutional Neural Networks.” It proved a watershed moment for the field, triggering a decade of rapid innovation and world-changing applications.

This week at NeurIPS, the world’s largest AI research conference, their paper was awarded the conference’s “Test of Time” award for its huge impact on the field. But in his keynote talk to an audience of thousands at the conference, Geoffrey made it clear that his work is not done, offering a vision for an entirely new form of computing built upon a new algorithm, Forward Forward.

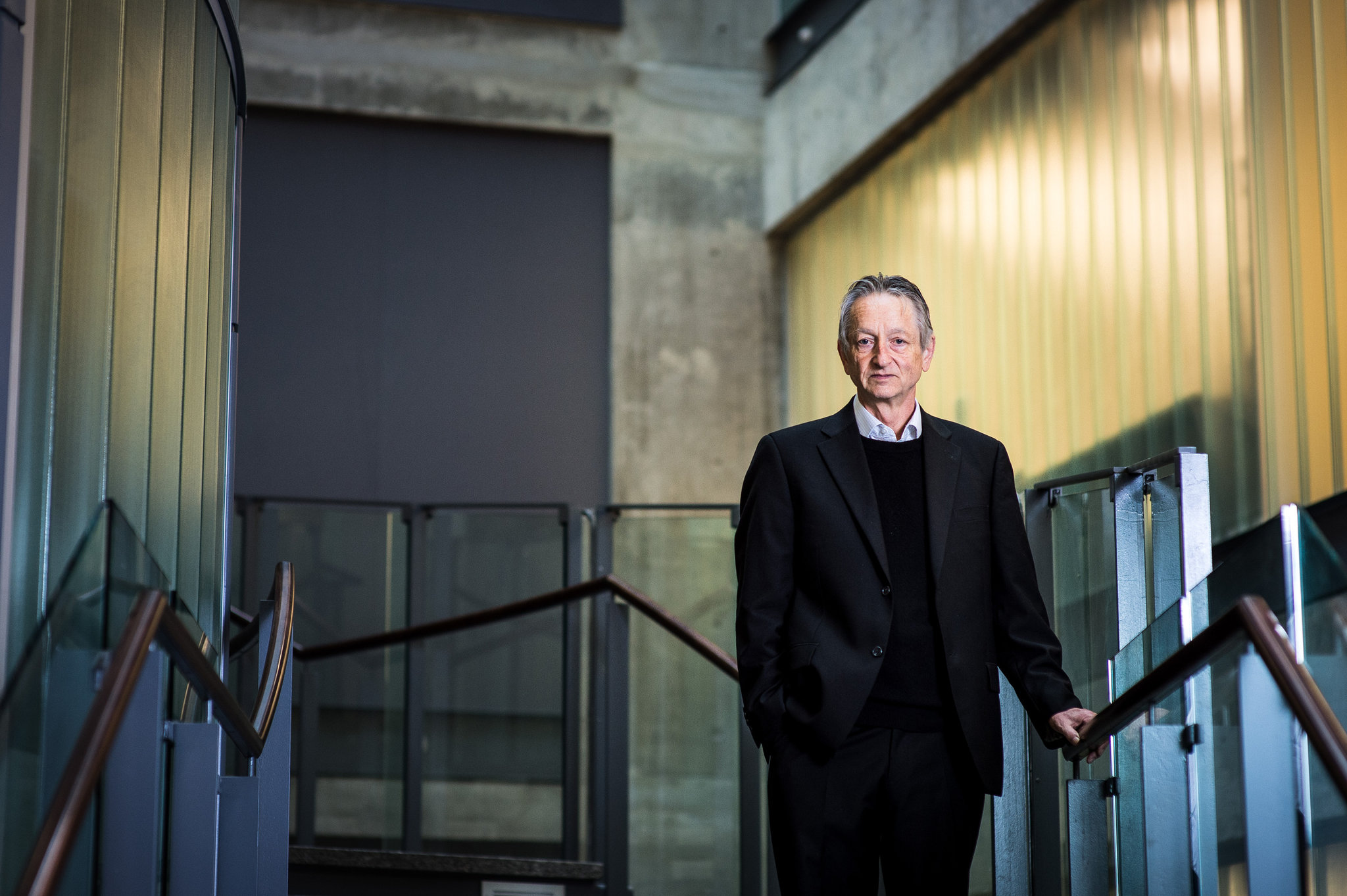

Radical Partner Aaron Brindle spoke with Geoffrey Hinton (who is an investor in Radical Ventures) after his talk. The following excerpt of their conversation was edited for length and clarity.

Aaron Brindle (AB): So much of your work has been in the pursuit of better understanding the brain and how it works. Does Forward Forward bring you closer to that goal?

Geoffrey Hinton (GH): That’s the hope, although I may be the only person who thinks that. Forward Forward refers to two forward passes of data through the multiple layers of artificial neurons: one pass uses real data and the other uses negative data. I was inspired many years ago by this idea of contrastive learning and the powerful role negative data can play in tuning our capacity to build models of the world. With Forward Forward, this negative data can be run through the network when it’s offline – or asleep.

Most people doing research on learning algorithms don’t think about sleep and may not wonder why we spend all that time sleeping. In the early 1980s, I was very focused on sleep and my work with Terry Sejnowski on Boltzmann Machines was inspired by the idea of what the brain is doing when it sleeps.

AB: In the list of references in your paper, you cite a Nature article from almost 40 years ago on the function of dream sleep. Do the early results from Forward Forward provide further insight into the mystery of why we dream?

GH: Why do dreams – which are always so interesting – just disappear? Francis Crick (who played an important role in deciphering the structure of DNA) and Graeme Mitchison had this idea that we dream in order to get rid of things that we tend to believe, but shouldn’t. This explains why you don’t remember dreams.

Forward Forward builds on this idea of contrastive learning and processing real and negative data. The trick of Forward Forward is that you propagate activity forwards with real data and get one gradient. Then, when you’re asleep, you propagate activity forwards again, starting from negative data (artificial data), to get another gradient. Together, those two gradients accurately guide the weights in the neural network towards a better model of the world that produced the input data.
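To make the two-gradient idea concrete, here is a minimal sketch of a single Forward Forward layer in plain Python. It is not Hinton's code: the ReLU units, the "goodness" measure (the sum of squared activities), the threshold `THETA`, the learning rate `LR`, the class name `FFLayer`, and the toy input vectors are all illustrative assumptions. The layer computes one gradient from a forward pass on real data (pushing goodness up) and another from a forward pass on negative data (pushing goodness down); nothing is sent backwards through the network.

```python
import math
import random

# Illustrative sketch of ONE Forward Forward layer (not Hinton's reference code).
# Assumptions: ReLU units, goodness = sum of squared activities,
# and a logistic loss around a fixed, hypothetical threshold THETA.
THETA = 2.0  # hypothetical goodness threshold
LR = 0.05    # hypothetical learning rate


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class FFLayer:
    def __init__(self, n_in, n_out, seed=0):
        rng = random.Random(seed)
        # Non-negative init, so every unit starts active on the non-negative toy inputs.
        self.w = [[rng.uniform(0.0, 0.5) for _ in range(n_in)] for _ in range(n_out)]

    def forward(self, x):
        # A single forward pass: ReLU(W x).
        return [max(0.0, sum(wi * xi for wi, xi in zip(row, x))) for row in self.w]

    def goodness(self, x):
        return sum(h * h for h in self.forward(x))

    def train_step(self, x, positive):
        # sign = +1 pushes goodness above THETA (real data);
        # sign = -1 pushes goodness below THETA (negative data).
        sign = 1.0 if positive else -1.0
        h = self.forward(x)
        g = sum(hj * hj for hj in h)
        # Loss = softplus(-sign * (g - THETA)); its derivative with respect to g:
        dl_dg = -sign * sigmoid(-sign * (g - THETA))
        # dg/dw[j][k] = 2 * h[j] * x[k], which is zero for inactive ReLU units (h[j] == 0).
        for j, row in enumerate(self.w):
            for k in range(len(row)):
                row[k] -= LR * dl_dg * 2.0 * h[j] * x[k]
        return g


layer = FFLayer(n_in=4, n_out=3)
real = [1.0, 0.0, 1.0, 0.0]      # hypothetical "real" example
negative = [0.0, 1.0, 0.0, 1.0]  # hypothetical "negative" example
for _ in range(200):
    layer.train_step(real, positive=True)       # forward pass on real data: one gradient
    layer.train_step(negative, positive=False)  # forward pass on negative data: another gradient
print(layer.goodness(real) > THETA > layer.goodness(negative))  # → True
```

For simplicity, this toy loop alternates the real and negative passes instead of running negative data in a separate offline phase, and it trains only one layer. A deeper version of the sketch would, under the same assumptions, train each layer the same way on the activities of the layer below, still without a backward pass between layers.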

AB: The Forward Forward algorithm does not rely on backpropagation. Why is this important?

GH: So the state of the art in deep learning has been backpropagation, which works like this: you have an input, which is maybe the pixels of an image, and you run a forward pass through the neural network and get an output such as a categorization that says: “that’s a cat.” Then you do a backward pass through the network, sending information backwards through the same connections, and change the neural activities to make the answer better or more accurate. If, for example, you input a picture of a cat and you go forward through the network and it says “that’s a dog,” backprop sends information backwards to tell the neurons how they ought to change their activities. So in the future, when it sees that picture, it will say “cat” and it won’t say “dog.”

The problem with backpropagation is that it’s very hard to see how it would work in the brain. It interrupts what you’re doing. If I’m feeding you pictures very fast – like in a video – you don’t want to stop and run the network backwards in time, which is what backpropagation would have to do. You want to just be able to keep going forwards as new data comes in.
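For contrast, here is a minimal backpropagation sketch in the same plain-Python style. The network is a hypothetical one: two ReLU hidden units, one linear output, and a squared-error loss, trained on one made-up example. The forward pass computes an output; the backward pass then sends the output error back through the same weights `w2` and uses each hidden unit's exact forward behavior (its ReLU derivative) to decide how every weight should change. The function names, sizes, and numbers are illustrative assumptions.

```python
# Toy two-layer network trained by backpropagation on a single example.
# Hypothetical sizes, weights, and data; pure Python for illustration only.

def forward(w1, w2, x):
    h = [max(0.0, sum(a * b for a, b in zip(row, x))) for row in w1]  # hidden ReLU units
    y = sum(a * b for a, b in zip(w2, h))                             # single linear output
    return h, y


def backprop_step(w1, w2, x, target, lr=0.1):
    h, y = forward(w1, w2, x)
    err = y - target  # dLoss/dy for loss = 0.5 * (y - target) ** 2
    # Backward pass: the error travels back through the same weights w2,
    # gated by each unit's ReLU derivative from the forward pass.
    dh = [err * w2j * (1.0 if hj > 0 else 0.0) for w2j, hj in zip(w2, h)]
    # Nudge every weight against its gradient (dh was computed before w2 changes).
    for j in range(len(w2)):
        w2[j] -= lr * err * h[j]
    for j, row in enumerate(w1):
        for k in range(len(row)):
            row[k] -= lr * dh[j] * x[k]
    return 0.5 * err * err  # loss before this update


w1 = [[0.5, -0.2], [0.3, 0.8]]  # hypothetical initial weights
w2 = [0.4, 0.6]
x, target = [1.0, 0.5], 1.0
first_loss = backprop_step(w1, w2, x, target)
for _ in range(100):
    last_loss = backprop_step(w1, w2, x, target)
print(first_loss, last_loss)
```

The line that computes `dh` is where this sketch needs exact knowledge of the forward pass: it reuses `w2` and each unit's ReLU derivative, and it can only run after the forward pass has finished.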

AB: What’s wrong with relying on backpropagation?

GH: So there is one reason why you might not want to rely on backprop, and that’s if you don’t actually know how the hardware works. Backpropagation relies on having a perfect model of what happens when you do the first forward pass. It knows what all the neurons are up to and exactly how they respond to inputs. To achieve this perfect model of the forward pass, backprop relies on energy-intensive digital hardware. It can’t run on low-wattage analog hardware.

AB: Like a brain?

GH: Some people talk about uploading themselves to a computer because they’d like to be immortal. It’s nonsense. An individual’s knowledge is reflected in the weights of their neural network. And what those weights mean depends entirely on where the connections are and what the input and output properties of each neuron are and so on. So there’s no way I could take the weights in your brain and put them into my brain. Wouldn’t work at all. And if you wanted to upload what you know into a computer, the connection settings wouldn’t be enough, you’d have to upload all the connectivity including all of the time delays in transmission and all the properties of the neurons, exactly how they behaved and exactly how this behavior changes as a function of their recent history of activity and all the chemicals in their local blood supply. It’s impossible. So, for biological systems, there is no easy way to separate hardware from software.

AB: This is your idea of mortal computers.

GH: Yes. We are used to computers where the knowledge in the program or the weights is immortal: it does not die when the hardware dies because it can be run on other hardware that behaves in exactly the same way. But if you want your trillion-parameter neural net to only consume a few watts, mortal computation may be the only option. Computer scientists don’t like this idea because you can’t write a program once and then upload it to a billion computers. If you want a billion computers to do something, you have to train each of them separately. It’s like having a billion toddlers. You can’t tell them what to do. They have to learn from examples.

AB: What are some of the applications of Forward Forward?

GH: Well, imagine you want something with the power of GPT-3 in your toaster. It will only cost a few dollars and it will run on just a few watts. But when you first get it, the toaster will be remarkably stupid. Over time, however, it will become smarter and you’ll be able to tell your toaster that you just took the bread out of the fridge, it’s sourdough and it’s a couple of weeks old. So even though it’s cold, it’s probably going to toast very fast. So watch out!

AI News This Week

-

V7 snaps up $33M to automate training data for computer vision AI models (TechCrunch)

As data proliferates, intelligent data orchestration is essential for businesses looking to deploy machine learning models. V7, Radical Ventures’ newest portfolio company, aims to become the industry standard for managing data in modern AI workflows. V7 is redefining how computer vision teams manage their training data. Using automated annotation tools and cutting-edge data orchestration techniques, V7 automates the annotation and categorization of data for training AI models, while also providing a key orchestration layer to manage how and when training data is deployed into AI workflows.

-

While everyone waits for GPT-4, OpenAI is still fixing its predecessor (MIT Technology Review)

Tech forums have exploded with screenshots of users’ conversations with ChatGPT, released this week. Built on GPT-3.5, the model is designed to interact via dialogue to hold a conversation, create art and poetry, and write and edit code. The sentiment from the research community is that ChatGPT makes significant improvements, particularly in answering follow-up questions and rejecting nonsensical requests. The model is currently in a free research preview to source users’ feedback and learn about its strengths and weaknesses.

-

Did physicists create a wormhole in a quantum computer? (Nature)

Physicists have sent quantum information through a simulated wormhole in a ‘toy’ universe that exists only inside a quantum computer. The tunnel is analogous to passages through space-time that might connect the centres of black holes in the real Universe. Machine learning was used to determine a quantum system that preserves gravitational properties. The authors note, “The surprise is not that the message made it across in some form, but that it made it across unscrambled.” Experiments like this could help unite quantum mechanics and gravity, leading to a real-world quantum gravity theory.

-

137 emergent abilities of large language models (Jason Wei)

Google Brain research scientist Jason Wei has pulled together more than 130 examples of ‘emergent abilities of large language models.’ Novel abilities, undetectable in smaller models, emerge as models get larger. Evaluating the capabilities of large models is an emerging field of scientific study as benchmarking efforts become an increasing area of focus within the industry.

-

Disney made a movie-quality AI tool that automatically makes actors look younger (or older) (Gizmodo)

Photorealistic digital re-aging of faces in video is becoming increasingly common in entertainment and advertising, but the predominant 2D painting workflow often requires frame-by-frame manual work that can take days, even for skilled artists. Researchers have developed an AI visual-effects tool that alters the age of actors, making them appear older or younger in videos. FRAN (face re-aging network) suffers less than other approaches from facial identity loss, poor resolution, and unstable results across consecutive video frames.

Radical Reads is edited by Ebin Tomy.