In our periodic series on seminal papers from the history of AI, we jump to the mid-1980s. Prior to that, the “connectionism” approach (neural-network-based artificial intelligence) had gone through an ‘AI winter.’ Everything changed when Geoffrey Hinton and his fellow researchers discovered a way to get neural networks to work. They authored a paper that charted a course for a future AI revolution.

In 1986, Geoffrey Hinton, David Rumelhart, and Ronald Williams published a short paper in Nature titled “Learning representations by back-propagating errors.” The paper showed that a mathematical technique known as backpropagation could be used to train and optimize neural networks efficiently.

The “backprop” algorithm enabled the development of deep learning, a subfield of AI that is responsible for the most significant breakthroughs in recent years, revolutionizing industries from healthcare to finance and powering generative AI technologies.

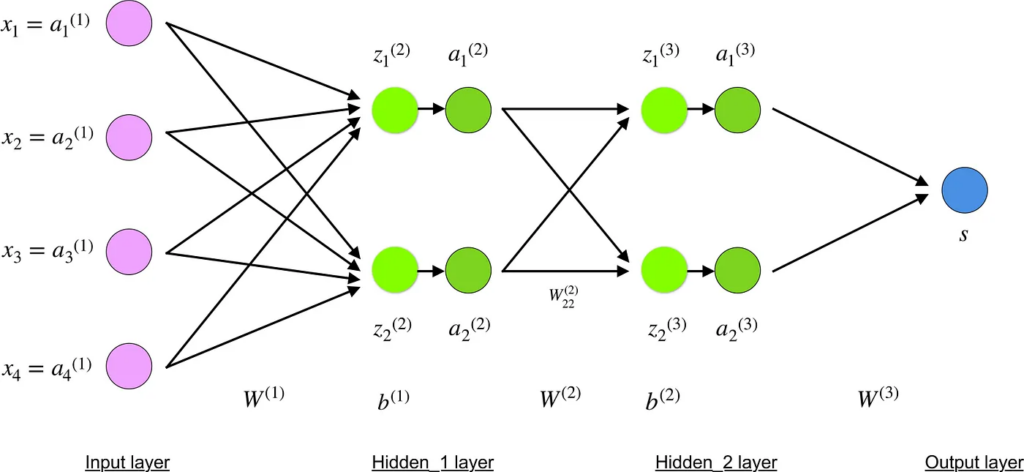

Today, backpropagation is the backbone of modern AI. When we read about large language models with billions of parameters, it is backpropagation that allows us to arrive at the appropriate values for each of the parameters.

However, backpropagation comes at a cost. Training a neural network is akin to adjusting the knob on a radio to catch the right signal. Except there are billions of knobs and each one needs millions of small adjustments. As one can imagine, this approach requires a tremendous amount of computing power.
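To make the knob analogy concrete, here is a minimal sketch of backpropagation on a toy network, written in plain Python. It is an illustration under stated assumptions, not the setup from the 1986 paper: the XOR task, the three hidden units, the learning rate, and the function name `train_xor` are all choices made for this example. Each pass computes how the error changes with every weight (the backward pass) and then nudges every weight a little in the direction that reduces the error.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(hidden=3, lr=0.5, epochs=2000, seed=0):
    """Train a tiny 2-input, one-hidden-layer sigmoid network on XOR.

    Hypothetical toy setup for illustration; returns the loss per epoch.
    """
    rng = random.Random(seed)
    data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
            ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
    # The "knobs": hidden-layer weights and biases, output weights and bias.
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        g_w1 = [[0.0, 0.0] for _ in range(hidden)]
        g_b1 = [0.0] * hidden
        g_w2 = [0.0] * hidden
        g_b2 = 0.0
        loss = 0.0
        for x, t in data:
            # Forward pass: compute hidden activations and the output.
            h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j])
                 for j in range(hidden)]
            y = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
            loss += 0.5 * (y - t) ** 2
            # Backward pass: apply the chain rule from the output error
            # back to every weight, accumulating gradients over the batch.
            d_out = (y - t) * y * (1.0 - y)
            g_b2 += d_out
            for j in range(hidden):
                g_w2[j] += d_out * h[j]
                d_hid = d_out * w2[j] * h[j] * (1.0 - h[j])
                g_b1[j] += d_hid
                g_w1[j][0] += d_hid * x[0]
                g_w1[j][1] += d_hid * x[1]
        losses.append(loss)
        # Small adjustment of every knob against its gradient.
        b2 -= lr * g_b2
        for j in range(hidden):
            w2[j] -= lr * g_w2[j]
            b1[j] -= lr * g_b1[j]
            w1[j][0] -= lr * g_w1[j][0]
            w1[j][1] -= lr * g_w1[j][1]
    return losses


losses = train_xor()
print("loss at first epoch:", losses[0])
print("loss at last epoch:", losses[-1])
```

This toy network has only 13 knobs and four training examples. A large language model repeats the same forward and backward steps over billions of weights and vast amounts of data, which is where the compute cost comes from.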

A number of companies are developing hardware and software solutions to reduce the compute and power required to train these models. Radical Ventures portfolio company CentML has developed a software-only solution that reduces compute needs by 30-60%. Another Radical portfolio company, Untether AI, is developing AI-specific chips that can run an already trained network more efficiently than any commercially available chip. These solutions will be critical to sustaining progress in building and deploying AI models powered by backpropagation.

Given these compute-intensive demands, researchers are searching for an alternative architecture that overcomes the inherent energy demands of backpropagation. Among those scientists is Geoffrey Hinton – one of the original researchers responsible for making backpropagation an indispensable part of the modern AI system.

We had a chance to ask Geoff Hinton about the 1986 paper and his current research. The following is an excerpt of our conversation:

Radical Ventures: How long had you been working on this problem before the publication?

Geoff Hinton: I started graduate school in 1972 and I was already obsessed with the problem of how to learn the connection strengths in a deep neural network. I quickly realised that the visual system must be using an unsupervised learning procedure, and in 1973 I developed an unsupervised way to learn interesting individual feature detectors, but I couldn’t figure out a good way to make them all different if they were looking at the same inputs. My adviser kept pressuring me to stop working on this problem, so I did my PhD thesis on a way to do inference in neural networks whose weights were determined by hand. But I kept thinking about the real problem.

Radical: What led you to backprop?

Geoff Hinton: David Rumelhart was a brilliant psychologist who figured out how to extend the delta rule, which is normally used to train neural nets in which the inputs are directly connected to the outputs. I encouraged him to formulate his approach in terms of doing gradient descent on an objective function. It seems very obvious now, but at that time the people thinking about synaptic learning rules were typically not trying to derive the rule this way.
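For readers who have not met the delta rule, the sketch below shows one common textbook form of it for a single linear output unit connected directly to its inputs, and notes why it amounts to gradient descent on a squared-error objective. The function name `delta_rule_step`, the learning rate, and the two toy training examples are assumptions made for this illustration; they do not come from the conversation.

```python
def delta_rule_step(weights, inputs, target, lr=0.1):
    """One delta-rule update for a single linear output unit (toy example)."""
    output = sum(w * x for w, x in zip(weights, inputs))
    error = target - output
    # The gradient of 0.5 * error**2 with respect to weight i is -error * x_i,
    # so stepping against the gradient adds lr * error * x_i to each weight.
    return [w + lr * error * x for w, x in zip(weights, inputs)]


# Hypothetical training data: the unit should learn weights close to [2, -1].
examples = [((1.0, 0.0), 2.0), ((0.0, 1.0), -1.0)]
weights = [0.0, 0.0]
for _ in range(200):
    for inputs, target in examples:
        weights = delta_rule_step(weights, inputs, target)
print(weights)
```

Because there is no hidden layer here, the error at the output tells each weight directly how to change. Backpropagation extends the same gradient-descent idea to weights in hidden layers, whose effect on the error has to be computed through the layers above them.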

Radical: What has surprised you most about the reception to your work since the publication?

Geoff Hinton: There were two things that really excited me most about backpropagation. The first was its ability to do unsupervised learning, discovering hidden patterns or data groupings without the need for human intervention by reconstructing the input at the output. The second was its ability to train recurrent neural nets. It seemed to me that this would allow us to learn big modular networks in which different, locally connected parts of the network computed different things and all the parts collaborated effectively. But it did not work well in practice. The gradients either vanished or exploded if the nets were run for many time steps. Sepp Hochreiter solved this problem in 1997 by inventing LSTMs but his solution looked complicated and it took a very long time before people exploited it effectively. It was 10 years before Alex Graves demonstrated that LSTMs could solve the difficult problem of reading Arabic handwriting and it was this application that convinced many skeptics.

The next big surprise came in 2017 when the research community realized that there was an alternative to recurrent neural networks – transformers – that worked much better on our current computers. The transformer architecture powers generative AI applications like large language models.
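The vanishing and exploding gradients Hinton describes can be seen in a deliberately simplified calculation. The sketch below assumes a scalar, linear recurrence h_t = w * h_{t-1}, which is far simpler than a real recurrent network; the function name `gradient_scale` and the example weights are hypothetical. Backpropagating through each time step multiplies the gradient by w, so over many steps the gradient shrinks toward zero when |w| < 1 and blows up when |w| > 1.

```python
def gradient_scale(recurrent_weight, steps):
    """Size of d h_T / d h_0 for the toy recurrence h_t = w * h_{t-1}."""
    scale = 1.0
    for _ in range(steps):
        # The chain rule contributes one factor of w per time step.
        scale *= recurrent_weight
    return abs(scale)


for w in (0.9, 1.0, 1.1):
    print(f"w = {w}: gradient scale after 100 steps = {gradient_scale(w, 100):.3g}")
```

With 100 time steps, a weight of 0.9 leaves a gradient on the order of 1e-5, while a weight of 1.1 gives one on the order of 1e4. Real recurrent networks involve weight matrices and nonlinearities, but the same repeated multiplication drives the behaviour.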

While the neural network architecture was inspired by how the brain works, backpropagation is most likely not the way our brain processes information.

Radical: What is your current hypothesis on how the brain learns from new information?

Geoff Hinton: There are several kinds of learning in the brain. Learning which action to choose is very different from learning what features to use in the early parts of the visual pathway. Some kind of model-based reinforcement learning is probably used to select actions, whereas some kind of unsupervised learning is probably needed for learning visual features. But the basic problem of how to make the low-level visual features be useful for high-level vision is unsolved. Backpropagation does this very effectively, which is why so much effort has gone into trying to find plausible ways for the brain to do backpropagation, but none of the existing approaches scales up well to big networks. It feels a bit like trying to invent a room-temperature superconductor.

Radical: Has that changed since the recent progress in LLMs?

Geoff Hinton: I don’t think that LLMs give us much insight into how the brain learns, but they do demonstrate that really big neural networks can be very smart.

People who have very little understanding of neural networks often say that LLMs are just splicing together bits of text from the web and that they suffer from hallucinations. This does not explain how they can solve novel reasoning problems and it is a very nice example of humans confabulating (which is the correct technical term for hallucinations by LLMs). The critics have little understanding of what is going on inside the net so they just make up a simple story that feels plausible to them. Human memory does not work by retrieving things from storage. All human memories are inventions designed to be plausible. If the subject has enough knowledge in the weights of her network, she will invent something very close to the truth, so it looks like retrieval. If not, the subject may well be convinced she is telling the truth, but the invention will not correspond to what actually happened. As Frederic Bartlett showed in the 1930s, the confabulation will often be a version of the truth that is distorted towards a story that is more plausible than the truth. Rather than claiming that LLMs do not really understand because they often confabulate, we should treat the confabulations as evidence that they are very like us.

Big neural networks trained with backpropagation are proving to be very good at solving the previously intractable computational problems that arise in many different areas of science. It may well be that a big neural net can figure out how the brain can approximate backpropagation, but it’s hard to make predictions in the vicinity of singularities.

Radical Reads is edited by Ebin Tomy.
