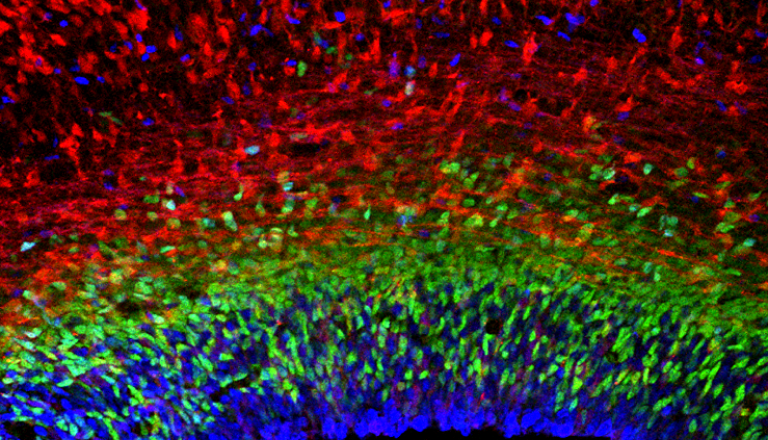

This past year, the Nobel Prize in Chemistry was awarded in part to the developers of AlphaFold, the first time a scientific breakthrough enabled by artificial intelligence received such recognition. DeepMind’s AlphaFold solved the decades-old problem of protein structure prediction and showcased how machine learning can help us understand biology. Now, the opportunity lies in advancing and applying the latest generative techniques to design proteins from scratch.

That is why we are proud to announce our investment in Latent Labs, which emerged from stealth this past week.

Founded by DeepMind alumnus Dr. Simon Kohl, a core contributor to AlphaFold and the lead of DeepMind’s protein design team, Latent Labs is building AI foundation models to “make biology programmable,” with plans to partner with biotech and pharmaceutical companies to discover new cures for disease.

By empowering researchers to computationally create new therapeutic molecules, such as antibodies or enzymes, Latent Labs will help partners unlock previously challenging targets and open new paths to personalized medicine. Partners can also use the platform to design proteins with improved molecular properties, such as increased affinity and stability, expediting drug development timelines.

Latent Labs has attracted world-class talent with experience at DeepMind, Microsoft, Google, Stability AI, Exscientia, Mammoth Bio, Altos Labs, and Zymergen. The company has offices in London and San Francisco, as well as its own wet lab facilities, where the team has experimentally validated the capabilities of its computational platform.

For more information, you can find coverage of the launch in the FT, The Times, Forbes, TechCrunch, Sifted, EndPoints, and STAT, among others. The company is actively hiring and exploring commercial partnerships, so please get in touch to learn more.

AI News This Week

-

Now more than ever, AI needs a governance framework (Financial Times)

In this opinion piece, Fei-Fei Li, Radical Scientific Partner and CEO and Co-Founder of Radical Ventures portfolio company World Labs, outlines critical principles for AI governance. Li advocates for grounding policy in scientific reality, based on empirical data and research rather than speculation; crafting pragmatic policies that minimize unintended consequences while incentivizing innovation; and empowering the entire AI ecosystem through open access to models and tools. She emphasizes that although AI has advanced rapidly, effective governance must preserve open collaboration and ensure that technological advances are rooted in human-centred values.

-

5 notes from the big A.I. summit in Paris (The New York Times)

At the Artificial Intelligence Action Summit in Paris, several key trends emerged in global AI policy and development. Europe appears to be reconsidering its strict regulatory approach: French President Emmanuel Macron announced $112.5 billion in AI investments in France while warning against “punitive” regulations. Panelists and speakers sought to balance AI’s potential to accelerate breakthroughs in medicine and climate science against AI safety concerns. Discussions also centred on DeepSeek’s recent breakthrough and the implications of competitive models, close to the AI frontier, that can be built with modest capital investment.

-

How artificial intelligence is changing baseball (The Economist)

Major League Baseball teams are using AI to transform player analysis and game strategy. Building on the statistical analysis of the “Moneyball” era, teams now employ AI models to predict player performance and optimize gameplay using vast datasets from high-speed cameras and radar tracking. Baseball is particularly well suited to AI because it unfolds as a series of discrete individual matchups and has a rich historical record. Teams are developing models to analyze pitching mechanics, predict injuries, and identify player tendencies, and they use large language model interfaces to make AI insights accessible to coaches and players.

-

The AI relationship revolution is already here (MIT Technology Review)

AI chatbots are transforming personal relationships and routines, serving as emotional confidants, creative collaborators, and life coaches. People use AI for needs ranging from mental wellness support to parenting guidance, highlighting the technology’s role as an accessible alternative to traditional services. While these interactions demonstrate the technology’s versatility and constant availability, the users interviewed remain aware of the distinction between algorithmic assistance and authentic human connection, suggesting a nuanced evolution in how people navigate relationships in an AI-enhanced world.

-

Research: Simple test-time scaling (Stanford/University of Washington/Allen Institute for AI/Contextual AI)

Researchers introduce s1, a minimalist approach to improving language model reasoning through test-time compute scaling. The team achieved strong reasoning performance by fine-tuning Alibaba’s Qwen2.5-32B-Instruct model on just 1,000 carefully curated examples, challenging the assumption that large datasets are necessary. Key innovations include “budget forcing,” which controls how long the model reasons at test time by cutting its thinking short or extending it by appending “Wait” when the model tries to stop, and a data curation process that prioritizes difficulty, diversity, and quality. The resulting model outperforms OpenAI’s o1-preview by up to 27% on competition math questions while requiring significantly less training data and compute.
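The budget-forcing idea can be illustrated with a toy decoding loop. This is a minimal sketch under stated assumptions, not the s1 implementation: `toy_next_token` is a hypothetical stand-in for a language model, `<end_think>` is an assumed end-of-thinking delimiter, and budgets are counted in whole tokens. When the model tries to stop before `min_tokens`, the loop drops the delimiter and appends “Wait”; when the trace reaches `max_tokens`, the loop closes the thinking phase itself.

```python
from typing import Callable, List

END_THINK = "<end_think>"  # assumed end-of-thinking delimiter (hypothetical)


def toy_next_token(context: List[str]) -> str:
    """Hypothetical stand-in for an LLM: emits "step" tokens and tries to
    end its thinking after three consecutive "step" tokens."""
    trailing_steps = 0
    for tok in reversed(context):
        if tok != "step":
            break
        trailing_steps += 1
    return END_THINK if trailing_steps >= 3 else "step"


def budget_force(
    next_token: Callable[[List[str]], str],
    prompt: List[str],
    min_tokens: int,
    max_tokens: int,
) -> List[str]:
    """Generate a thinking trace, extending it with "Wait" until it has at least
    min_tokens tokens and cutting it off at max_tokens tokens (the closing
    delimiter is not counted)."""
    trace: List[str] = []
    while len(trace) < max_tokens:
        tok = next_token(prompt + trace)
        if tok == END_THINK:
            if len(trace) >= min_tokens:
                break  # the model stopped on its own after meeting the minimum
            # The model tried to stop too early: suppress the delimiter, nudge it on.
            trace.append("Wait")
            continue
        trace.append(tok)
    # Close the thinking phase, whether the model stopped or the maximum was hit.
    trace.append(END_THINK)
    return trace


if __name__ == "__main__":
    prompt = ["Q"]
    # → ['step', 'step', 'step', '<end_think>']
    print(budget_force(toy_next_token, prompt, min_tokens=0, max_tokens=100))
    # → ['step', 'step', 'step', 'Wait', 'step', 'step', 'step', '<end_think>']
    print(budget_force(toy_next_token, prompt, min_tokens=6, max_tokens=100))
    # → ['step', 'step', '<end_think>']
    print(budget_force(toy_next_token, prompt, min_tokens=0, max_tokens=2))
```

In this sketch, raising `min_tokens` forces extra “Wait” rounds and lowering `max_tokens` truncates the trace, which is the sense in which a single knob can scale test-time compute up or down.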

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)