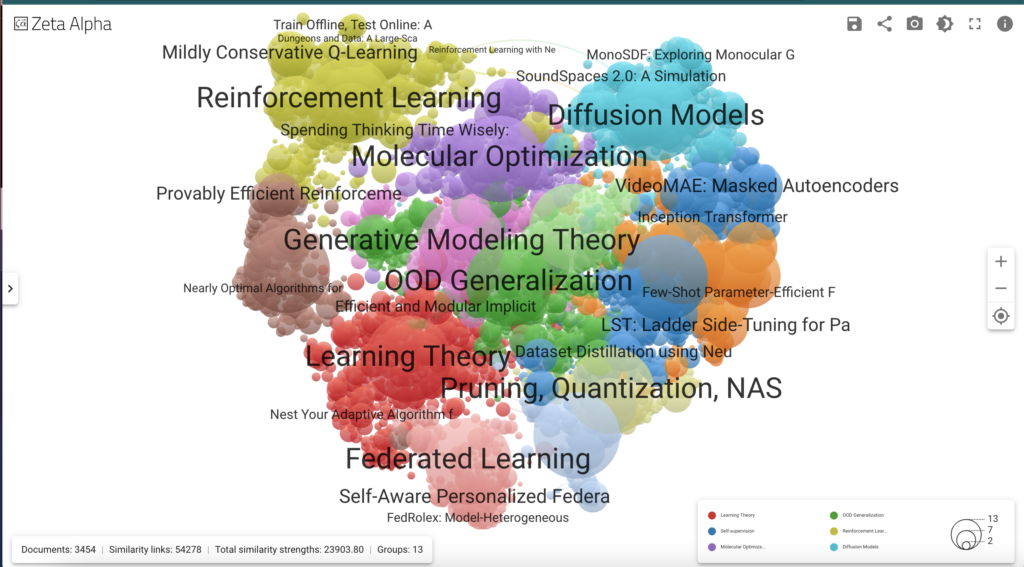

The thirty-seventh Conference on Neural Information Processing Systems (NeurIPS) was held in December. It is the biggest moment on the AI research calendar, and this was an especially buzzy year: more than 16,000 attendees, including many of the world’s top AI researchers and practitioners, came together for the six-day conference. Organizers received a record number of paper submissions: 13,330 in total, compared to 9,634 last year.

This week, we explore three themes from the NeurIPS conference that will inform the AI landscape in 2024.

- Compute is (still) king

Efficient use of compute remains crucial, and the high cost of computational power continues to challenge researchers and companies wanting to leverage AI. Notably, several Outstanding Papers emphasized strategies for scaling and optimizing computational efficiency. The “ML with New Compute Paradigms” workshop explicitly underscored the importance of collaboration between machine learning and alternative computation sectors, aiming to create a symbiotic relationship that enhances hardware efficiency. Additionally, CentML, a notable player in the field and a Radical Ventures portfolio company, garnered attention for its solutions for increasing GPU efficiency, minimizing latency, and improving throughput.

- The race toward the next Transformer

The transformer architecture, while versatile and dominant in NeurIPS submissions, is also a major driver of increasing computational demands. Rob Toews, a partner at Radical Ventures, previously highlighted the transformer’s pivotal role in the current generative AI boom, underpinning groundbreaking companies like Cohere and models and products like GPT-4, Midjourney, Stable Diffusion, and GitHub Copilot. He also shed light on emerging research aimed at supplanting the transformer. This includes efforts to replace its “attention” mechanism with functions that scale more efficiently, thereby reducing computational load and enhancing long sequence processing. A notable example is the S4 lab, led by Chris Ré, who presented an invited talk at NeurIPS. Accepted papers also showcased alternatives such as Hyena and Liquid neural networks.

- An increase in responsibility research

This year’s conference highlighted an increased focus on responsible AI, encompassing topics like fairness, transparency, privacy, and governance, evident from the substantial number of related papers, oral sessions, and invited talks. The two Main Track Outstanding Paper awards reflected this: one went to research on making the measurement of privacy computationally cheaper, and the other to research underscoring the importance of evaluation and honest measurement. Discussions around frameworks for bolstering responsible AI practices were prominent, with Radical’s Responsible AI Startups (RAIS) framework surfacing in the roundtable sessions. Concurrently, an ongoing debate contrasted proprietary and open-source models. The “Beyond Scaling” panel exemplified this: participants were reluctant to share detailed model information, which pushed the discussion toward abstractions. This sparked conversations about whether proprietary models are a suitable topic at NeurIPS, a forum traditionally devoted to disseminating open research. The proprietary versus open-source debate is gaining momentum and is set to be a central theme in 2024.

Radical Reads is edited by Ebin Tomy.