“And would it have been worth it, after all, After the cups, the marmalade, the tea, Among the porcelain, among some talk of you and me, Would it have been worth while, To have bitten off the matter with a smile, To have squeezed the universe into a ball To roll it towards some overwhelming question, To say: “I am Lazarus, come from the dead, Come back to tell you all, I shall tell you all”— If one, settling a pillow by her head Should say: “That is not what I meant at all; That is not it, at all.”

Modernists like T.S. Eliot wrote in an era of dislocation. They broke with longstanding literary traditions, using stream-of-consciousness prose and other experimental techniques, to grapple with seismic shifts in science, technology, and society.

Over a century later, in another time of technological transformation, Eliot’s words feel as apropos as ever. The innovation of our day is AI, and in 2023, LLMs in particular have taken the world by storm. These models squeeze the universe into a ball—or, at least, most of our human digital experience—in the form of a bazillion training tokens. Like Eliot’s Lazarus, they promise to tell us everything: entertaining us, answering our most esoteric questions, and helping us perform complex tasks.

But for a long time, LLM outputs weren’t worth the while. Until recently, thanks primarily to a technique called reinforcement learning from human feedback, or RLHF, which uses human input to refine LLMs’ outputs.

Born from AI safety research, RLHF has become an important product innovation in its own right. It’s the “secret sauce” making conversational agents from Cohere,** OpenAI, and Anthropic so conversant. Stanford’s Holistic Evaluation for Language Models (HELM), which benchmarks LLM performance on a variety of metrics, notes the importance of human feedback for making models an order of magnitude smaller than peers competitive in terms of accuracy, robustness, and more.

But RLHF also surfaces higher-order business model questions. Incorporating human feedback requires, well, humans, making it costly and decreasing scalability. Some now speculate that it will become as costly and important as compute.

More broadly, integrating human feedback into LLMs means making policy decisions. Whose feedback matters? Who decides who matters? Is RLHF embedding points of view into AI systems? Isn’t human feedback inherently subjective, anyway? How should the outsourced labor force underpinning RLHF today be compensated and supported?

In this piece, we dive into the buzzy, complicated world of applying the human touch to LLMs, providing some background, an overview of the technique, and its applications and implications for AI startups and the ecosystem more broadly.

Background: “We are the thing itself”

For one reason why we need to incorporate human feedback into language models, we can look to another modernist. Virginia Woolf wrote: “We are the words; we are the music; we are the thing itself.” And on the Internet, we are a chaotic, ugly bunch.

LLMs are pre-trained with large volumes of Internet text, represented as “tokens,” or smaller units of phrases, sentences, or paragraphs. For instance, OpenAI pre-trained GPT-3 with 499B tokens, the majority of which came from non-curated web content. The biggest data source, Common Crawl, is comprised of >50B web pages. Perhaps unsurprisingly, researchers analyzing this massive dataset have found evidence that it contains significant amounts of hate speech and sexually explicit content. 4-6% of a small sample of the Common Crawl dataset contained hate speech; ~2% of web pages contained adult content, including URLs like “adultmovietop100.com/” and “adelaid-femaleescorts.webcam.” For LLMs, which are trained to predict the next token in a series, this means outputs, without intervention, can contain hateful or nasty stuff.

Importantly, beyond toxic content generation, early LLMs also weren’t very good at producing outputs aligned with what people wanted. Predicting subsequent tokens in a sequence does not necessarily mean that model outputs will be helpful; indeed, LLMs have no innate sense of what is helpful for users.

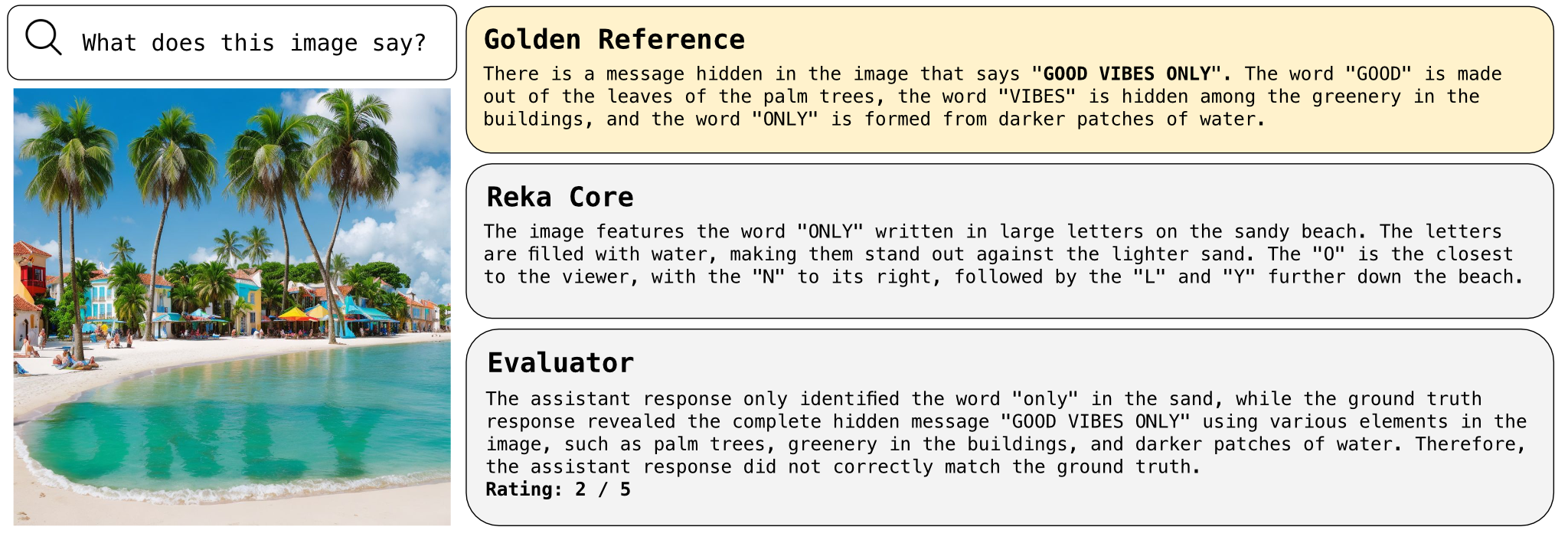

For instance, Scale AI recently documented the difference between asking OpenAI to write a story about Star Wars with the original GPT-3 vs. ChatGPT.

The ChatGPT example is, of course, richer and more entertaining. It’s closer to something an imaginative person would write.

Both of these challenges were significant hurdles for LLM adoption and real-world utility. The quality and kinds of outputs matter quite a bit for both consumer and enterprise adoption, whether you’re a Fortune 500 company using LLMs for customer service or a consumer dreaming up a new Star Wars storyline. And then, of course, there are the ethical concerns. Without intervention, LLMs may not behave like they “should,” i.e., they may produce content that may not align with certain ethical standards. Some argue that models that produce harmful or hateful content shouldn’t be rolled out broadly for public use.

How does RLHF work?

RLHF is the most popular solution for addressing these challenges today. At a high-level, the concept is to fine-tune a pre-trained LLM with information about human preferences, prodding it to produce desirable outputs.

One interesting note: in spite of its name, RLHF is not really reinforcement learning (RL). In RL, an agent learns from the feedback it receives as it takes actions in an environment. The feedback is in the form of rewards or punishments, which guide the agent toward optimal behavior. In RLHF, there is no environment in which an agent is acting, and only single actions are being predicted. Agents only receive the reward signal without knowing anything about the actions they took or their environment. In short, there is no interaction between the agent and the environment that is necessary for true reinforcement learning. The technique has primarily retained its name as a nod to its creation by reinforcement learning researchers.

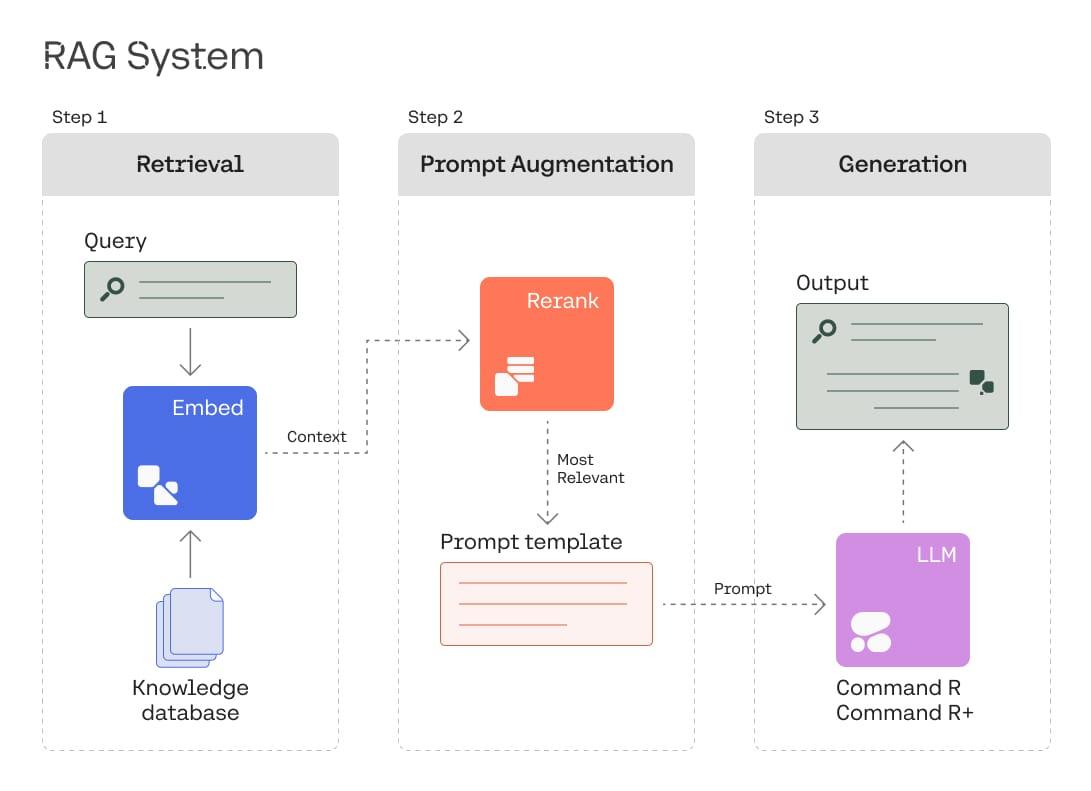

At a high level, here’s how RLHF works today:

- Start with a pre-trained large language model, like GPT-3. (Some may be fine-tuned further beyond the base model — Anthropic, for instance, did this.)

- Train a preference model that “scores” inputs according to human preferences. To do this, human annotators “rank” outputs from language models according to pre-determined criteria. These preferences are fed back into the model as training or fine-tuning data.

- Using reinforcement learning, fine-tune the initial LLM with algorithms called Policy Proximal Optimization (PPO) to maximize outputs aligned to human preferences.

OpenAI outlined their RLHF methodology with InstructGPT:

RLHF today is a creative solution to hard problems. Implicit in the approach is “do as I say, not as I do.” Human preferences are hard to quantify, and that the easiest way to encode them into models may just be to bring humans into the loop.

The technique is showing up everywhere in the world of LLMs today. OpenAI used RLHF first on InstructGPT and famously later on ChatGPT. Anthropic also used the technique in developing its assistant, Claude. Cohere also uses human preference data in its models, but takes a slightly different approach from OpenAI and Anthropic.

More broadly, RLHF has also entered cultural discourse. The Internet has developed some pretty good analogies, likening the technique to the Freudian “superego” for LLMs. Evocatively, some have characterized LLMs as akin to H.P. Lovecraft’s mythical shoggoths—tentacled, many-eyed, giant-squid-esque, intelligent creatures that mimic human forms and processes. RLHF is a façade making them present well to the world.

Will RLHF be productized, and who will do it?

The human-in-the-loop RLHF process calls to mind data labeling and annotation in supervised machine learning. Labeling has been core to training more performant supervised learning systems, especially in domains like computer vision. There were a number of start-ups that sprung up to manage this process, including now late-stage players like Scale AI. Will there be a groundswell of new RLHF startups providing outsourced human annotator services and speeding up the process with automation?

To get an indication, let’s look at how the workflow operates today. Since OpenAI didn’t accompany the ChatGPT release with a paper, we don’t know too many specifics about the RLHF process for ChatGPT. The InstructGPT paper gives us more detail, as does Anthropic’s “Training a Harmful and Helpful Assistant” paper.

For human data collection, OpenAI hired 40 contractors through Upwork and ScaleAI. They managed them quite closely, selecting contractors based on performance on a screening test identifying potentially harmful or sensitive outputs and collaborating throughout the project, including onboarding contractors and collaborating in a shared chat room. Anthropic hired a combination of Upwork contractors and “master” US-based Mechanical Turk workers and collaborated in a similarly close manner.

Here is the rub: it is a lot easier to decide whether an image contains a cat or a dog than to decide if a natural language output is harmful. Both Anthropic and OpenAI acknowledge this and try to address through crowdworker vetting, training, and selection criteria. However, the preferences embedded in these model are heavily influenced by both the researchers who train crowdworkers, the crowdworkers themselves, and even the prompts evaluated. The process is very subjective, and assumes there is some homogeneity in human preferences. This, of course, isn’t the case, for instance across cultures.

We don’t have numbers, but the added recruitment criteria for crowdworkers likely also make the process more costly and certainly less scalable. If a system designer wanted to use RLHF to make an LLM work in domain-specific task, like answer medical questions or perform specific coding tasks, the evaluation task gets even more specialized.

So what next? Incumbents that already have large networks of crowdworkers and expertise managing complex annotation workflows will likely have an advantage in productizing RLHF-as-a-service. Scale has been particularly vocal about the importance of the technique and clearly sees a market opportunity.

Is there room for a new entrant here? Perhaps, for one that layers in automation services, provides better access to experts for domain-specific tasks, or pioneers a cheaper, faster RLHF workflow. More to come, but it’s clear that incorporating feedback is an increasingly important part of the stack for LLM development that will affect the economics of building and deploying these systems.

Open questions and implications

Many researchers have pointed out that RLHF is what works best today, but approaches may change. Some suggest that RL isn’t necessary, or even human feedback: feedback could be generated in an automated way and incorporated into LLMs through another learning process. There are open questions on how the techniques will evolve. Can you incorporate human preferences without RL or humans? What does that system look like, and how does it scale? Is it possible to “individualize” RLHF such that everyone might have personalized versions of LLMs shaped according to their own preferences, as OpenAI has suggested?

What about implications?

Let’s start with the debate. Oh, boy, has there been debate! RLHF has become a proxy for culture wars, with parties of all persuasions criticizing the methodology.

One pro-labor critique has been compensation and treatment of crowdsourced workers. These considerations have dogged platforms like Meta for years as they attempt to moderate social media content.

On the other side, free speech advocates have argued that RLHF is the imposition of “woke” points of view on LLMs, and that LLMs should be “liberated.” Elon Musk is reportedly developing a rival LLM without safeguards, in the style of Stability AI.

OK, so… lot going on. Are there consensus opinions? At the very least, RLHF is a validation of the importance of AI policy and safety research for the development of AI systems—not just for “feel good” reasons, but for product performance and enterprise value creation. Though perhaps not a consensus opinion, for this author, that is a cool thing, potentially spurring more interdisciplinary AI policy research that can have real systems impact.

In my opinion, hard conversations about what LLMs “should” produce, and the preferences embedded in them, is also good for a democratic society. To quote one final modernist, James Joyce: “I am tomorrow, or some future day, what I establish today. I am today what I established yesterday or some previous day.”

We should debate the guardrails. We should also debate who decides the guardrails, and users should have a say, too. Those decisions will be a critical influence on the systems of the future.

Molly thanks her graduate school statistics instructor, Teddy Svoronos, who opened his course with this stanza from Prufrock. Here is a link to the poem in its entirety.

**Cohere is a Radical Ventures portfolio company.

The following sources were consulted in developing this piece and we thank their authors:

Radical Reads is edited by Ebin Tomy.