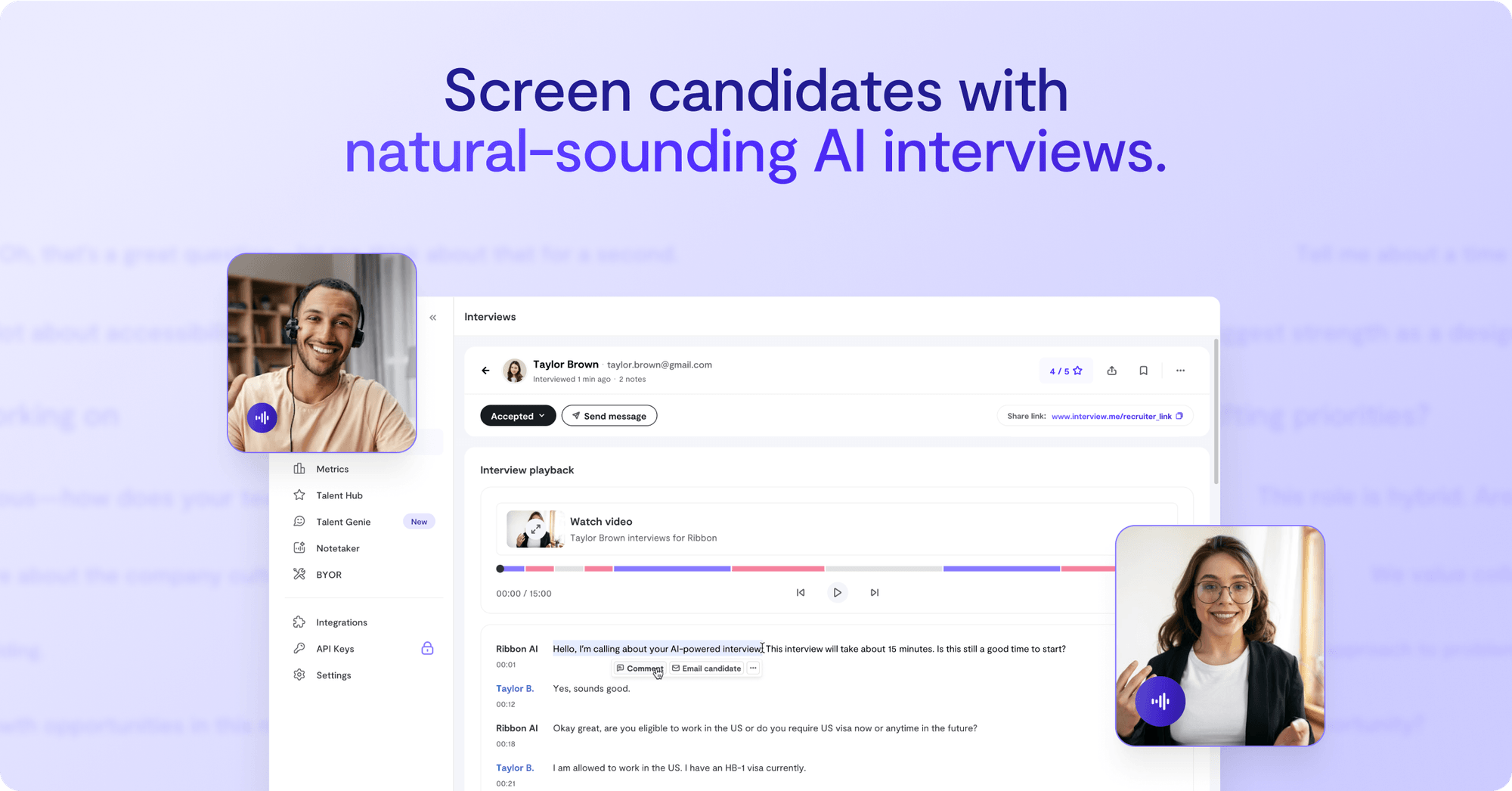

This week, Radical Ventures announced our lead investment in Ribbon. Founded by Arsham Ghahramani, an Amazon AI alum, and Dave Vu, a seasoned talent leader, Ribbon is transforming the hiring process with a best-in-class Voice AI recruiting agent that conducts natural, adaptive interviews at scale.

We are entering a new era of human-machine interaction, one where speaking to AI is not just functional, but genuinely engaging and productive. For years, conversational AI meant clunky keyword voice response systems incapable of real conversation. But recent model breakthroughs in latency, understanding, and intent recognition have led to Voice AI agents offering interactions that are remarkably human-like, natural, and fluid.

This technological leap signifies more than just improved chatbots; it unlocks enormous potential to transform enterprise applications and drive mainstream AI adoption as interactions with AI become virtually indistinguishable from human conversations. The opportunity at this frontier informed Radical Ventures’ lead investment in Ribbon AI.

Ribbon stands at the forefront of the Voice AI revolution, tackling a universally acknowledged pain point: the broken hiring process. In high-volume sectors, Ribbon can conduct thousands of screening interviews in hours – a scale impossible for human teams. This drives massive efficiencies, cutting time-to-interview from days to minutes and speeding up the overall hiring process significantly.

Traditional hiring is often slow, inefficient, and frustrating for both companies and candidates. Ribbon addresses this head-on with a best-in-class Voice AI recruiting agent that conducts job interviews for industries including manufacturing, airports, quick service restaurants, and healthcare staffing.

Ribbon’s platform facilitates natural, adaptive hiring interviews, responding to candidates in real time just as a human recruiter would. It understands nuance, asks relevant follow-ups, and even accommodates candidate nerves. Ribbon goes beyond simple screening: its AI provides smart analytics and summaries for hiring teams, actively promotes the role to candidates, and delivers immediate feedback, resulting in lower drop-off rates, higher candidate satisfaction, and even improved employee retention post-hire.

The magic behind Ribbon is driven by a special founding team. Arsham Ghahramani and Dave Vu possess a unique chemistry and execute with incredible speed.

Ribbon AI exemplifies the power and potential of the current wave of Voice AI. They are not just improving an existing process; they are redefining how companies connect with talent using sophisticated, conversational technology. We believe Voice AI will become a primary means of interacting with machines, and we are excited to support Ribbon as they lead the charge in building this future.

AI News This Week

- Researchers lift the lid on how reasoning models actually “think” (The Economist)

Researchers have developed a digital “microscope” that reveals how large language models (LLMs) like Claude process information. Using this tool, researchers discovered LLMs exhibit sophisticated planning, immediately contemplating rhyme options when beginning poetic couplets. Their findings also demonstrate that multilingual models understand concepts across languages before language-specific circuits translate them into words. The hallucinatory tendencies of LLM systems were also observed. Researchers found that when confronted with mathematical problems beyond their capabilities, these systems generate false answers and manipulate calculations to support suggested responses.

- The first trial of generative AI therapy shows it might help with depression (MIT Technology Review)

The first clinical trial of a generative AI therapy bot showed promising results for treating depression, anxiety, and eating disorder risks. Therabot demonstrated a 51% reduction in depression symptoms, 31% reduction in anxiety, and 19% reduction in eating disorder concerns during an eight-week trial with 210 participants. Unlike many commercial AI therapy bots that use minimally modified foundation models, Therabot was specifically trained in evidence-based therapeutic practices. Participants engaged with Therabot an average of 10 times daily, achieving comparable results to 16 hours of human therapy in half the time.

- Invasion of the home humanoid robots (The New York Times)

Humanoid robots powered by AI are poised to move into homes. Humanoids are designed to mimic movements like walking, bending, twisting, reaching, and gripping to navigate environments built for humans. These robots learn skills like walking through virtual simulations, in which digital versions practice thousands of times in physics-based environments before transferring this knowledge to physical robots. More complex household tasks like loading dishwashers or folding laundry require real-world data collection, with humans remotely guiding the robots through tasks to capture video and sensor data that AI systems can analyze to identify patterns and gradually build autonomy.

- AI and satellites help aid workers respond to Myanmar earthquake damage (The Globe and Mail)

Satellite imagery combined with AI is being used to assess building damage from Myanmar’s 7.7-magnitude earthquake. Satellites captured images that Microsoft’s AI for Good team analyzed to determine the level of damage that occurred. This data was shared with aid organizations, including the Red Cross, to guide relief efforts. The approach required building an AI model specific to the region due to the high variability in global landscapes, disaster patterns, and satellite imagery quality.

- Research: Neural alignment via speech embeddings (Nature Human Behaviour)

Researchers have discovered an alignment between human brain activity and AI speech model representations during conversations. The study revealed that speech embeddings predicted activity in perceptual regions and motor areas of the brain, while language embeddings better aligned with higher-order language areas. During production, language processing preceded articulation by 500 milliseconds, and during comprehension, speech areas activated shortly after hearing words, followed by language processing. The brain processes speech serially and recursively, whereas transformer-based language models operate in parallel across multiple layers, yet both achieve comparable results when pursuing identical objectives.

Radical Reads is edited by Ebin Tomy.