Rob Toews’ latest Forbes article explores a profound constraint facing today’s advanced AI systems: the very limited capacity AI models have to continue learning. Rob examines why this limitation exists and the early work being done to solve it. The following is an edited excerpt.

The pace of improvement in artificial intelligence today is breathtaking.

An exciting new paradigm — reasoning models based on inference-time compute — has emerged in recent months, unlocking a whole new horizon for AI capabilities.

The feeling of a building crescendo is in the air. AGI seems to be on everyone’s lips.

“Systems that start to point to AGI are coming into view,” wrote OpenAI CEO Sam Altman recently. “The economic growth in front of us looks astonishing, and we can now imagine a world where we cure all diseases and can fully realize our creative potential.”

Or, as Anthropic CEO Dario Amodei put it a couple of months ago: “What I’ve seen inside Anthropic and out over the last few months has led me to believe that we’re on track for human-level AI systems that surpass humans in every task within 2–3 years.”

Yet today’s AI continues to lack one basic capability that any intelligent system should have.

Many industry participants do not even recognize that this shortcoming exists because the current approach to building AI systems has become so universal and entrenched. But until it is addressed, true human-level AI will remain elusive.

What is this missing capability? The ability to continue learning.

What do we mean by this?

Today’s AI systems go through two distinct phases: training and inference.

First, during training, an AI model is shown a bunch of data from which it learns about the world. Then, during inference, the model is put into use: it generates outputs and completes tasks based on what it learned during training.

All of an AI’s learning happens during the training phase. After training is complete, the AI model’s weights become static. Though the AI is exposed to all sorts of new data and experiences once it is deployed in the world, it does not learn from this new data.
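The split described above can be illustrated with a toy sketch. This is not how any production model is built. It assumes a hypothetical one-weight model (`TinyModel`) and made-up data, and it contrasts serving with frozen weights against serving while taking a small gradient step on each new example.

```python
from dataclasses import dataclass


@dataclass
class TinyModel:
    """Hypothetical one-weight model: prediction = w * x."""
    w: float = 0.0

    def predict(self, x: float) -> float:
        return self.w * x

    def sgd_step(self, x: float, y: float, lr: float = 0.1) -> None:
        # Gradient of 0.5 * (w*x - y)**2 with respect to w is (w*x - y) * x.
        error = self.predict(x) - y
        self.w -= lr * error * x


def train(model: TinyModel, data, epochs: int = 50) -> None:
    """Training phase: the only place the weight is updated."""
    for _ in range(epochs):
        for x, y in data:
            model.sgd_step(x, y)


def serve_frozen(model: TinyModel, stream) -> list:
    """Inference with static weights: new data is seen but never learned from."""
    return [model.predict(x) for x, _ in stream]


def serve_online(model: TinyModel, stream) -> list:
    """Assumed continual-learning variant: predict, then update on each example."""
    outputs = []
    for x, y in stream:
        outputs.append(model.predict(x))
        model.sgd_step(x, y)
    return outputs


# Made-up data: the world follows y = 2x during training, then shifts to y = 3x.
train_data = [(1.0, 2.0), (2.0, 4.0)]
drifted_stream = [(1.0, 3.0), (2.0, 6.0)] * 20

frozen = TinyModel()
train(frozen, train_data)
online = TinyModel(w=frozen.w)  # same starting point after training

w_before = frozen.w
serve_frozen(frozen, drifted_stream)
serve_online(online, drifted_stream)
```

After the deployment stream, `frozen.w` is still the value learned in training, while `online.w` has moved toward the new relationship. Real systems face problems this toy ignores, such as forgetting earlier knowledge when weights keep changing.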

In order for an AI model to gain new knowledge, it typically must be trained again from scratch. In the case of today’s most powerful AI models, each new training run can take months and cost tens of millions of dollars.

Take a moment to reflect on how peculiar — and suboptimal — this is. Today’s AI systems do not learn as they go. They cannot incorporate new information on the fly in order to continuously improve themselves or adapt to changing circumstances.

In this sense, artificial intelligence remains quite unlike, and less capable than, human intelligence. Human cognition is not divided into separate “training” and “inference” phases. Rather, humans continuously learn, incorporating new information and understanding in real time. (One could say that humans are constantly and simultaneously doing both training and inference.)

What if we could eliminate the kludgy, rigid distinction in AI between training and inference, enabling AI systems to continuously learn the way that humans do?

This basic concept goes by many different names in the AI literature: continual learning, lifelong learning, incremental learning, online learning.

It has long been a goal of AI researchers — and has long remained out of reach.

Another term has emerged recently to describe the same idea: “test-time training.”

As Perplexity CEO Aravind Srinivas said recently: “Test-Time Compute is currently just inference with chain of thought. We haven’t started doing test-time-training — where model updates weights to go figure out new things or ingest a ton of new context without losing generality and raw IQ. Going to be amazing when that happens.”

Fundamental research problems remain to be solved before continual learning is ready for primetime. But startups and research labs are making exciting progress on this front as we speak. The advent of continual learning will have profound implications for the world of AI.

To continue reading, see Rob’s full article in Forbes. Rob writes a regular column in Forbes about the big picture of AI.

AI News This Week

How A.I. is changing the way the world builds computers (The New York Times)

AI is transforming global computing infrastructure, with GPU-powered data centers built specifically for machine learning. These facilities pack in thousands of specialized chips designed for parallel processing and require significant amounts of electricity and water for cooling. A traditional 5-megawatt data center now powers only 8-10 rows of GPU machines, with industry power demands projected to triple by 2028. “My conversations have gone from ‘Where can we get some state-of-the-art chips?’ to ‘Where can we get some electrical power?’” notes Radical Ventures Partner David Katz.

Fast Company’s most innovative companies in 2025 (Fast Company)

Radical portfolio companies Nabla Bio and Waabi have been recognized on Fast Company’s Most Innovative Companies 2025 list. Nabla was selected in the biotech category for its breakthrough in computational antibody design. The company’s generative AI platform can create antibodies “from scratch” using only target protein sequence or structure data and could double the industry’s accessible disease-relevant drug targets. Waabi was selected in the transportation category for building driverless trucks powered by generative AI. The company has developed Waabi Driver, an autonomous driving system capable of humanlike reasoning, and Waabi World, a closed-loop simulator that reduces the need for extensive on-road testing.

A Google-backed weapon to battle wildfires made it into orbit (TechCrunch)

Radical portfolio company Muon Space has successfully launched the first satellite in the Google-backed FireSat constellation. The satellite features six-band multispectral infrared cameras specifically tuned for wildfire detection. The initial three-satellite phase will provide twice-daily global imaging coverage by 2026. When fully operational with over 50 satellites, the constellation will deliver five-meter resolution imagery of nearly all of Earth’s surface every 20 minutes, offering firefighters near real-time updates on fire behaviour.

She never set out to work in AI. Now she’s ensuring the transformative technology is accessible across more than 100 languages (Fortune)

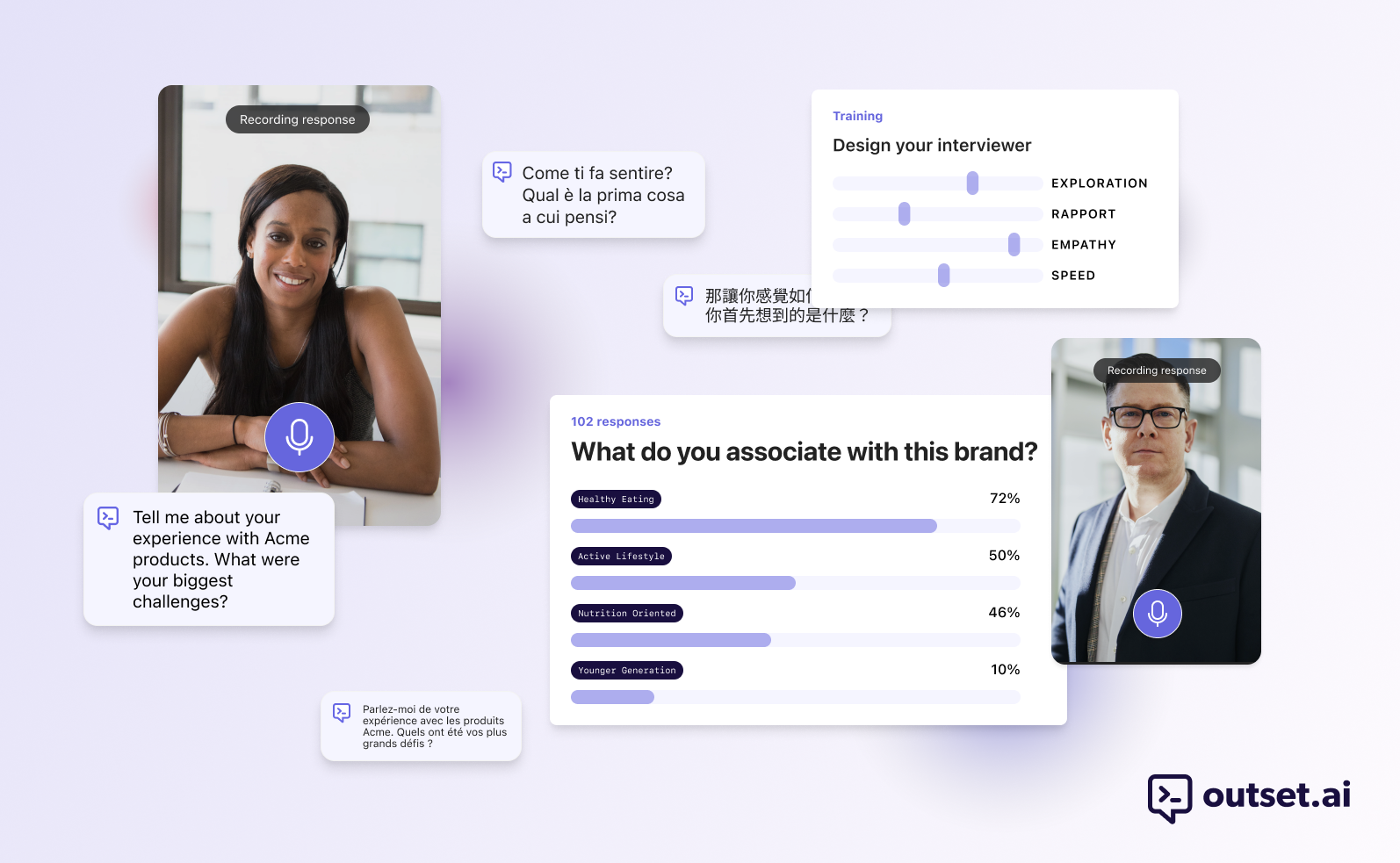

Sara Hooker, who heads Cohere for AI, the nonprofit research lab of Radical portfolio company Cohere, is working to make AI accessible in more than 100 languages through multilingual AI research. Drawing from her international upbringing across Africa, Hooker brings a personal perspective to this mission. Since 2022, her team has collaborated with over 3,000 researchers globally through Aya. Last week, they released Aya Vision, which supports visual inputs in 23 languages and enables users to analyze images in their native language for free on WhatsApp.

Research: Optimizing generative AI by backpropagating language model feedback (Nature)

Researchers have introduced TextGrad, a framework that optimizes AI systems by backpropagating language model feedback. This approach allows for the automatic improvement of complex AI systems orchestrating multiple large language models (LLMs) and specialized tools. Similar to how backpropagation transformed neural network development, TextGrad leverages natural language feedback to critique and improve various system components. The framework’s versatility has been demonstrated across diverse applications, including solving PhD-level science problems, optimizing radiotherapy treatment plans, designing molecules with specific properties, coding, and improving agentic systems.

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)