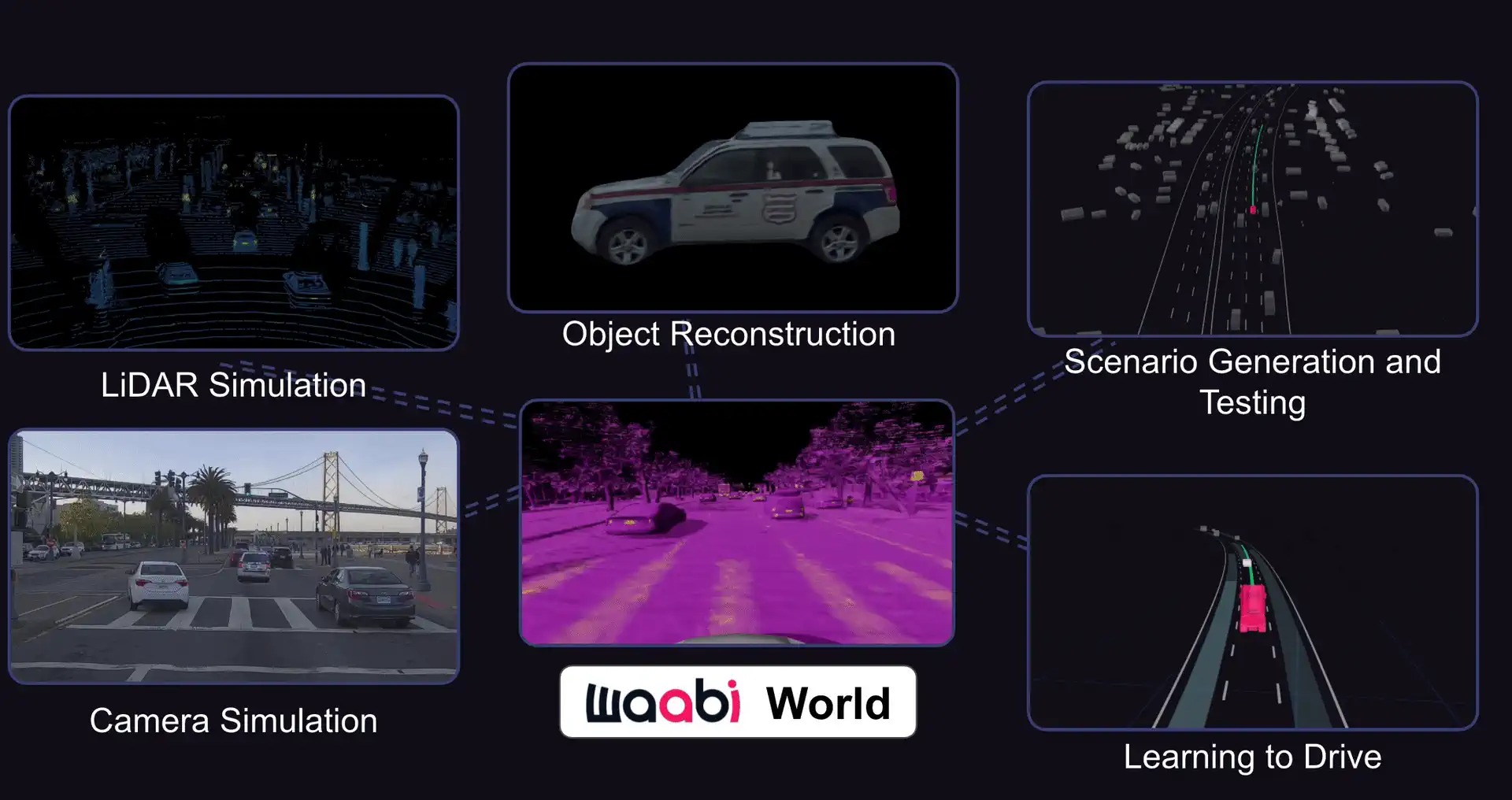

Radical Ventures portfolio company Waabi has achieved a 99.7% realism score in reproducing real-world driving scenarios. Founder and CEO Raquel Urtasun explains how this achievement sets a new safety standard for autonomous vehicles and why simulator realism is crucial for building public trust in self-driving technology.

The Waabi Driver changes lanes to give space to a vehicle on the shoulder, then merges back. The same maneuver is shown in the real world (left) and in simulation (right). Measured as the difference between the two executed trajectories, the realism score of Waabi World in this scenario is 99.7%.

One of the biggest challenges in developing autonomous vehicles (AVs) is gathering enough high-quality data to test the system so that we can be confident it will operate safely in the real world. Historically, this has involved extensive real-world driving to test whether the vehicle can handle a wide range of complex interactions. Humans, typically triage engineers, then meticulously analyze the data to identify interesting events, such as interventions where the autonomous system faltered, and use these instances to refine the system and improve its decision-making capabilities. The process is resource-intensive, inefficient and insufficient as a solution.

Simulation technology has emerged as the key solution for addressing these challenges. Importantly, the utility of simulators scales across three distinct levels as they become more sophisticated. Standard simulators can be used to support software testing, helping catch bugs and regressions that are introduced with new versions of the software. Advanced simulators can be used to understand how the system will perform and in which specific situations it will fail, helping to understand the deficiencies of the system. The final category is the holy grail, where simulators can actually be used to provide robust evidence for scientifically sound safety cases. But relying on simulation for these high-stakes tests introduces a new, critical hurdle: ensuring the realism of the simulator.

Measuring Realism: Outcome Over Visuals

Researchers have attempted to assess simulator realism by analyzing the similarity between the distribution of behaviours in simulation and in the real world. For example, one can compare the distribution of AV speeds, accelerations, hard brakes, safety buffer violations, etc., computed over all scenarios driven in simulation, with the distribution measured in the real world. The assumption is that if these statistical distributions are similar, then the simulator can be considered realistic. While this approach can provide a general indication of similarity, it cannot tell us whether the simulator is realistic enough to expose the specific deficiencies of the system or to be used for validation. This is because two systems could have similar aggregate distributions but react very differently in each individual situation. For example, a hard brake in simulation could be caused by falsely detecting pedestrians in crowded scenes, whereas in the real world it could be caused by falsely detecting pieces of discarded tire, known as tire shreds, on a highway.
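The gap between aggregate similarity and per-scenario similarity can be seen in a toy example. The sketch below uses made-up outcome data (the lists and function names are illustrative assumptions, not Waabi's data or tooling): the hard-brake rate is identical in both environments, yet the two never agree on any individual scenario.

```python
# Hypothetical per-scenario outcomes: 1 = the AV braked hard, 0 = it did not.
# Index i refers to the same scenario in both lists.
real_hard_brake = [1, 0, 1, 0, 0, 1]
sim_hard_brake = [0, 1, 0, 1, 1, 0]


def hard_brake_rate(outcomes):
    """Aggregate statistic: fraction of scenarios with a hard brake."""
    return sum(outcomes) / len(outcomes)


def per_scenario_agreement(real, sim):
    """Fraction of paired scenarios where the two outcomes match."""
    matches = sum(1 for r, s in zip(real, sim) if r == s)
    return matches / len(real)


# Difference between the aggregate rates in the two environments.
aggregate_gap = abs(hard_brake_rate(real_hard_brake) - hard_brake_rate(sim_hard_brake))
# How often the two environments agree on the same scenario.
agreement = per_scenario_agreement(real_hard_brake, sim_hard_brake)

print(f"aggregate gap: {aggregate_gap:.2f}, per-scenario agreement: {agreement:.2f}")
```

An aggregate comparison would rate this simulator as a perfect match, while a scenario-by-scenario comparison shows it disagrees with reality every time.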

A far superior and more trustworthy approach to measuring realism is pair-setting. This involves meticulously recreating a set of real-world scenarios within the simulator, matching the actors, their appearance, their precise behaviour, the weather, illumination, road conditions, etc., and then measuring the difference in the AV’s trajectories across both environments. This outcome-focused approach provides a concrete, measurable basis for evaluating realism.
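As a rough illustration of what "measuring the difference in the AV’s trajectories" can mean, the sketch below computes the average distance between two time-aligned trajectories. The metric, the function name and the sample coordinates are assumptions chosen for illustration; the article does not specify how the realism score itself is derived from trajectory differences.

```python
import math


def mean_displacement(real_traj, sim_traj):
    """Average Euclidean distance between time-aligned (x, y) points."""
    if not real_traj or len(real_traj) != len(sim_traj):
        raise ValueError("trajectories must be non-empty and equally sampled")
    distances = [math.dist(p, q) for p, q in zip(real_traj, sim_traj)]
    return sum(distances) / len(distances)


# Hypothetical (x, y) positions in metres, sampled at the same timestamps
# during a lane change in the real world and in its simulated twin.
real_traj = [(0.0, 0.0), (10.0, 0.0), (20.0, 1.5), (30.0, 3.0)]
sim_traj = [(0.0, 0.0), (10.0, 0.0), (20.0, 1.5), (30.0, 3.5)]

error = mean_displacement(real_traj, sim_traj)
print(f"mean displacement: {error:.3f} m")
```

Because each simulated trajectory is compared with its own real-world counterpart, a small error here says something about that specific scenario, which an aggregate distribution cannot.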

Leveraging Digital Twins for Pair-Setting

But how do we create these meticulously matched scenarios, and how do we do this at scale so that we have statistically significant evidence? The key lies in the creation of rich, data-driven digital twins of real-world scenarios, which can then serve as precise, comprehensive inputs that seed the simulation, enabling the systematic generation of paired, identical tests. Recent breakthroughs in neural simulation, such as NeRF, 3D Gaussian Splatting, or UniSim, have made this kind of digital twin generation not only possible but achievable at scale.

Setting an Industry Standard with Waabi World

At Waabi, we’ve leveraged this approach to rigorously validate the realism of our neural simulator, Waabi World. We’ve conducted extensive paired tests and achieved an unprecedented 99.7% realism score.

This astonishingly high degree of realism provides irrefutable evidence that our simulator faithfully replicates the real-world driving experience and is a monumental breakthrough for the industry. This result not only validates Waabi’s approach to safety but also establishes a transformative standard for the industry. Going forward, all AV developers using simulation need to be able to publicly demonstrate the quantified realism of their simulators. Just as we have safety standards for vehicles themselves, we must establish clear and measurable standards for the simulators on which their safety depends.

This transparency and accountability are absolutely paramount for building public trust in AV technology, and I call on all AV companies to embrace this new standard and prioritize simulator realism as a collective responsibility.

To learn more, read Waabi’s full article.

AI News This Week

- AI ‘application’ start-ups become big businesses in new tech race (Financial Times)

AI application startups built on large language models are generating tens of millions in revenue with nimble teams, scaling much faster than previous waves of software companies. These companies benefit from competition in the LLM market, which has reduced costs for running AI queries. Experts anticipate that these applications will deliver productivity gains in knowledge work without requiring the heavy investment needed to build foundation models, with a growing consensus that significant value will be captured by the AI application layer.

- Fintech's latest trend: AI agents for investment research (Forbes)

Radical portfolio company Hebbia is at the forefront of fintechs that leverage AI agents to conduct investment research. The company uses AI to help financial institutions analyze private market data in virtual data rooms, answering questions about customer concentration, revenue growth, and management qualifications while identifying potential risks. CEO George Sivulka explains that Hebbia can save private equity firms 20-30 hours per deal by generating comprehensive analysis and draft memos.

- Teachers worry about students using A.I. but they love it for themselves. (The New York Times)

Many educators are navigating a challenging contradiction: restricting student use of AI tools while increasingly adopting the technology for their own professional tasks. AI has already penetrated classroom environments, with teachers using it for functions such as analyzing student data, creating personalized lesson plans, grading essays, and delivering feedback. Although concerns persist about students using AI for homework completion and essay writing, some educational leaders suggest that cheating concerns have more to do with student engagement in class than access to AI. As schools continue to address these ethical considerations, educators broadly agree that teaching students about AI literacy has become essential for their future.

- AI models could help negotiators secure peace deals (The Economist)

Researchers are creating specialized AI tools to help diplomats navigate complex international negotiations. One such project, Strategic Headwinds, aims to assist Western officials in Ukraine peace talks by generating draft agreements while quantitatively scoring acceptability to stakeholders. Researchers are enhancing these tools with specialized “bot advisers” trained on speeches and writings of world leaders to help negotiators anticipate reactions. Advanced implementations will incorporate competitive game theory algorithms to predict stakeholder positioning.

- Research: Tracing language model outputs back to trillions of training tokens (Allen Institute for AI/University of Washington/UC Berkeley/Stanford University)

Researchers have introduced OLMoTrace, the first system capable of tracing language model outputs back to their original training data in real time. This open-source tool identifies verbatim matches between model-generated text and segments from the multi-trillion-token datasets used during training. The tool operates by pre-sorting all suffixes of the training corpus lexicographically, which allows exact matches to be located rapidly even in massive datasets. The system delivers results within approximately 4.5 seconds for typical responses by leveraging an extended version of the infini-gram algorithm with a novel parallel processing approach.
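To see why sorting suffixes makes exact matching fast, consider the toy sketch below. It is a minimal illustration of the general suffix-sorting idea on a ten-word corpus, not the tool's or infini-gram's actual implementation; the function names and sample text are assumptions. Once suffixes are sorted, every occurrence of a query sits in one contiguous run that a binary search can find.

```python
def build_suffix_array(tokens):
    """Return suffix start positions, ordered by the suffix each one begins."""
    return sorted(range(len(tokens)), key=lambda i: tokens[i:])


def find_occurrences(tokens, suffix_array, query):
    """Return the start positions where `query` appears verbatim in `tokens`."""
    n = len(query)
    lo, hi = 0, len(suffix_array)
    # Binary search for the first suffix whose first n tokens are >= query.
    while lo < hi:
        mid = (lo + hi) // 2
        start = suffix_array[mid]
        if tokens[start:start + n] < query:
            lo = mid + 1
        else:
            hi = mid
    # All matches are adjacent in sorted order, so scan forward from there.
    hits = []
    while lo < len(suffix_array):
        start = suffix_array[lo]
        if tokens[start:start + n] != query:
            break
        hits.append(start)
        lo += 1
    return sorted(hits)


# Hypothetical "training corpus" and model output fragments.
corpus = "the cat sat on the mat and the cat ran".split()
suffix_array = build_suffix_array(corpus)

print(find_occurrences(corpus, suffix_array, ["the", "cat"]))
print(find_occurrences(corpus, suffix_array, ["the", "dog"]))
```

The sort is paid for once, ahead of time; each later lookup needs only a logarithmic number of comparisons, which is what makes the approach plausible for corpora far too large to scan per query.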

Radical Reads is edited by Ebin Tomy.