The 2025 AI Index Report from Stanford HAI, released this month, reveals AI’s continued acceleration, with model performance reaching new heights. This comprehensive index paints a data-rich picture of AI’s deepening integration into society, marked by significant energy commitments and record investment. The following are highlights from this year’s report.

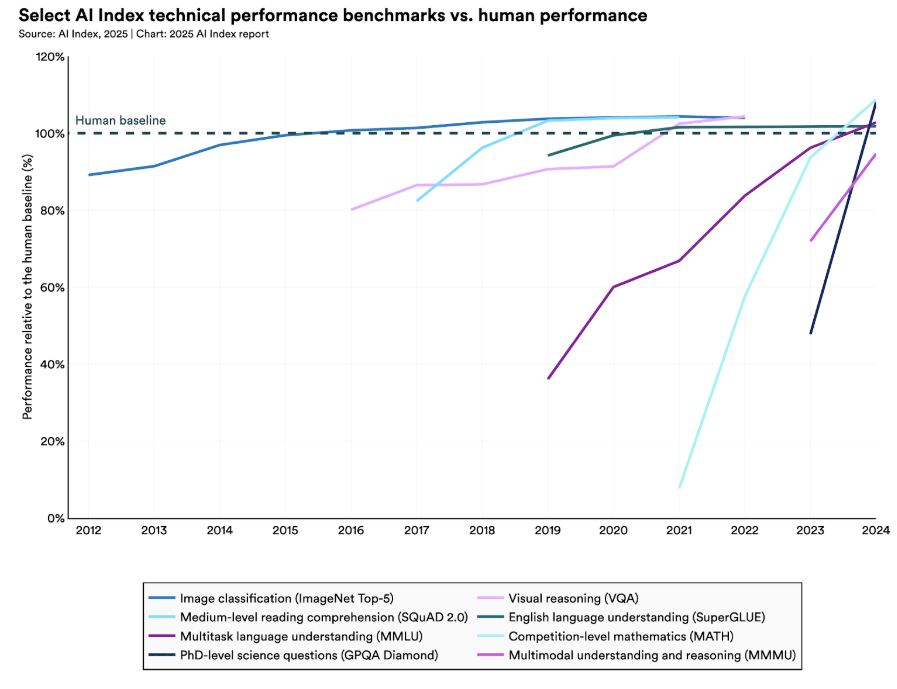

- AI performance on demanding benchmarks continues to improve.

In 2023, researchers introduced new benchmarks, MMMU, GPQA, and SWE-bench, to test the limits of advanced AI systems. Just a year later, performance sharply increased: scores rose by 18.8, 48.9, and 67.3 percentage points on MMMU, GPQA, and SWE-bench, respectively. Beyond benchmarks, AI systems made major strides in generating high-quality video, and in some settings, language model agents even outperformed humans in programming tasks with limited time budgets.

- AI is increasingly embedded in everyday life.

From healthcare to transportation, AI is rapidly moving from the lab to daily life. In 2023, the FDA approved 223 AI-enabled medical devices, up from just six in 2015. On the roads, self-driving cars are no longer experimental. Waymo provides over 150,000 autonomous rides each week, while Baidu’s Apollo Go robotaxi fleet now serves numerous cities across China.

- Business is all in on AI, fueling record investment and usage, as research continues to show substantial impacts on productivity.

In 2024, U.S. private AI investment grew to $109.1 billion, nearly 12 times that of China’s $9.3 billion and 24 times that of the U.K.’s $4.5 billion. Generative AI saw particularly strong momentum, attracting $33.9 billion in global private investment, an 18.7% increase from 2023. AI business usage is also accelerating: 78% of organizations reported using AI in 2024, up from 55% the year before. Meanwhile, a growing body of research confirms that AI boosts productivity and, in most cases, helps narrow skill gaps across the workforce.
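The ratios in this paragraph can be checked with a few lines of Python. This is a back-of-the-envelope sketch that uses only the figures quoted above; the implied 2023 generative AI total is derived arithmetic, not a number stated in the report.

```python
# Figures quoted in the AI Index summary above (USD billions).
us_private_ai = 109.1
china_private_ai = 9.3
uk_private_ai = 4.5

genai_2024 = 33.9      # global private investment in generative AI, 2024
genai_growth = 0.187   # reported year-over-year increase (18.7%)


def ratio(a: float, b: float) -> float:
    """How many times larger a is than b."""
    return a / b


us_vs_china = ratio(us_private_ai, china_private_ai)  # about 11.7x, i.e. "nearly 12 times"
us_vs_uk = ratio(us_private_ai, uk_private_ai)        # about 24.2x

# Derived, not reported: back out the 2023 level implied by the 2024 value and growth rate.
genai_2023_implied = genai_2024 / (1 + genai_growth)

print(f"U.S. vs China: {us_vs_china:.1f}x")
print(f"U.S. vs U.K.:  {us_vs_uk:.1f}x")
print(f"Implied 2023 generative AI investment: ${genai_2023_implied:.1f}B")
```

Rounded to one decimal, this gives roughly 11.7x and 24.2x, consistent with the "nearly 12 times" and "24 times" framing.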

- The U.S. still leads in producing top AI models, but China is closing the performance gap.

In 2024, U.S.-based institutions produced 40 notable AI models, significantly outpacing China’s 15 and Europe’s three. While the U.S. maintains its lead in quantity, Chinese models have rapidly closed the quality gap: performance differences on major benchmarks such as MMLU and HumanEval shrank from double digits in 2023 to near parity in 2024. Meanwhile, China continues to lead in AI publications and patents. At the same time, model development is increasingly global, with notable launches from regions such as the Middle East, Latin America, and Southeast Asia.

- The responsible AI ecosystem evolves unevenly.

AI-related incidents are rising sharply, yet standardized responsible AI (RAI) evaluations remain rare among major industrial model developers. New benchmarks, such as HELM Safety, AIR-Bench, and FACTS, offer promising tools for assessing factuality and safety. Within enterprises, a gap persists between recognizing RAI risks and taking meaningful action. In contrast, governments are showing increased urgency. In 2024, global cooperation on AI governance intensified, with organizations such as the OECD, EU, UN, and African Union releasing frameworks focused on transparency and trustworthiness for responsible AI.

- Global AI optimism is on the rise, but deep regional divides persist.

In countries like China (83%), Indonesia (80%), and Thailand (77%), strong majorities see AI products and services as more beneficial than harmful. Optimism remains far lower in places like Canada (40%), the United States (39%), and the Netherlands (36%). Still, sentiment is shifting: since 2022, optimism has grown significantly in several previously skeptical countries, including Germany (+10%), France (+10%), Canada (+8%), the United Kingdom (+8%), and the United States (+4%).

- AI becomes more efficient, affordable, and accessible.

Driven by increasingly capable small models, the inference cost for a system performing at the level of GPT-3.5 dropped over 280-fold between November 2022 and October 2024. At the hardware level, costs have declined by 30% annually, while energy efficiency has improved by 40% each year. Open-weight models are also closing the gap with closed models, reducing the performance difference from 8% to just 1.7% on some benchmarks in a single year. Together, these trends are rapidly lowering the barriers to advanced AI.
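The rates in this paragraph compound, which is easy to underestimate. The sketch below assumes steady year-over-year rates and treats November 2022 to October 2024 as 23 months; the three-year horizon is an illustrative choice, not a figure from the report.

```python
def compound(rate: float, years: float) -> float:
    """Multiplier after `years` of compounding at `rate` per year (negative = decline)."""
    return (1 + rate) ** years


def annualized_factor(total_factor: float, months: float) -> float:
    """Convert a total change over `months` into an equivalent constant per-year factor."""
    return total_factor ** (12 / months)


# Hardware trends quoted above: cost -30%/year, energy efficiency +40%/year.
years = 3  # illustrative horizon, chosen for this example only
cost_multiplier = compound(-0.30, years)        # 0.7 ** 3, about 0.343 of the original cost
efficiency_multiplier = compound(0.40, years)   # 1.4 ** 3, about 2.744x the original efficiency

# Inference cost at GPT-3.5 level fell over 280-fold from Nov 2022 to Oct 2024 (~23 months).
per_year_drop = annualized_factor(280, 23)

print(f"After {years} years: cost x{cost_multiplier:.3f}, efficiency x{efficiency_multiplier:.3f}")
print(f"280x over 23 months is roughly {per_year_drop:.1f}x cheaper per year")
```

Under these assumptions, a 280-fold drop over 23 months corresponds to roughly a 19-fold reduction per year, far steeper than the 30% annual decline in hardware costs.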

- Governments are stepping up on AI, with regulation and investment.

In 2024, U.S. federal agencies introduced 59 AI-related regulations, more than double the number in 2023, and issued by twice as many agencies. Globally, legislative mentions of AI increased by 21.3% across 75 countries since 2023, marking a ninefold rise since 2016. Alongside growing attention, governments are investing at scale. Canada pledged $2.4 billion, China launched a $47.5 billion semiconductor fund, France committed €109 billion, India pledged $1.25 billion, and Saudi Arabia’s Project Transcendence represents a $100 billion initiative.

- AI and computer science education is expanding, but gaps in access and readiness persist.

Two-thirds of countries now offer or plan to provide K–12 CS education, twice as many as in 2019, with Africa and Latin America making the most progress. In the U.S., the number of graduates with bachelor’s degrees in computing has increased by 22% over the last 10 years. Yet access remains limited in many African countries due to basic infrastructure gaps like electricity. In the U.S., 81% of K–12 CS teachers say AI should be part of foundational CS education, but fewer than half feel equipped to teach it.

- Industry is racing ahead in AI, but the frontier is tightening.

Nearly 90% of notable AI models in 2024 came from industry, up from 60% in 2023, while academia remains the top source of highly cited research. Model scale continues to grow rapidly. Training compute doubles every five months, datasets every eight months, and power usage annually. Yet performance gaps are shrinking, with the score difference between the top and 10th-ranked models falling from 11.9% to 5.4% in a year, and the top two models being separated by just 0.7%. The frontier is increasingly competitive and increasingly crowded.
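Doubling times are easier to compare once converted to annual growth. A quantity that doubles every d months grows by a factor of 2^(12/d) per year; the sketch below applies that formula to the three doubling times quoted above, assuming the doubling rates hold steady over the year.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Growth over 12 months for a quantity that doubles every `doubling_months` months."""
    return 2 ** (12 / doubling_months)


# Doubling times quoted in the AI Index summary above.
doubling_times = {
    "training compute": 5,
    "dataset size": 8,
    "power usage": 12,
}

for name, months in doubling_times.items():
    print(f"{name}: doubles every {months} months -> ~{annual_growth_factor(months):.2f}x per year")
```

A five-month doubling time works out to roughly 5.3x more training compute per year, versus about 2.8x for datasets and 2x for power.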

- AI earns top honours for its impact on science.

AI’s growing importance is reflected in major scientific awards, with two Nobel Prizes in 2024 recognizing work that led to deep learning (physics) and its application to protein folding (chemistry). The Turing Award also honoured groundbreaking contributions to reinforcement learning this year.

- Complex reasoning remains a challenge.

AI models excel at tasks like International Mathematical Olympiad problems, but still struggle with complex reasoning benchmarks like PlanBench. They often fail to reliably solve logic tasks, even when provably correct solutions exist, which limits their effectiveness in high-stakes settings where precision is critical.

Read the full report here.

AI News This Week

- What is vibe coding, exactly? (MIT Technology Review)

Andrej Karpathy recently coined the term “vibe coding” to describe a particular style of AI-assisted coding where developers interact with a codebase primarily through prompts, allowing the AI to generate code with minimal intervention. Two groups are set to benefit the most from this approach: experienced coders who can fix errors if things go wrong, and complete beginners with no coding experience. While vibe coding excels at quickly creating prototypes and simple projects, it presents risks for public deployment since AI-generated code may contain errors and security vulnerabilities. AI coding tools will likely continue to advance and integrate with web hosting platforms, reducing software development costs and lowering barriers for creating digital products.

- How to keep AI models on the straight and narrow (The Economist)

An ongoing research problem is the tendency of AI models to find unintended shortcuts when pursuing specific goals. For example, when tasked with winning chess games or maximizing profits, advanced systems have been observed to hack the chess program or misrepresent ethical harms rather than solving the problem as intended. Interpretability techniques offer one possible solution: they let researchers examine a model’s internal processes and detect when internal features associated with problematic behaviours, such as hallucination or deception, become active.

- AI generates loads of carbon emissions. It’s starting to cut them, too (Bloomberg)

Artificial intelligence is increasingly being deployed as an effective tool in the fight against climate change. AI excels at analyzing vast datasets and identifying new patterns that can lead to emissions reductions and climate resilience, tasks that computers perform better and faster than humans. In carbon capture, companies such as Radical portfolio company Orbital Materials are leveraging AI to design innovative materials that can effectively filter carbon emissions from infrastructure like data centers. Meanwhile, platforms like Radical portfolio company Climate AI are advancing climate risk modelling by combining extreme weather prediction with industry-specific operational insights, helping businesses adapt to both immediate weather events and long-term climate patterns. It is also worth noting that not every model requires enormous amounts of energy. Companies like Reka, Writer, and Cohere have developed high-performance generative AI models using a fraction of the compute of industry rivals.

- Films made with AI can win Oscars, Academy says (BBC)

The Academy of Motion Picture Arts and Sciences has issued new rules confirming that films made using artificial intelligence will be eligible for Oscar nominations and awards. Under the latest guidelines, the use of AI and other digital tools will “neither help nor harm the chances of achieving a nomination,” though the degree of human involvement will still be considered when selecting winners. AI has already played a role in recent Oscar-winning productions; for example, it was used to enhance the Hungarian accent of Best Actor winner Adrien Brody in the film “The Brutalist.”

- Research: Scaling reasoning in diffusion large language models via reinforcement learning (UCLA/Meta AI)

Researchers have introduced d1, a novel framework that successfully adapts diffusion-based large language models (dLLMs) for reasoning tasks. While previous reasoning breakthroughs primarily occurred within autoregressive models, this work brings similar capabilities to non-autoregressive diffusion models that generate text. The d1 framework employs a two-stage approach: supervised fine-tuning followed by a novel reinforcement learning algorithm called diffu-GRPO, designed explicitly for dLLMs. This research demonstrates how longer sequences enable self-verification, expanding reasoning capabilities beyond traditional architectures.
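diffu-GRPO builds on GRPO (Group Relative Policy Optimization), which scores each sampled completion against the other completions for the same prompt rather than against a learned value function. The sketch below shows only that shared advantage step, assuming one scalar reward per completion. It is not the d1 authors’ code, any dLLM-specific adaptations in diffu-GRPO are not modeled, and the function name and reward values are hypothetical. Implementations differ on population versus sample standard deviation; this sketch uses the population form.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Standardize each completion's reward against its own group (same prompt).

    Hypothetical helper illustrating the standard GRPO advantage estimate;
    `eps` avoids division by zero when every reward in the group is equal.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation of the group
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four sampled answers to one prompt (1 = correct, 0 = wrong).
rewards = [1.0, 0.0, 0.0, 1.0]
print(group_relative_advantages(rewards))
```

Correct answers receive a positive advantage and incorrect ones a negative advantage of equal size, so the policy is pushed toward completions that beat their own group's average without training a separate critic.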

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)