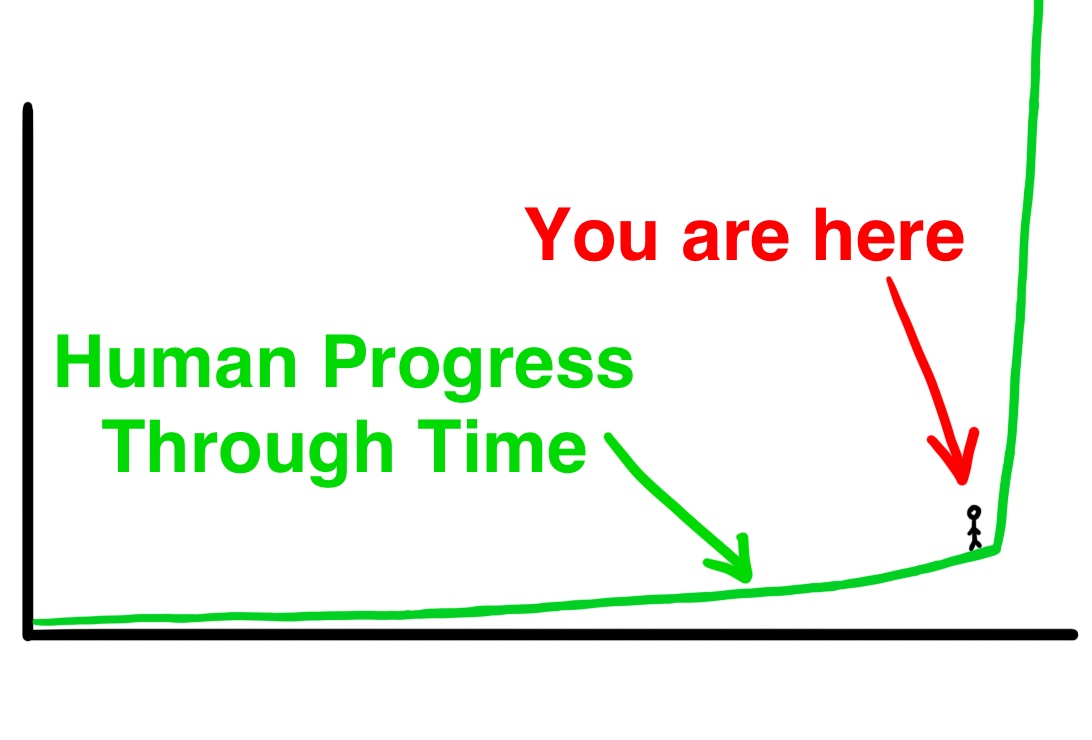

Rob Toews’ latest Forbes article examines AI for AI research: using AI systems to automate the work of AI research itself. Proponents argue this shift could speed up scientific discovery and transform industries, and that by automating AI research we could be on the brink of an “intelligence explosion” that reshapes the world.

The following is an edited excerpt.

Leopold Aschenbrenner’s “Situational Awareness” manifesto made waves when it was published this summer.

In this provocative essay, Aschenbrenner, a 22-year-old wunderkind and former OpenAI researcher, argues that artificial general intelligence (AGI) will be here by 2027, that artificial intelligence will consume 20% of all U.S. electricity by 2029, and that AI will unleash untold powers of destruction that will reshape the global geopolitical order within years.

Aschenbrenner’s startling thesis about exponentially accelerating AI progress rests on one core premise: that AI will soon become powerful enough to carry out AI research itself, leading to recursive self-improvement and runaway superintelligence.

The idea of an “intelligence explosion” fueled by self-improving AI is not new. From Nick Bostrom’s seminal 2014 book Superintelligence to the popular film Her, this concept has long figured prominently in discourse about the long-term future of AI.

Indeed, all the way back in 1965, Alan Turing’s close collaborator I.J. Good eloquently articulated this possibility: “Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion,’ and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make.”

Self-improving AI is an intellectually interesting concept but, even amid today’s AI hype, it retains a whiff of science fiction, or at the very least still feels abstract and hypothetical, akin to the idea of the singularity.

But, though few people have noticed yet, this concept is starting to become real. At the frontiers of AI science, researchers have begun making tangible progress toward building AI systems that can themselves build better AI systems.

These systems are not yet ready for prime time. But they may be here sooner than you think. If you are interested in the future of artificial intelligence, you should be paying attention.

Read the rest of Rob’s article here.

AI News This Week

-

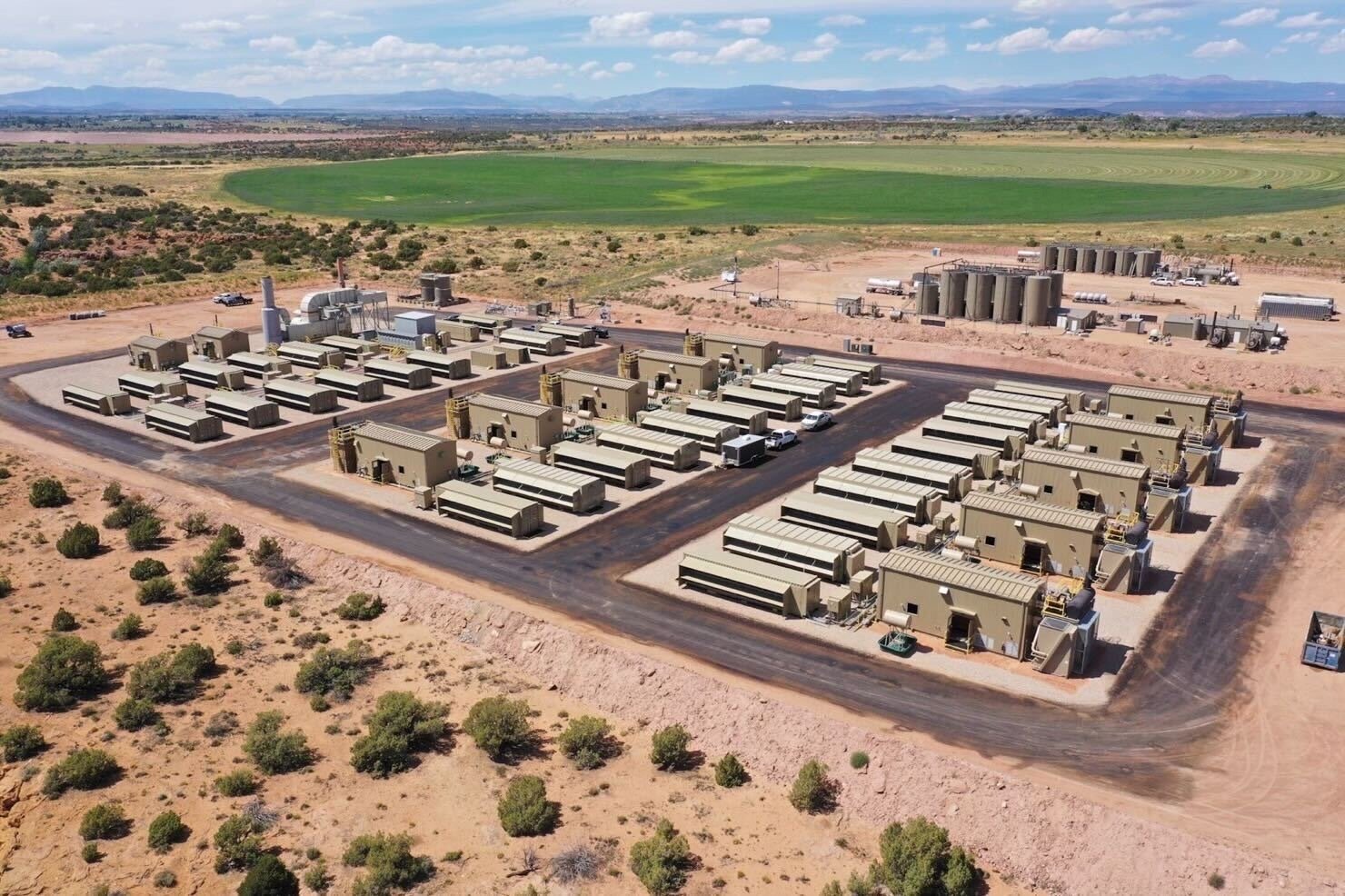

Tech giants see AI bets starting to pay off (Wall Street Journal)

This week, some of the world’s biggest tech companies showed how the tens of billions of dollars they have bet on the AI boom are starting to pay off. Amazon, Microsoft and Google parent Alphabet disclosed a total of $50.6 billion spent on property and equipment last quarter, compared with $30.5 billion in the same period last year. Much of that money went to data centers used to power AI. Revenue from cloud businesses at Amazon, Microsoft and Google reached a total of $62.9 billion last quarter. That figure is up over 22% from the same period last year and marked at least the fourth straight quarter in which their combined growth rate has increased. All three companies suggested their spending will go higher in the coming months, as did Meta Platforms, which invests in the infrastructure for its own AI applications.

-

Exclusive: Chinese researchers develop AI model for military use on back of Meta's Llama (Reuters)

Chinese research institutions linked to the People’s Liberation Army (PLA) have adapted Meta’s open-source Llama AI model for potential military applications, according to academic papers and analysts. Researchers used an early Llama 13B model, adding their own parameters to create a military-focused AI tool, ChatBIT, designed for intelligence gathering and operational decision-making. This marks the first substantial evidence of PLA experts systematically studying and adapting open-source LLMs, particularly Meta’s, for defense purposes. Although Meta prohibits military use of its models, its ability to enforce this policy is limited because Llama is publicly available.

-

What if AI is actually good for Hollywood? (New York Times)

Hollywood creatives increasingly view AI as an essential tool for moviemaking. Directors Anthony and Joe Russo have hired a machine-learning expert to explore AI’s potential in their upcoming “Avengers” movies. Tom Hanks sees AI as a way to play roles he would traditionally be too old for, calling it just another tool for filmmakers. Because AI speeds up idea generation, artists can fail fast and cheaply. Directors such as Bennett Miller note that AI can drive creative exploration without replacing human talent, which could help Hollywood adapt to new challenges and opportunities while preserving the art of storytelling.

-

AI tools show promise in supporting dementia patients and caregivers (BBC)

AI technology is emerging as a valuable tool for people living with dementia, offering solutions for daily routines and independence. The decade-long Florence Project is developing AI-enhanced communication devices, including a smart diary, music player, and photo screen that can be remotely managed by caregivers. These tools use AI to build personalized knowledge banks for each user, adapting content as conditions change. In Japan, researchers are integrating ChatGPT into Hiro-chan, a therapeutic companion robot designed to reduce stress in dementia patients. Early adopters like Pete Middleton, a former programmer diagnosed with dementia, see AI’s potential for maintaining independence and dignity.

-

Research: General instruction following with thought generation (Meta FAIR/UC Berkeley/NYU)

Researchers have developed Thought Preference Optimization (TPO), which teaches language models to “think” before responding to any type of instruction. Unlike prior approaches focused on math and logic reasoning, this method improves performance across diverse tasks, including creative writing and marketing. The system trains LLMs iteratively: the model generates several thought-response pairs per instruction, a judge model scores only the responses, and the highest- and lowest-scoring outputs become preference pairs for training, so the model learns which thoughts lead to better answers. Testing shows strong performance on AlpacaEval (52.5% win rate) and Arena-Hard (37.3% win rate). This research suggests explicit thinking can improve AI performance across a broad range of tasks, not only reasoning-heavy ones.
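For readers who want a concrete picture of the training loop described above, here is a minimal Python sketch of one TPO-style data-collection round. It is an illustration under stated assumptions, not the authors’ code: `Sample`, `build_preference_pair`, `collect_pairs`, and `toy_judge` are hypothetical names, the toy judge (which simply rewards longer answers) stands in for an LLM judge, and the preference-optimization step that would consume the pairs (DPO in the paper) is not shown.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Sample:
    thought: str   # hidden "thinking" text, never shown to the judge
    response: str  # the part a user would actually see


# Hypothetical judge signature: (instruction, response) -> score.
Judge = Callable[[str, str], float]


def build_preference_pair(
    instruction: str, samples: List[Sample], judge: Judge
) -> Optional[Tuple[Sample, Sample]]:
    """Return (chosen, rejected) for one instruction, or None if no signal.

    Only the response is scored. The thought stays attached to its response,
    so training on the pair indirectly rewards thoughts that led to better answers.
    """
    if len(samples) < 2:
        return None
    scores = [judge(instruction, s.response) for s in samples]
    best = max(range(len(samples)), key=lambda i: scores[i])
    worst = min(range(len(samples)), key=lambda i: scores[i])
    if scores[best] == scores[worst]:
        return None  # all responses tied, so the pair carries no preference
    return samples[best], samples[worst]


def collect_pairs(
    instructions: List[str],
    generate: Callable[[str], Sample],  # hypothetical sampler: one thought+response
    judge: Judge,
    k: int = 4,
) -> List[Tuple[str, Sample, Sample]]:
    """Sample k outputs per instruction and keep one (chosen, rejected) pair each."""
    pairs = []
    for instruction in instructions:
        samples = [generate(instruction) for _ in range(k)]
        pair = build_preference_pair(instruction, samples, judge)
        if pair is not None:
            pairs.append((instruction, *pair))
    return pairs


def toy_judge(instruction: str, response: str) -> float:
    # Hypothetical stand-in for an LLM judge: ignores the instruction
    # and scores a response by its word count.
    return float(len(response.split()))


if __name__ == "__main__":
    samples = [
        Sample("list ideas first", "Three taglines: A, B, C."),
        Sample("just answer", "A."),
        Sample("think about audience", "Two taglines: A, B."),
    ]
    chosen, rejected = build_preference_pair("Write taglines", samples, toy_judge)
    print("chosen thought:", chosen.thought)
    print("rejected thought:", rejected.thought)
```

Skipping tied groups is a design choice in this sketch: a pair whose two sides scored the same gives the optimizer nothing to learn from.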

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)