Multimodal AI is artificial intelligence that combines multiple types, or modes, of data (such as text, video, audio, time series, and numeric data) to make more accurate determinations, draw insightful conclusions, or produce more precise predictions about the real world. By leveraging the unique strengths of each data type, researchers and developers can build AI systems with a deeper level of perception and cognition. This integration enables machines to process and interpret data in a more human-like manner, transforming the way we interact with technology. This week we are featuring an excerpt from a high-level introduction to multimodal AI by James Le, Twelve Labs’ Head of Developer Experience. Twelve Labs is a Radical Ventures portfolio company and a leading developer of multimodal models. The company’s products can understand, search, and summarize videos.

The world surrounding us involves multiple modalities – we see objects, hear sounds, feel textures, smell odors, and so on. Generally, a modality refers to how something happens or is experienced. A research problem is therefore characterized as multimodal when it includes multiple modalities.

Multimodal AI is a rapidly evolving field that focuses on understanding and leveraging multiple modalities to build more comprehensive and accurate AI models. Recent advances in foundation models, such as large pre-trained language models, have enabled researchers to tackle more complex and sophisticated problems by combining modalities. These models are capable of multimodal representation learning across a wide range of modalities, including image, text, speech, and video. As a result, multimodal AI is being used for tasks ranging from visual question answering and text-to-image generation to video understanding and robot navigation.

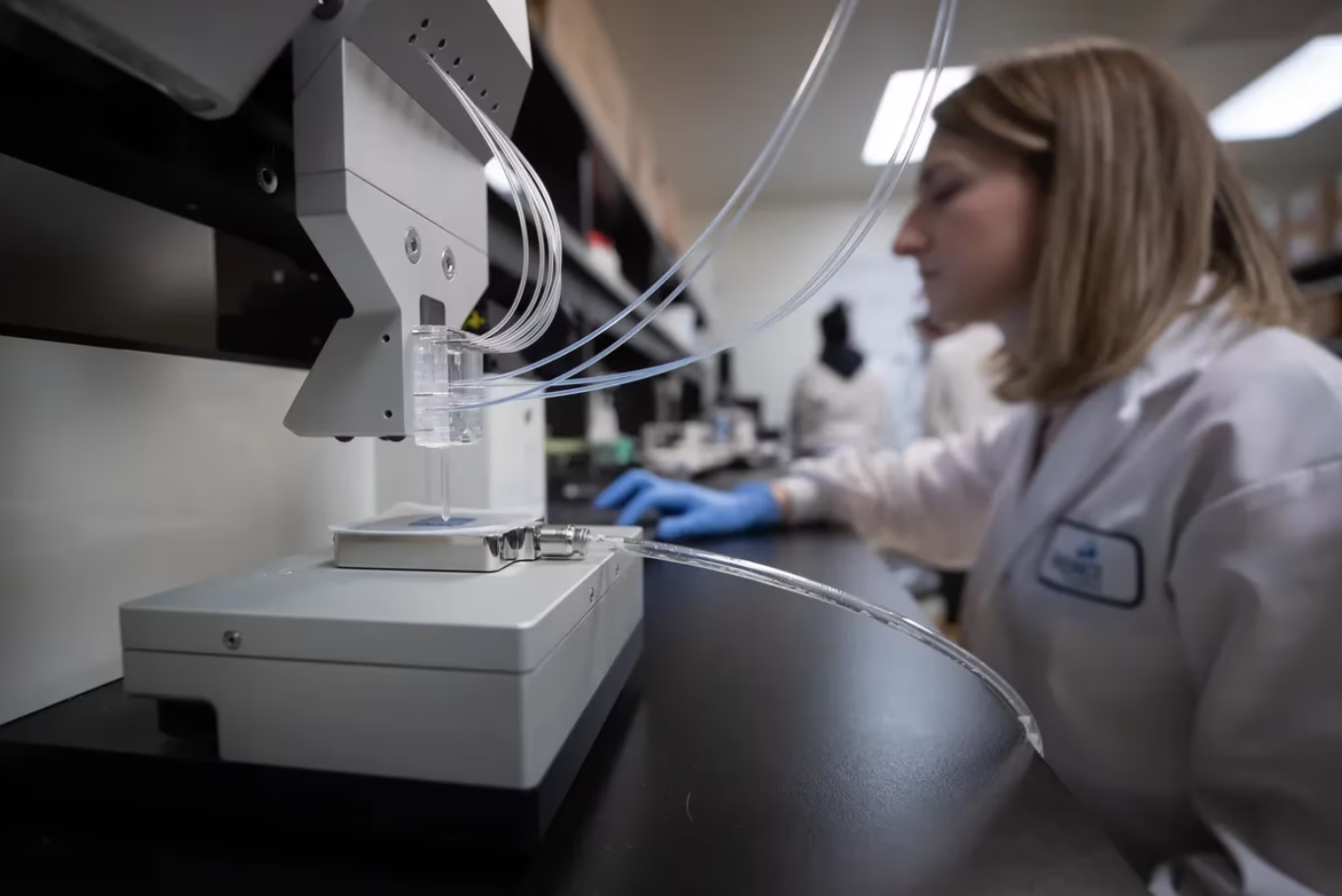

Multimodal AI is being applied across many industries. In autonomous vehicles, it can help improve safety and navigation by integrating multiple sensor modalities, such as cameras, lidar, and radar. Healthcare is another area where multimodal AI is making advances: it assists in the diagnosis and treatment of diseases by analyzing medical images, text data, and patient records together. Combining modalities can provide a more comprehensive and accurate assessment of patient health.
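
To make the sensor-fusion idea concrete, here is a minimal late-fusion sketch in Python. It assumes each modality (camera, lidar, radar) already produces its own class probabilities, and combines them with a normalized weighted average. The function name `late_fusion`, the modality weights, and the scores are hypothetical. Late fusion is only one of several strategies (others fuse raw inputs or learned embeddings earlier in the model), and this is not a description of any particular vehicle's software.

```python
from typing import Dict, List


def late_fusion(scores: Dict[str, List[float]], weights: Dict[str, float]) -> List[float]:
    """Fuse per-modality class probabilities with a normalized weighted average.

    scores:  modality name -> probability for each class (all lists the same length)
    weights: modality name -> non-negative trust weight for that modality (hypothetical values)
    """
    n_classes = len(next(iter(scores.values())))
    if any(len(p) != n_classes for p in scores.values()):
        raise ValueError("every modality must score the same set of classes")

    # Normalize weights over the modalities present, so that if each modality's
    # probabilities sum to 1, the fused probabilities also sum to 1.
    total = sum(weights[m] for m in scores)
    fused = [0.0] * n_classes
    for modality, probs in scores.items():
        w = weights[modality] / total
        for i, p in enumerate(probs):
            fused[i] += w * p
    return fused


if __name__ == "__main__":
    # Hypothetical scores for two classes: [pedestrian, no pedestrian].
    scores = {
        "camera": [0.9, 0.1],
        "lidar": [0.6, 0.4],
        "radar": [0.3, 0.7],
    }
    weights = {"camera": 0.5, "lidar": 0.3, "radar": 0.2}
    print(late_fusion(scores, weights))  # roughly [0.69, 0.31]
```

In a real system the weights might be tuned on validation data or replaced by a learned fusion layer; one appeal of this design is that a modality that is unreliable in some conditions can be down-weighted without retraining the others.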

In the entertainment industry, multimodal AI is used to create more immersive and interactive user experiences. For example, virtual and augmented reality technologies rely heavily on multimodal AI to create seamless interactions between users and their digital environments. Additionally, multimodal AI is used to generate more engaging and personalized content, such as custom recommendations and dynamically generated video content.

As researchers continue to explore the potential of multimodal AI, we will likely see even more creative and impactful applications emerge.

AI News This Week

-

Synthetic data could be better than real data (Nature)

Machine-generated data sets have the potential to improve privacy and representation in artificial intelligence. Creating good synthetic data that both preserves privacy and reflects diversity, and that is made widely available, will improve the performance of AI and expand its uses. Improvements in synthetic data may democratize AI research, which to date has been dominated by companies with access to the largest data sets.

-

Startup Sees AI as a Safer Way to Train AI in Cars (Bloomberg)

The autonomous vehicle industry is estimated to have raised $100 billion. Some startups have folded, and auto giants like Ford Motor Co. have abandoned their efforts. Waabi, a Radical Ventures portfolio company, is avoiding the capital-intensive dynamics of the industry. According to its CEO and co-founder, Raquel Urtasun, Waabi’s application of generative AI to create synthetic training environments enabled the company to build its self-driving system for the equivalent of 5% of the cost of its competitors.

-

AI could be just the productivity the economy needs (Barron's; subscription may be required)

AI accelerates innovation by making research and the development of new ideas more efficient, boosting not just the level of productivity but also its rate of growth. For instance, researchers found that AI-based systems could help predict future scientific discoveries, dramatically accelerating science in some domains. In turn, such discoveries can spark the kinds of practical innovations that fuel faster productivity growth. Even small increases in the rate of productivity improvement compound over time. Current estimates predict that economic output will be one-third higher in 20 years, but the direct impact of generative AI, combined with its potential impact on future growth, could instead lead to a near doubling of output over this period, with even higher growth rates in later years.
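
The compounding point can be made concrete with a quick calculation. Assuming a constant annual growth rate g over 20 years, output one-third higher means (1+g)^20 = 4/3, while a near doubling means (1+g)^20 = 2. The implied rates below are illustrative arithmetic derived from those two figures under that constant-growth assumption; they are not numbers reported in the original piece.

```latex
\begin{aligned}
(1+g)^{20} = \tfrac{4}{3} \;&\Rightarrow\; g = \left(\tfrac{4}{3}\right)^{1/20} - 1 \approx 1.45\% \text{ per year} \\
(1+g)^{20} = 2 \;&\Rightarrow\; g = 2^{1/20} - 1 \approx 3.53\% \text{ per year}
\end{aligned}
```

Under this simplification, the gap between the two scenarios corresponds to roughly two extra percentage points of growth each year, sustained for two decades.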

-

Human toddlers are inspiring new approaches to robot learning (TechCrunch)

Researchers at Carnegie Mellon University are using a combination of active and passive learning techniques to train robots. RoboAgent, a joint effort between Carnegie Mellon University and Meta AI, can learn quickly and efficiently by passively observing tasks being performed online, coupled with active learning through remote teleoperation of the robot. Because it can transfer what it learns in one environment to another, RoboAgent could make robots more useful in unstructured settings such as homes, hospitals, and other public spaces.

-

AI algorithm discovers ‘potentially hazardous’ asteroid 600 feet wide in a 1st for astronomy (Space.com)

A new artificial intelligence algorithm programmed to hunt for potentially dangerous near-Earth asteroids has discovered its first space rock. The roughly 180-metre-wide asteroid has received the designation 2022 SF289 and is expected to pass within 225,000 kilometres of Earth. Although that distance is shorter than the distance between Earth and the Moon, the asteroid is not expected to hit Earth in the foreseeable future. Previously, searching for potentially hazardous asteroids involved imaging parts of the sky at least four times a night. Astronomers would spot an asteroid by detecting a point of light moving in an unambiguous straight line across the series of images. The new AI-powered system can make a determination from just two images, speeding up the asteroid detection process.

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)