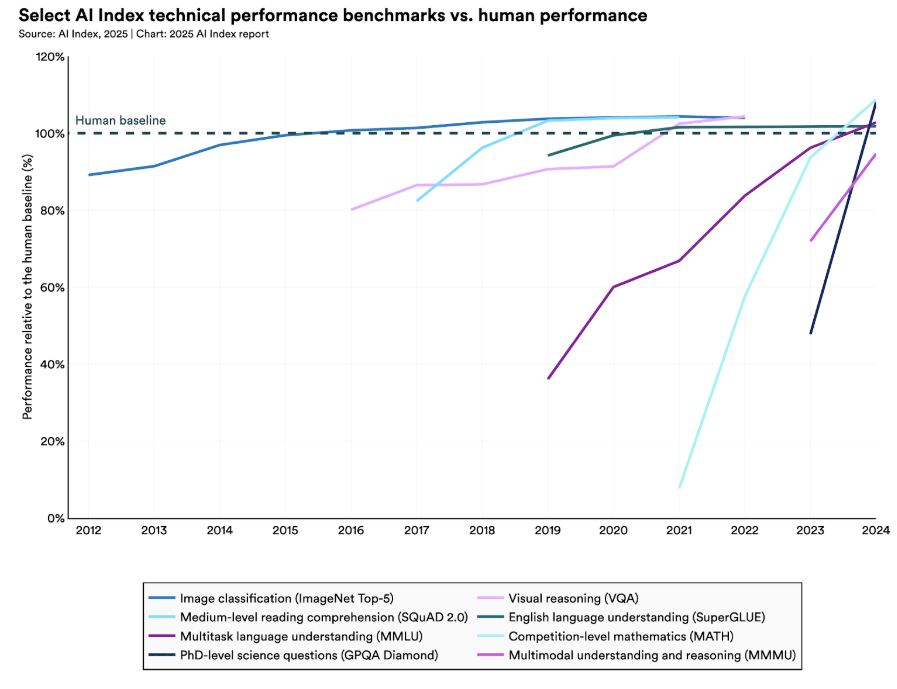

AI is now part of every executive’s vocabulary. The accompanying image captures the rise in mentions of AI during earnings calls since the launch of ChatGPT. This trend extends beyond the big tech giants to companies operating across verticals, including healthcare and pharma.

This is no surprise, given that businesses everywhere are leveraging foundation models. The most popular are large language models (e.g., GPT-3, GPT-4). A recent example in healthcare is Epic integrating GPT-4 into its EHR. Epic holds the largest share of the U.S. acute care hospital market and hopes to increase provider productivity and reduce the administrative burden on clinicians through this partnership. Another recent example is Google’s medical large language model, Med-PaLM, which is being released to a select group of Google Cloud customers to explore use cases as they investigate safe and responsible ways to use the technology.

But first, what is a Foundation Model?

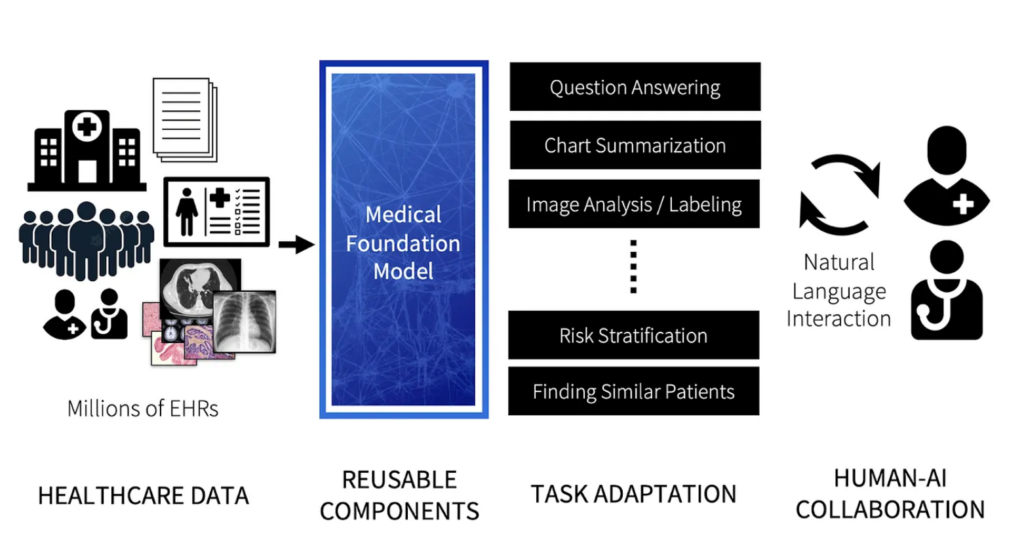

The term foundation model was coined by Stanford HAI in 2021 to describe a growing number of models trained using self-supervised learning on large amounts of broad data with the ability to adapt to a wide range of downstream tasks.
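To make "self-supervised learning" concrete, here is a minimal sketch of how training examples can be derived from unlabeled text with no human annotation: each run of words becomes the input, and the word that follows becomes the label. The function name `next_token_pairs`, the whitespace tokenizer, and the sample sentence are all hypothetical choices for illustration; real foundation models use learned subword tokenizers and far larger corpora.

```python
# Hypothetical illustration of next-token self-supervision:
# the "labels" are taken from the text itself, not from human annotators.
def next_token_pairs(text, context_size=3):
    """Return (context, target) pairs built from unlabeled text."""
    tokens = text.split()  # naive whitespace tokenizer, for illustration only
    pairs = []
    for i in range(context_size, len(tokens)):
        context = tokens[i - context_size:i]  # the preceding words
        target = tokens[i]                    # the word the model should predict
        pairs.append((context, target))
    return pairs


if __name__ == "__main__":
    # Made-up sample sentence, not real patient data.
    note = "patient reports mild chest pain after exercise"
    for context, target in next_token_pairs(note):
        print(context, "->", target)
```

Because the targets come for free from the raw text, this style of training can scale to very large, broad datasets, which is what later allows a single model to be adapted to many downstream tasks.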

This two-minute explainer video released by Stanford HAI summarizes foundation models. It also makes a clear call to action to ensure these models are built and deployed safely, which is of utmost relevance to the healthcare industry.

Foundation Models: A Tool in the Toolkit for Health AI Researchers & Engineers

In theory, multi-modal generalized healthcare models have the potential to significantly reduce the cost and time associated with developing and deploying models for singular clinical or administrative tasks, specifically costs associated with data acquisition, labeling, and pipelining.

Healthcare is ideally suited to the development of foundation models. The scale of multi-modal healthcare data keeps multiplying. We are approaching a point where digital data outpaces the data locked in healthcare organization silos. Unlocking this data has been challenging, and legacy systems make it hard to use that data effectively for healthcare decisions. As a result, collecting and using healthcare data to train algorithms is costly and time-consuming, which slows the time to market for healthcare AI algorithms.

This piece details the various other benefits of foundation models in healthcare.

Healthcare, in theory, is ideally suited to the development of foundation models. These models become part of a toolkit to develop task-specific healthcare models.

How Foundation Models Can Advance AI in Healthcare, published by Stanford HAI

How can Foundation Models be Leveraged for Administrative and Clinical Tasks?

The lowest-hanging fruit is general and administrative tasks. These tasks have created massive inefficiencies in the healthcare system and tolerate a higher margin for error than clinical decisions. We are seeing the development of models that support downstream tasks associated with note-taking & summarization (e.g., DeepScribe, Abridge, Tali, Ambience), patient data identification & redaction (e.g., Science IO, Mendel), billing & revenue cycle management (e.g., Fathom, Nym, Semantic Health), and administrative navigation & patient engagement (e.g., Hippocratic AI) in multi-stakeholder healthcare systems.

Clinical foundation models may take longer to reach the mainstream and will rightfully be held to a higher standard of safety and reliability. Multi-modal generalist medical foundation models promise to deliver on multiple target tasks but will come up against a few challenges and will be leveraged differently. Clinical AI that addresses varied human health conditions has a very low to negligible margin for error. Stanford HAI recently called for a Medical HELM evaluation framework that builds upon the HELM project, which improved transparency in the evaluation of large language models. When medical foundation models become safe to use, they will be fine-tuned with small, high-quality, unbiased, task-specific ‘designer datasets’ to support clinical decision-making accurately.

Foundation models are being deployed at scale and revolutionizing everything we do. While the adoption of foundation models in healthcare will be slower relative to other sectors, it will usher in a new era of health AI deployments by making the process more efficient.

Many questions remain, especially regarding access to these models and their safety. Stay tuned as we interview experts and cover more in this space.

Thank you to Charles Onu from Ubenwa, who shared his perspective on leveraging foundation models in his toolkit for building task-specific healthcare models.

Radical Reads is edited by Ebin Tomy.