“Like kids to a soccer ball!” That was how David Duvenaud, Canada Research Chair in Generative Models and Radical Scientific Advisor, described the interest in generative AI at this year’s NeurIPS conference. The largest AI research conference was held earlier this month, and one thing was clear to everyone attending: generative AI and large language models are dominating AI research.

Since GPT-3’s introduction in 2020, the transformer architecture that underpins the model has been in the spotlight (one of the inventors of transformers is Aidan Gomez, the Co-Founder and CEO of Radical portfolio company Cohere). Transformers were initially applied to word recognition and text generation, and their versatility has researchers asking what else they can do. This was underlined by the launch of ChatGPT, which was released in the midst of NeurIPS and quickly dominated hallway conversations and Twitter threads. Multimodality also took centre stage this year, showcased by DeepMind’s Flamingo, a visual language model (VLM) that aims to tackle multiple tasks.

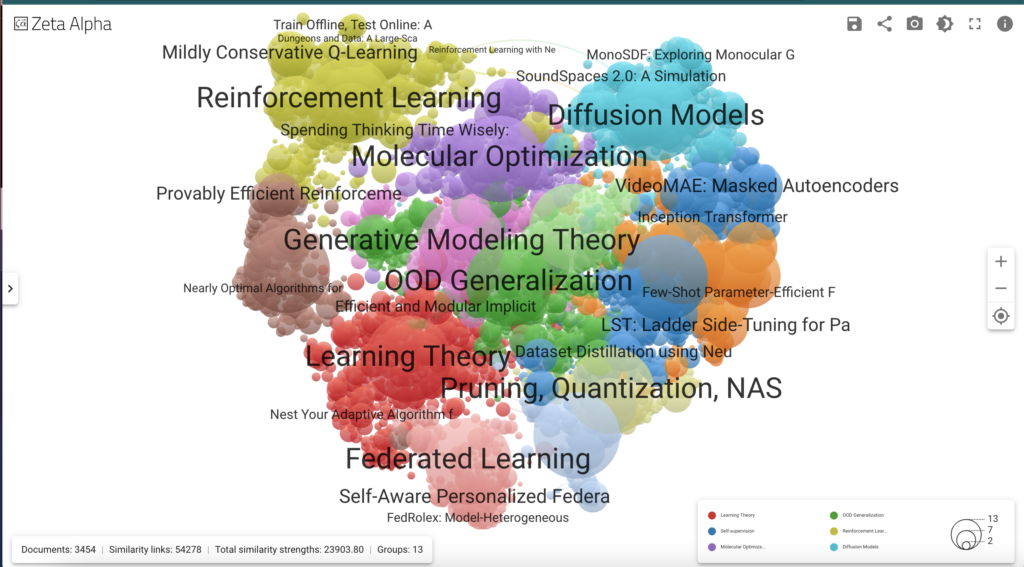

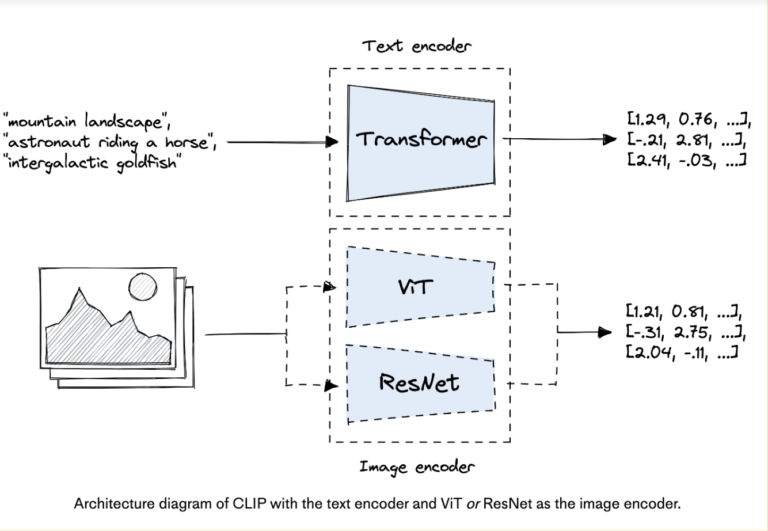

Many papers submitted to NeurIPS iterated on CLIP, which pairs a 12-layer text transformer for building text embeddings with a ResNet or vision transformer for building image embeddings. Transformers were also showcased as an active area of research in reinforcement learning, one of NeurIPS’ longest-running hot topics.

Image Source: Pinecone, “Embedding Methods for Image Search”

Text-to-image generation models also grabbed attention at this year’s conference, as the pace of commercial adoption continues to surprise researchers. Diffusion models went from research idea to mainstream usage in two years, outpacing the three years it took transformers to find equivalent traction. The conference highlighted how this modelling technique has spread beyond 2D still image generation and is being applied to 3D scene synthesis and video generation.

The time between AI research breakthroughs and mainstream adoption continues to collapse, effectively turning NeurIPS into a showcase of technologies that may quickly find commercial application.

In its Emerging Technologies and Trends Impact Radar for 2022 released last week, Gartner offered a preview of what these new technologies may look like. The report predicts that by 2025, generative AI will produce 10 percent of all data (currently less than 1 percent) and 50 percent of drug discovery and development initiatives will be powered by generative AI. By 2027, Gartner predicts that 30 percent of manufacturers will use generative AI to enhance their product development effectiveness.

At Radical, we are longtime believers and investors in generative AI. In fact, the invention of transformers in 2017, and the belief that they would revolutionize industries, helped catalyze our founders’ decision to sell their deep learning company, Layer 6 AI, and build Radical Ventures with a focus on AI.

AI News This Week

- What we got right and wrong in our 2022 AI predictions (Forbes)

Radical Ventures Partner Rob Toews published a list of 10 predictions about the world of artificial intelligence in 2022. Each year he revisits these predictions to see how things actually played out. This year, 7 of 10 were right (including two “right-ish” predictions). Correct predictions included the dominance of language AI and Toronto establishing itself as the most important AI hub in the world outside of Silicon Valley and China.

- Cohere AI hires YouTube’s CFO Martin Kon to be its new COO (Fortune)

Radical Ventures portfolio company Cohere announced Martin Kon as its new President & COO. Kon was previously CFO of Google’s YouTube division, where he led strategy, finance, business operations and commercial data analytics and reported directly to YouTube’s CEO and Alphabet’s CFO. He brings extensive experience delivering innovative products to market, optimizing existing ones, and creating new revenue streams. In an interview with Fortune, Kon says he sees natural language processing (NLP) as “the next disruption and transformation in how we, as humans, inform and entertain ourselves.” He points out that 90% of enterprise data is unstructured and that NLP is the key to unlocking it. The problem, he says, is that building a large language model of your own requires very expensive supercomputing infrastructure. And while Google has that kind of infrastructure, much of it is focused on using AI to generate improvements in Google’s existing product suite, not on rolling out products aimed at enterprise customers to help them transform their own businesses. Cohere, on the other hand, is all about bringing Google-quality AI to the masses.

- Want to experience the future of search? Go to You.com (Forbes)

Google challenger and Radical portfolio company You.com announced an open platform for search, launching a new stage in the evolution of how we use the Internet. Introduced a year ago with the goal of giving users control over their search experience, You.com has now reached well over 1 million actively searching users, with the number of searches growing over 400% in the last six months. The future of search has arrived in the form of an open dashboard for managing our digital life. “Gone are the days when one monolith controls what we see online and sells our data while making trillions of dollars and hoarding the profit for themselves,” noted You’s co-founder and CEO Richard Socher.

- Inside SpaceX’s lucrative new government satellite program (The Information – subscription may be required)

Starshield was recently unveiled by SpaceX as a business to build and launch a secured satellite network for government entities. Former SpaceX engineers Jim Martz and Brandi Sippel started Starshield in 2018 with the idea of making the satellite technology developed for Starlink available to commercial and government customers to host their own payloads. They have since joined Radical portfolio company Muon Space, adding to a team that includes some of the world’s foremost experts in Earth remote sensing science, with the mission to power climate action through next-generation Earth observation.

-

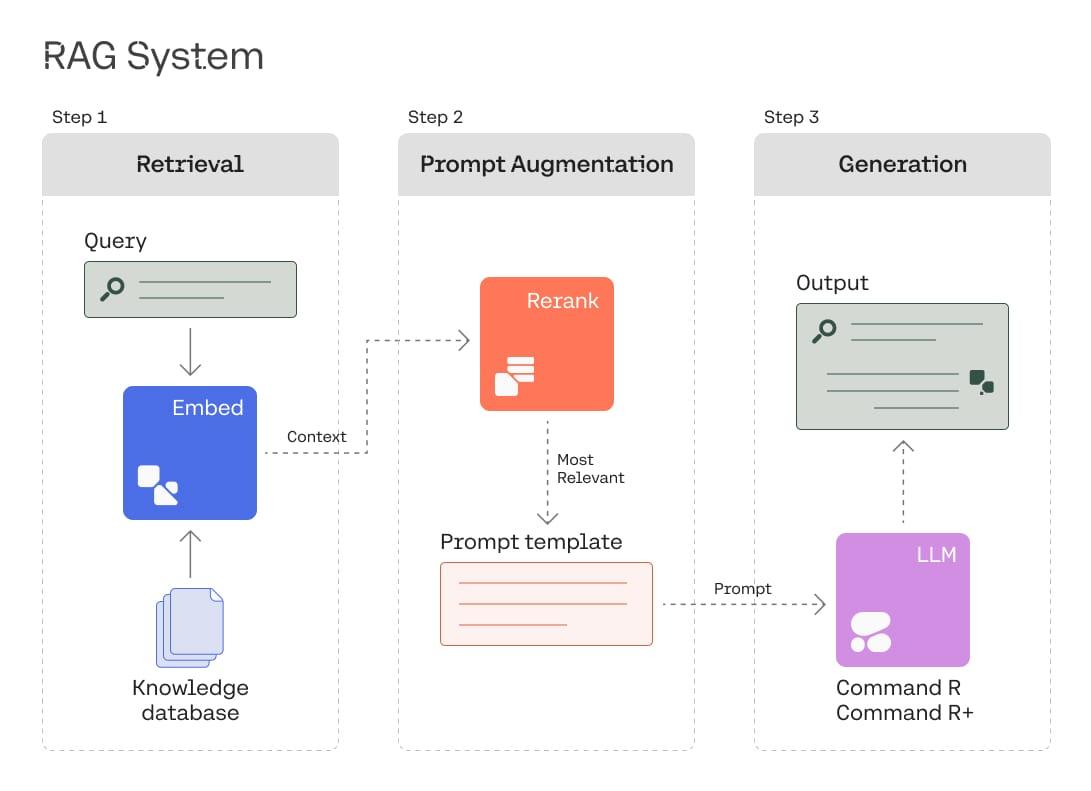

Semantic search + ChatGPT (Medium: George Sivulka)

ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers because there is currently no source of truth. Hebbia, a Radical Ventures portfolio company, is building a semantic search layer that gives generative AI a “memory.” Semantic search “readers” can feed generative “writers” to address these shortcomings of generative models, making them more accurate (priming models with relevant primary sources), more trustworthy (citing sources behind every generation), and more easily updated (updating an index can happen almost instantaneously with no need to retrain a billion-parameter model).
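For readers who want to see the “reader feeds writer” idea in miniature, here is a rough Python sketch. It is not Hebbia’s implementation: the function names (`embed`, `retrieve`, `build_prompt`) and the sample index are hypothetical, and a simple word-count similarity stands in for a real semantic embedding model. The “writer” step is left as a prompt string rather than a call to any particular model.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for a semantic embedding: lowercase word counts (assumption)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, index, k=2):
    """The 'reader': return the k (source_id, text) pairs most similar to the query."""
    q = embed(query)
    ranked = sorted(index.items(), key=lambda item: cosine(q, embed(item[1])), reverse=True)
    return ranked[:k]


def build_prompt(query, sources):
    """Prime the 'writer' with retrieved sources, each tagged with an id it can cite."""
    cited = "\n".join(f"[{source_id}] {text}" for source_id, text in sources)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"{cited}\n"
        f"Question: {query}"
    )


# Hypothetical index of primary sources.
index = {
    "doc1": "NeurIPS is a large AI research conference.",
    "doc2": "Diffusion models generate images from text prompts.",
}

# Updating the index is a dictionary write; no model is retrained.
index["doc3"] = "ChatGPT was released during NeurIPS."

question = "When was ChatGPT released?"
prompt = build_prompt(question, retrieve(question, index, k=1))
```

The resulting `prompt` would then be passed to whichever generative model is in use. Because the sources carry ids, the generated answer can point back to them, and adding a document to the index changes what the writer sees on the very next query.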

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)