This week, we are republishing a post from Radical Partner Molly Welch’s Substack, where she shares her thoughts on the key themes defining AI in 2026.

Unlike 2025, 2026 has no numerically significant properties. It’s not a perfect square, cube, or highly composite number. But don’t let that fool you. More than three years after ChatGPT’s launch and uncomfortably close to the much-heralded 2027, we’re no longer in the first innings of language model maturation or AI adoption. 2026 will be a “make or break” year for the AI ecosystem. And if the first few weeks are any indication, the year is going to be quite the ride.

Here are the themes I’m watching in 2026:

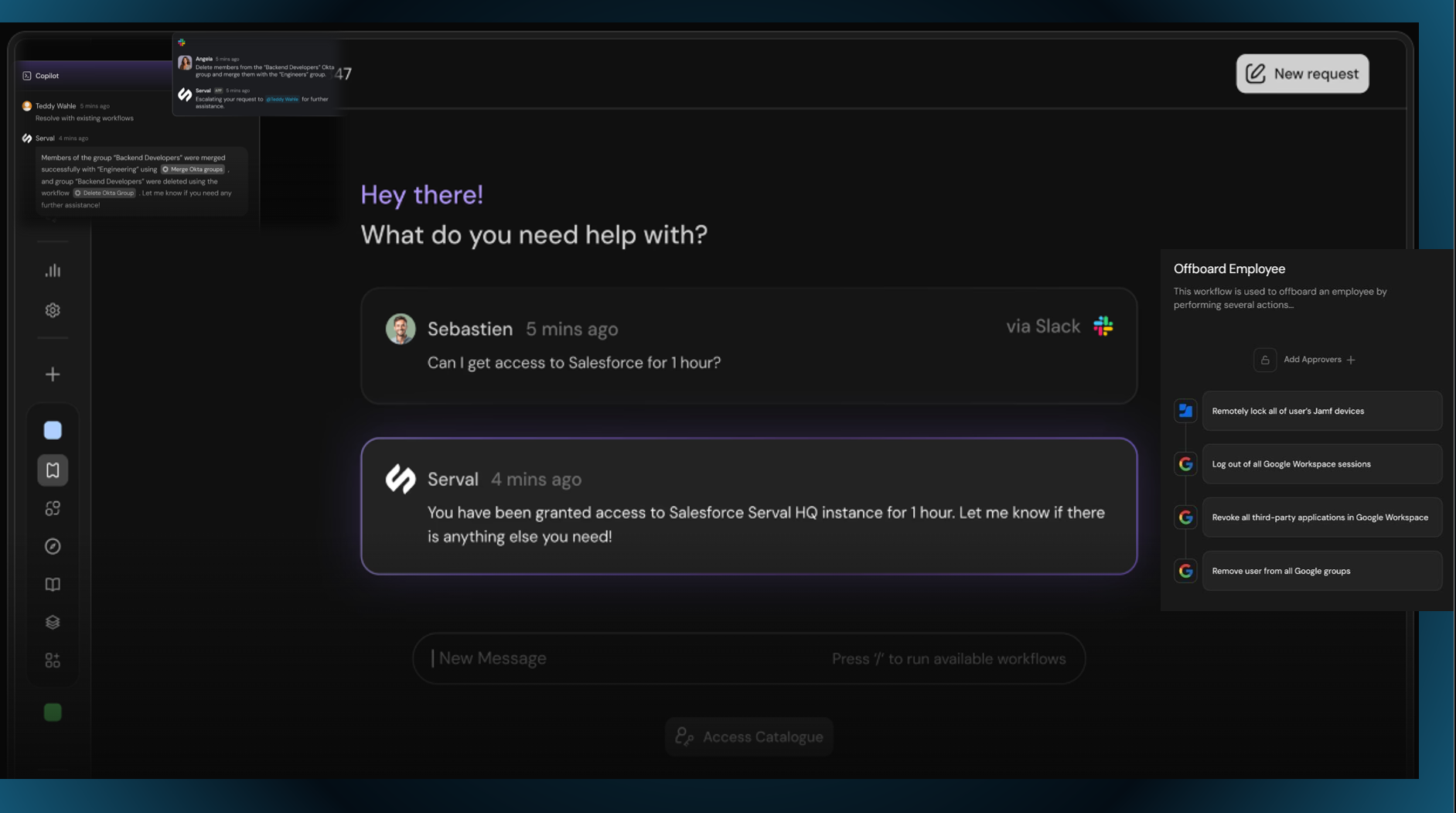

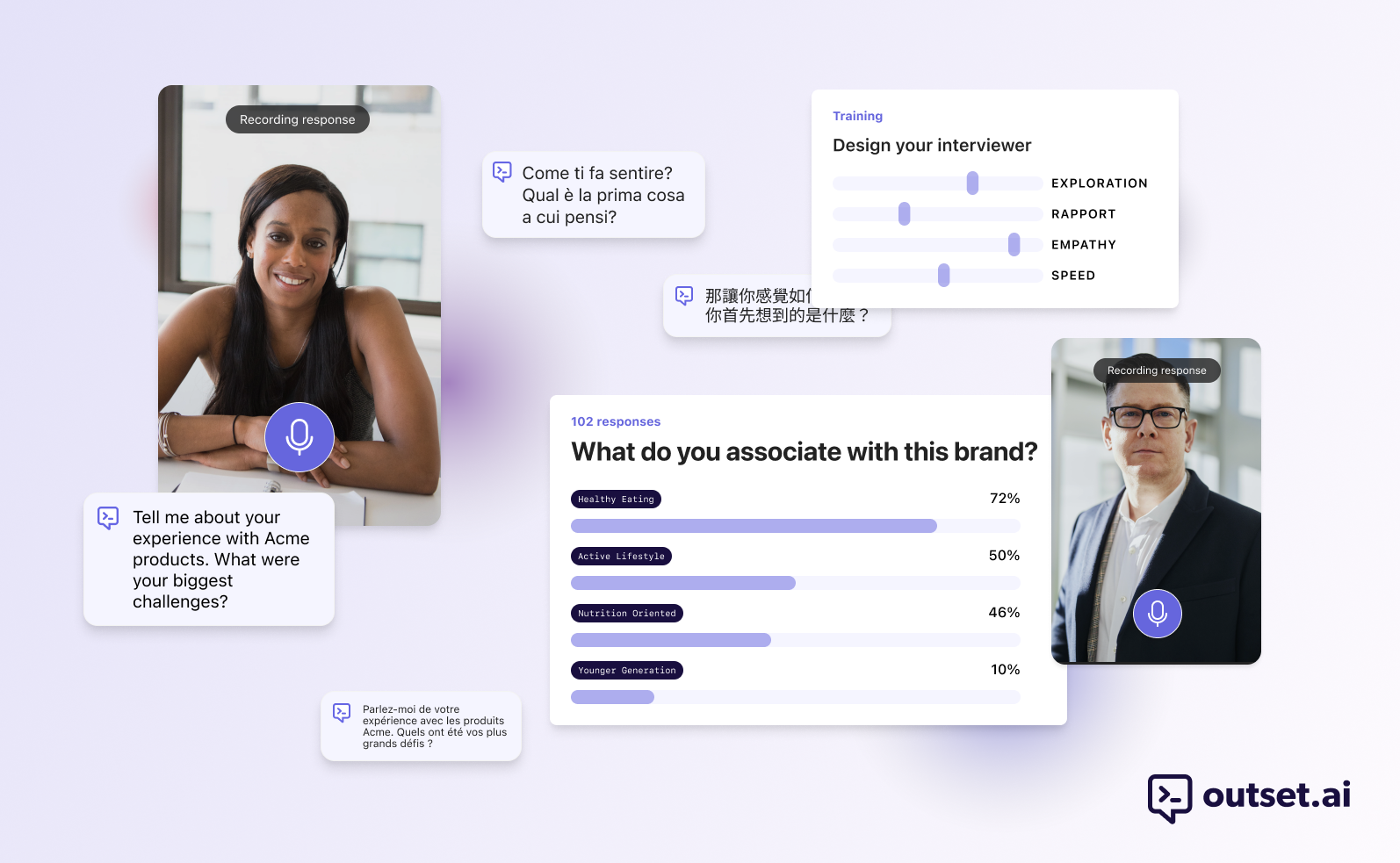

- The “last mile” for agents: There is a growing recognition that making enterprise agents truly useful in production requires more than today’s models offer off the shelf. Agentic systems need more domain specificity, more scaffolding for navigating ambiguous decisions, and a better ability to “learn on the fly.” Several approaches are emerging to bridge these gaps:

  - Memory and continual learning: Instilling memory into models remains a challenging research problem, as does continual learning, the ability of models to “learn on the fly” by updating their weights based on interactions. Exciting research directions include sparse memory finetuning, cartridges, test-time training to discover, and other approaches.

  - Reinforcement learning (RL): RL enables systems to learn from experience, which works well where organizations can define specific KPIs and outcomes are verifiable. A crop of startups has emerged to provide “RL-as-a-service” for enterprises. Companies like Prime Intellect are abstracting away the complexity of managing RL pipelines and building custom models for customers.

  - Context management: “Context graphs” have emerged as a key concept for storing enterprise decision traces, which can help guide agents as they tackle long-horizon tasks.

- World models come into their own: World models will take center stage this year with increasing technical progress and commercial momentum. Already, we’ve seen impressive product launches with Radical portfolio company World Labs’ Marble (and now the Marble API!) and technical demonstrations with Google DeepMind’s Genie. I anticipate that world models will become increasingly central to workflows in industries like gaming, entertainment, and robotics.

- Neolabs and the disaggregation of research: Several years ago, the consensus narrative was that all important AI research happened in just a handful of industrial labs. Today, these labs have become juggernauts balancing not just core research, but also fast-growing business lines and ever-expanding commercial agendas. As a result, we’re seeing frontier research begin to unbundle into more specialized, venture-backed “neolabs,” which focus talent and resources on new approaches or particular research agendas. Beyond Thinking Machines, notable examples span the sciences (Radical portfolio company Periodic Labs), chip design (Ricursive Intelligence), continual learning and memory, and more. I anticipate that we’ll see more spin-outs from the labs in 2026. Importantly, we’ll also see early indications of performance from the first crop. The core question: can neolabs drive game-changing new research, and can they build products and businesses around it?

- Western challengers in the open model ecosystem: Chinese open-weight models were the clear center of gravity in 2025, sparked by the notorious “DeepSeek Moment” and sustained by relentless momentum from DeepSeek and Alibaba’s Qwen, which surpassed Llama to become the most-downloaded LLM family on Hugging Face in September 2025. Meta’s Llama 4 rollout, meanwhile, landed with a mixed community reception, creating a vacuum for a new Western standard-bearer. Into that gap, a credible Western bench is forming. OpenAI’s Apache-licensed gpt-oss models, Mistral’s newly open-weight Mistral 3 family, NVIDIA’s Nemotron 3 releases, and fully open research efforts like Ai2’s OLMo 2 are collectively rebuilding momentum. New institutions are emerging too, most notably Reflection AI, which has refocused on building frontier open intelligence and is aiming to release its first model on that trajectory.

- Real-world inputs are the bottleneck: Chips have long been a bottleneck for AI progress, but real-world dependencies will go beyond just chips in 2026 and encompass the inputs necessary to scale chips. Think rare earths, energy infrastructure, data center cooling systems, and real estate.

- Chip heterogeneity increases: As compute bottlenecks become more acute, organizations will be more willing to use alternative chipsets, particularly for inference. Growing use of alternative chipsets will be a boon to challenger chip startups (NVIDIA’s Groq acquisition is a bellwether here), but it will also create new bottlenecks in software, especially the need for high-quality kernels for non-NVIDIA hardware. LLMs can help! While they can’t yet reliably write high-quality kernels out of the box, efforts like KernelBench show growing progress. We’ll see LLM-generated kernels get a lot better this year, and these efforts may help unlock utilization of challenger chips.

- Long-horizon tasks are unlocked: Claude Opus 4.5 is a meaningful step forward relative to previous model generations in terms of practical agent reliability, especially for coding and computer use. I anticipate we’ll see agents more capable of performing long-horizon, multi-step tasks, which will unlock more economically valuable work across the enterprise.

- More formal methods: The more important the jobs agents take on, the stronger the guarantees needed to verify their outputs and constrain their activity. Particularly in high-risk domains like aerospace and defense, formal methods can wrap around autonomous systems to provide runtime assurance and safety control. Used alongside probabilistic systems, they can make agents more deployable at scale.

- Vertical-specific data providers: Vertical-specific data providers are quietly becoming one of the highest-leverage businesses in the AI stack. As frontier model capabilities converge, performance is increasingly bottlenecked by the data you can train a system on: rights-cleared, high-signal data that matches the real-world distribution of a domain. This can include robot trajectories and failure modes, instrument exhaust, longitudinal scientific measurements, compliant biomedical corpora, 3D assets, and more. The winners won’t just “sell datasets,” but will run a continuous collection-and-verification engine (schemas, labeling/QA, provenance, and gold evals) that makes probabilistic systems reliable where it matters. In an agentic world, the moat may be less about model weights and more about owning the reality anchors that ground models.

- AI that builds AI: We’re entering a new phase where AI doesn’t just use software, but helps build the next generation of AI. The idea isn’t new: Google’s early AutoML work showed that search and optimization can discover architectures and hyperparameters that beat hand-tuning, but it was largely bounded to narrow design spaces. What’s different now is that frontier models can act as end-to-end engineering agents, writing code, generating experiments, interpreting results, and iterating, while modern training stacks, abundant open-source tooling, and scalable compute make rapid “build-measure-learn” loops cheap enough to run continuously. This year will bring meaningful steps toward this kind of recursive self-improvement, along with a number of new startups focused squarely on the effort.

In 2026, the bottleneck is no longer raw intelligence, but rather real-world utility: memory, context, trusted data, and the physical inputs that make models useful and durable. As learning moves online (RL, continual learning, etc.), advantages will accrue to those who own feedback loops and can ship autonomous systems with guardrails. The companies that win 2026 won’t just make models smarter; they’ll make them reliable and dynamic enough to run the world’s workflows.

AI News This Week

Why Software Stocks are Getting Pummelled (The Economist)

Software stocks have declined about 25% from their October peak amid fears that AI will disrupt the industry. Two concerns dominate: AI coding tools will enable companies to build software in-house, and AI-native startups will steal market share from incumbents. Rebuttals note that companies have steadily outsourced software development for decades (the in-house share fell from 30% in the 1990s to 15% today) because building software distracts from their core business. AI-generated code also remains “sloppy,” with conceptual errors, and its costs will rise as subsidies end. Meanwhile, established providers can spread development costs across customers, use AI more effectively, and have deep pockets for AI acquisitions.

Where Is A.I. Taking Us? (NYT)

The New York Times convened eight AI experts whose answers revealed sharp disagreements on AI’s trajectory. Most predict AI will have a transformative impact on programming, with developers already completing tasks 56% faster with AI tools, though only a third trust the outputs. Nick Frosst, co-founder of Radical Ventures portfolio company Cohere, expects AI to “fade into the background like GPS,” becoming mundane infrastructure powering daily workflows. Some of the experts argue that artificial general intelligence remains unlikely within 10 years, citing missing capabilities in abstraction and self-awareness. The panel split on unemployment risks: most predict that daily chatbot use will become standard, yet they disagree on whether it will displace jobs or merely shift the nature of work. The prevailing advice was to develop uniquely human skills such as creativity, critical thinking, and face-to-face collaboration while learning to work alongside AI systems.

Long-Running AI Agents Transform Work Architecture (WSJ)

A new class of “long-running” AI agents is fundamentally reshaping how work gets done. Unlike previous AI iterations that generate immediate responses, new agent platforms accept broad objectives and operate autonomously for extended periods while maintaining context. The architectural shift allows agents to dynamically plan, iterate, and execute without rigid preprogramming. Some experts point out that this “shifts work from creation to editing,” requiring taste and judgment over syntax, and that junior employees who are native to AI tools may outperform senior employees who insist on manual work.

China’s Genius Plan to Win the AI Race is Already Paying Off (FT)

China’s state-driven “genius classes” are proving decisive in the AI race, producing 100,000 elite science students annually who form the backbone of the country’s AI breakthroughs. The release of DeepSeek-R1 exemplifies the payoff: the company’s team of 100+ researchers, almost all of whom came through genius classes, built a world-class reasoning model at a fraction of typical costs. Over 1,000 registered generative AI models now operate in China, enabled by the five million STEM majors who graduate each year and go on to fill core positions at leading AI companies. China’s approach differs fundamentally from Western models: students bypass traditional exams and focus on college-level problem-solving from ages 16 to 18 through immersive competition training.

Research: When AI Builds AI (CSET)

Frontier AI companies already use their own models to accelerate R&D, creating conditions for compounding acceleration in which productivity gains could reach 10x, 100x, then 1000x over human-only R&D. It is unknown whether AI R&D has “O-ring automation” properties, in which certain tasks remain difficult for AI but suited to humans, preserving a human comparative advantage and slowing acceleration. In acceleration scenarios, advanced systems may exist inside companies long before their effects are visible externally, making AI R&D “the single most existentially important technology development on the planet.”

Radical Reads is edited by Ebin Tomy (Analyst, Radical Ventures)